Analytica House

Sep 4, 2022

Log File Analysis for SEO Performance

From an SEO performance perspective, log file analysis, and specifically an examination of the server’s access logs, tells us exactly how search engine bots behave when they crawl our site.

In this article, we’ll answer “How do you perform a detailed log file analysis for SEO?” and “What are the benefits of log analysis?” using various scenario-based examples.

What Is a Log File?

Log files record who accessed your site, when, from which IP, and which URLs they requested.

These requests come not only from human users but also from Googlebot and other search engine crawlers.

Your web server writes these logs continuously and rotates or overwrites them after a set period.

What Data Does a Log File Contain?

A typical access log entry looks like this:

27.300.14.1 - - [14/Sep/2017:17:10:07 -0400] "GET https://allthedogs.com/dog1/ HTTP/1.1" 200 "https://allthedogs.com" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

Depending on your server configuration, you might also log response size or request latency. A breakdown of the key fields:

- IP address of the requester

- Timestamp of the request

- Method (GET or POST)

- Requested URL

- HTTP status code (for example 200, 301, or 404)

- User-Agent string, telling you the client or crawler type
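The fields listed above can be pulled out of each line with a regular expression. The following is a minimal sketch, assuming a common/combined-style log layout; real formats vary with server configuration, so the pattern will likely need adjusting. The names `LOG_PATTERN` and `parse_line` and the sample line are illustrative assumptions, not part of any particular tool.

```python
import re
from datetime import datetime

# Pattern for a common/combined-style access log line (an assumption about
# the layout). The response size and the referrer/user-agent pair are
# optional because not every server configuration logs them.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ '
    r'\[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<url>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3})'
    r'(?: (?P<size>\d+|-))?'
    r'(?: "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)")?'
)

def parse_line(line):
    """Return a dict of fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry

# Hypothetical sample line (192.0.2.1 is a documentation-only address).
sample = (
    '192.0.2.1 - - [14/Sep/2017:17:10:07 -0400] '
    '"GET /dog1/ HTTP/1.1" 200 5120 "https://allthedogs.com" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
entry = parse_line(sample)
print(entry["url"], entry["status"], entry["user_agent"])
```

Lines that do not match return `None`, which makes it easy to count and inspect unparsed entries instead of silently dropping them.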

Googlebot IP Address List

In November 2021, Google published the full list of IP ranges it uses to crawl websites. You can find the JSON here:

https://developers.google.com/search/apis/ipranges/googlebot.json
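Because a User-Agent string can be spoofed, the published ranges are useful for checking whether a request that claims to be Googlebot really came from a Google address. Below is a minimal sketch using Python's standard `ipaddress` module. It assumes the JSON has already been downloaded and parsed, and that it contains a `prefixes` list whose items carry an `ipv4Prefix` or `ipv6Prefix` key; the two prefixes in the sample are an illustrative excerpt only, so always load the current file in practice.

```python
import ipaddress

def load_networks(ranges):
    """Turn the parsed googlebot.json document into ip_network objects."""
    networks = []
    for prefix in ranges["prefixes"]:
        cidr = prefix.get("ipv4Prefix") or prefix.get("ipv6Prefix")
        if cidr:
            networks.append(ipaddress.ip_network(cidr))
    return networks

def is_googlebot_ip(ip, networks):
    """Return True if the address falls inside any of the given networks."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)

# Illustrative excerpt in the assumed shape of the published file.
sample_ranges = {
    "prefixes": [
        {"ipv6Prefix": "2001:4860:4801:10::/64"},
        {"ipv4Prefix": "66.249.64.0/27"},
    ]
}
networks = load_networks(sample_ranges)
print(is_googlebot_ip("66.249.64.5", networks))
print(is_googlebot_ip("203.0.113.9", networks))
```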

Where to Find Your Log Files

Logs live on your web server or—in some cases—on your CDN. How you access them depends on your server stack (nginx, Apache, IIS) and control panel. For example:

cPanel: /usr/local/apache/logs/ or via the “Raw Access Logs” feature

Plesk: /var/www/vhosts/system/your-domain.com/logs/

Why Is Log Analysis Important for SEO?

Analyzing logs tells you exactly which URLs search bots actually fetch—and whether they encounter errors.

Crawlers such as Screaming Frog or DeepCrawl discover URLs only by following links, whereas search engine bots also revisit URLs they have seen before. If a page existed two days ago but now returns a 404, only the logs reveal that mismatch.

Log analysis can show you:

- Which pages bots crawl most often (and which they ignore)

- Whether bots encounter 4xx or 5xx errors

- Which orphaned (unlinked) pages are still being crawled
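As a rough illustration of the first point, crawl frequency per URL can be tallied with a counter. This sketch assumes the log has already been parsed into dictionaries with `url` and `user_agent` keys (hypothetical field names) and matches bots by a simple substring in the User-Agent, which does not protect against spoofing.

```python
from collections import Counter

def crawl_counts(entries, bot_token="Googlebot"):
    """Count requests per URL for entries whose User-Agent mentions the bot."""
    return Counter(
        entry["url"] for entry in entries if bot_token in entry["user_agent"]
    )

# Hypothetical parsed entries.
entries = [
    {"url": "/dog1/", "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1)"},
    {"url": "/dog1/", "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1)"},
    {"url": "/dog2/", "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1)"},
    {"url": "/dog3/", "user_agent": "Mozilla/5.0 (Windows NT 10.0) Chrome/104"},
]
counts = crawl_counts(entries)
for url, hits in counts.most_common():
    print(url, hits)
```

URLs that exist on the site but never appear in the counter are the ones bots are ignoring.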

Tools for Log File Analysis

Popular log analysis tools include:

- Splunk

- Logz.io

- Screaming Frog Log File Analyser (free up to 1,000 lines)

- Semrush Log File Analyzer

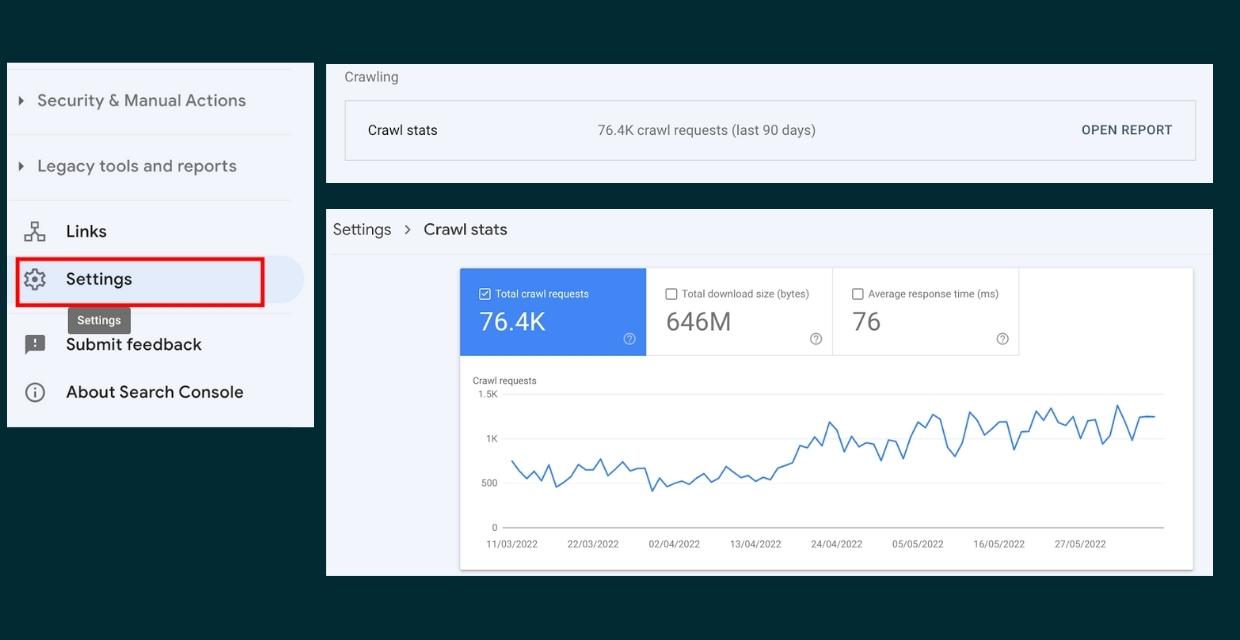

GSC Crawl Stats vs. Logs

Google Search Console’s Crawl Stats report shows some high-level crawl data, but it’s nowhere near as granular as raw logs.

To access it: Settings > Crawling > Open report.

Interpreting Crawl Stats Status

- All good: No significant crawl availability issues in the last 90 days

- Warning: At least one significant issue in the last 90 days, but it occurred more than 7 days ago

- Critical: At least one significant issue detected within the last 7 days

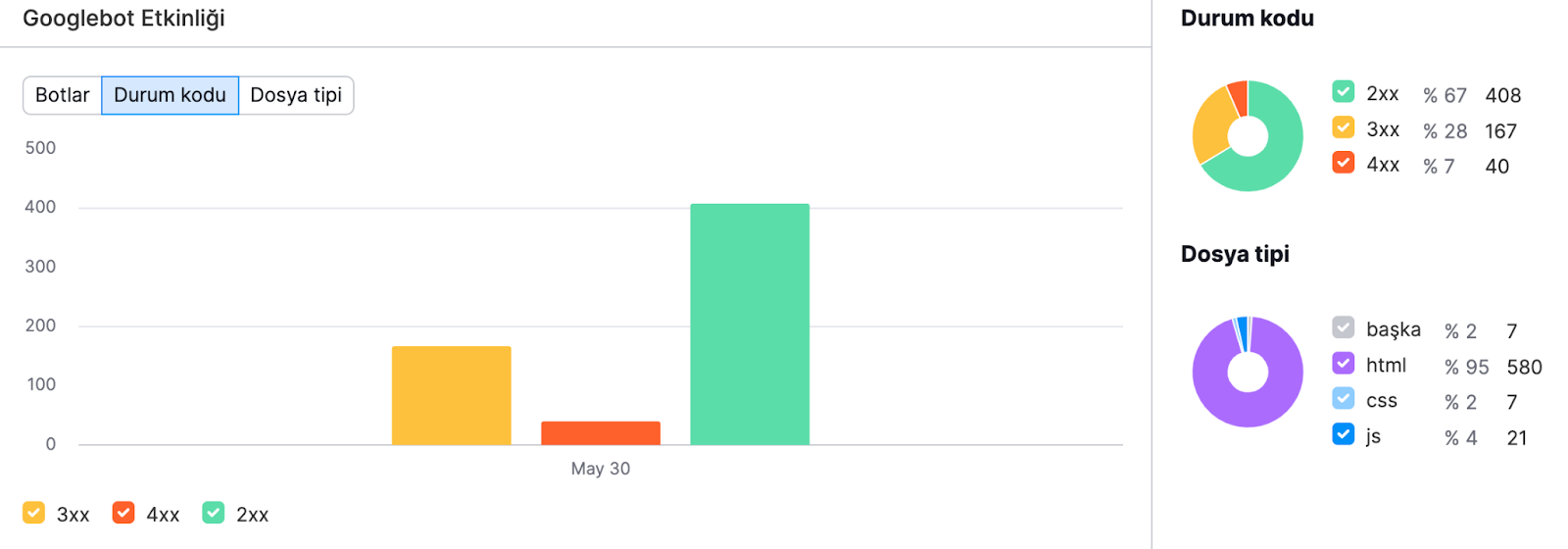

The report also breaks requests down by status code, file type, and Googlebot type (desktop, smartphone, AdsBot, image, etc.).

How to Interpret Your Log Analysis

With logs, you can answer:

- What percentage of my site do bots actually crawl?

- Which sections never get crawled?

- How deep into my site do bots venture?

- How often do they revisit updated pages?

- How quickly are new pages discovered?

- Did a site structure change affect crawl patterns?

- Are resources (CSS, JS) delivered quickly?
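The first two questions come down to comparing the URLs you know about with the URLs bots actually requested. Here is a minimal sketch, assuming you have a list of known URLs (for example from a sitemap export) and a list of URLs seen in bot requests; both inputs and the function name `crawl_coverage` are hypothetical.

```python
def crawl_coverage(known_urls, crawled_urls):
    """Return (percentage of known URLs crawled, set of URLs never crawled)."""
    known = set(known_urls)
    if not known:
        return 0.0, set()
    crawled = known & set(crawled_urls)
    return 100 * len(crawled) / len(known), known - crawled

# Hypothetical inputs: sitemap URLs versus URLs found in bot log entries.
sitemap_urls = ["/", "/dog1/", "/dog2/", "/blog/"]
bot_urls = ["/", "/dog1/", "/dog1/", "/old-page/"]
percent, never_crawled = crawl_coverage(sitemap_urls, bot_urls)
print(percent, sorted(never_crawled))
```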

7 Example Scenarios

Here are seven practical ways log analysis can inform your SEO strategy:

1. Understand Crawl Behavior

Check which status codes bots see most often and which file types they request. For example, in Screaming Frog’s log report:

- 2xx codes on HTML/CSS/JS are ideal—pages load successfully.

- 4xx codes indicate broken links or removed pages—these can waste crawl budget.

- 3xx codes show redirects—make sure only necessary redirects remain.

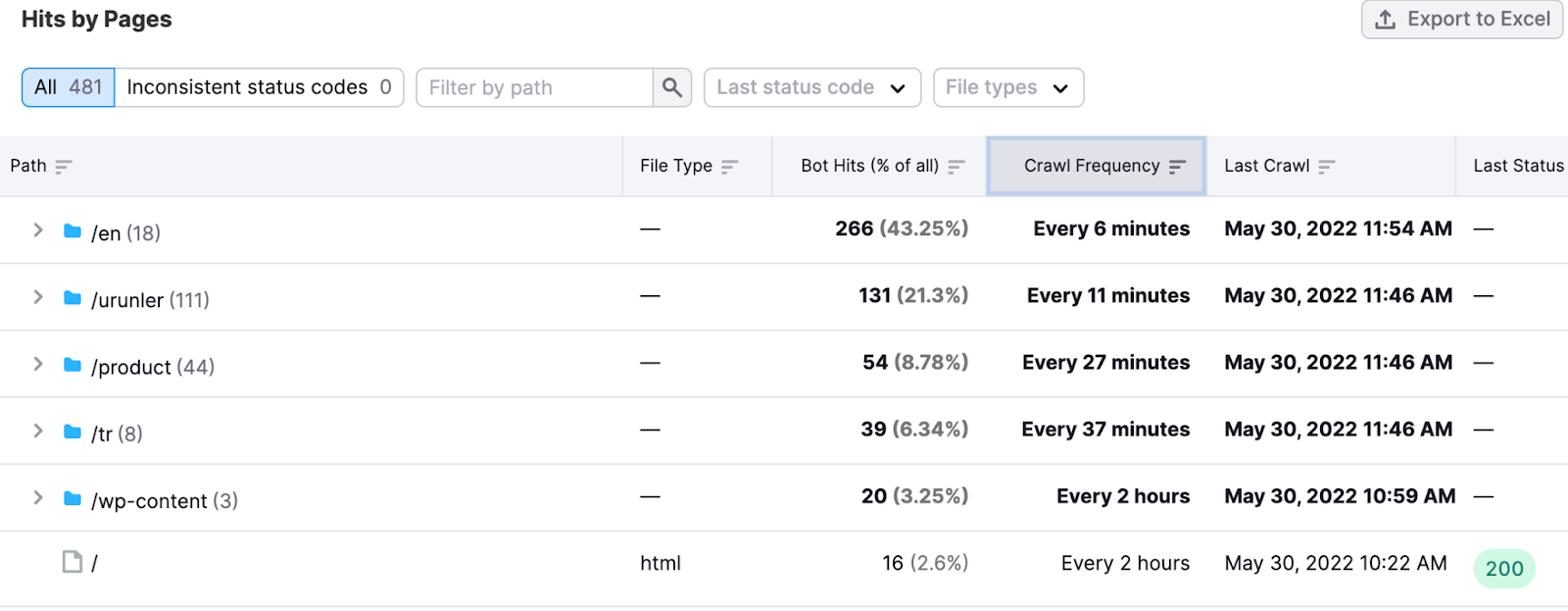
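If you prefer to build a similar breakdown yourself, the sketch below groups bot requests by file extension and status class. It assumes entries are already parsed into `(url, status)` pairs; URLs without an extension are lumped under a `(none)` label, which is a simplification since those are usually HTML pages.

```python
from collections import Counter
from pathlib import PurePosixPath
from urllib.parse import urlsplit

def status_breakdown(entries):
    """Count requests per (file extension, status class such as '2xx')."""
    breakdown = Counter()
    for url, status in entries:
        suffix = PurePosixPath(urlsplit(url).path).suffix.lower() or "(none)"
        breakdown[(suffix, f"{status // 100}xx")] += 1
    return breakdown

# Hypothetical parsed bot requests.
entries = [
    ("/dog1/", 200),
    ("/static/app.js", 200),
    ("/static/site.css?v=3", 200),
    ("/old-dog/", 404),
    ("/dogs", 301),
]
breakdown = status_breakdown(entries)
for key, hits in sorted(breakdown.items()):
    print(key, hits)
```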

2. Identify Your Most Important Pages

Bots tend to allocate more crawl budget to pages with more internal or external links. If the logs show disproportionate crawling of one section, for instance an English-language subdirectory, you might adjust your navigation or internal linking.

3. Optimize Crawl Budget

Even smaller sites benefit from eliminating waste. Use logs to find URLs with unnecessary parameter crawls, frequent 301s, or robots.txt misconfigurations.

- Remove parameterized URLs from your sitemap and internal links.

- Set long Cache-Control headers for static assets.

- Collapse redirect chains so each old URL redirects to its final destination in a single hop.

- Fix robots.txt typos so bots respect your crawl rules.
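To see which URL parameters attract the most bot requests, you can tally the query parameter names found in crawled URLs. This is a minimal sketch using the standard `urllib.parse` module; the URL list and the function name `parameter_hits` are hypothetical.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit

def parameter_hits(urls):
    """Count bot requests per query parameter name."""
    counts = Counter()
    for url in urls:
        query = urlsplit(url).query
        for name, _value in parse_qsl(query, keep_blank_values=True):
            counts[name] += 1
    return counts

# Hypothetical URLs requested by bots.
bot_urls = [
    "/shoes?color=red&sort=price",
    "/shoes?sort=name",
    "/shoes",
    "/search?q=",
]
hits = parameter_hits(bot_urls)
print(hits.most_common())
```

Parameters with high counts that only sort or filter existing content are typical candidates for removal from internal links and the sitemap.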

4. Detect Crawl Errors

High volumes of 4xx/5xx responses can cause bots to throttle or stop crawling. Compare 2xx vs. error rates and fix broken pages promptly.
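One way to compare successful and failed responses over time is a daily error rate. The sketch below assumes entries have been reduced to `(day, status)` pairs and treats any status of 400 or above as an error; both choices are simplifying assumptions.

```python
from collections import defaultdict

def daily_error_rate(entries):
    """Return {day: share of requests with a 4xx/5xx status} per day."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for day, status in entries:
        totals[day] += 1
        if status >= 400:
            errors[day] += 1
    return {day: errors[day] / totals[day] for day in totals}

# Hypothetical bot requests as (day, status) pairs.
entries = [
    ("2022-09-01", 200),
    ("2022-09-01", 404),
    ("2022-09-02", 200),
    ("2022-09-02", 200),
    ("2022-09-02", 500),
    ("2022-09-02", 301),
]
rates = daily_error_rate(entries)
for day in sorted(rates):
    print(day, f"{rates[day]:.0%}")
```

A sudden jump in the daily rate is usually a better signal than the absolute number of errors.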

5. Find Crawlable but Unindexed Pages

To find pages that are eligible for indexing but rarely crawled, look at each URL’s most recent bot visit and filter for pages that were last crawled several weeks ago.

Add these pages to your sitemap, link to them internally, and refresh their content.
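The last-crawl filter can be sketched as follows, assuming entries are `(url, timestamp)` pairs for bot requests. The four-week threshold is an arbitrary example value, and the function name `stale_urls` is hypothetical.

```python
from datetime import datetime, timedelta

def stale_urls(entries, now, max_age=timedelta(weeks=4)):
    """Return URLs whose most recent bot visit is older than max_age."""
    last_seen = {}
    for url, seen_at in entries:
        if url not in last_seen or seen_at > last_seen[url]:
            last_seen[url] = seen_at
    return sorted(
        url for url, seen_at in last_seen.items() if now - seen_at > max_age
    )

# Hypothetical bot visits.
entries = [
    ("/dog1/", datetime(2022, 9, 1)),
    ("/dog2/", datetime(2022, 6, 15)),
    ("/dog2/", datetime(2022, 7, 1)),
    ("/dog3/", datetime(2022, 5, 20)),
]
stale = stale_urls(entries, now=datetime(2022, 9, 4))
print(stale)
```

Pages that never appear in the logs at all will not show up here, so this check works best alongside a comparison against your sitemap.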

6. Discover Orphan Pages

Orphan pages (unlinked) may still appear in logs. Filter logs for 200-OK HTML URLs with zero internal referrers. Then add internal links or remove them if they aren’t needed.
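Since bots often send no referrer at all, a common alternative to referrer filtering is to compare logged URLs against the URLs a site crawler reached by following internal links. The sketch below uses that swapped-in approach: it assumes `(url, status)` pairs from the logs and a list of internally linked URLs from a crawl export, both hypothetical inputs.

```python
def find_orphans(log_entries, linked_urls):
    """Return URLs that bots fetched with a 200 but no internal link reaches."""
    linked = set(linked_urls)
    fetched_ok = {url for url, status in log_entries if status == 200}
    return sorted(fetched_ok - linked)

# Hypothetical inputs: bot log entries and URLs found by a link-following crawl.
log_entries = [
    ("/dog1/", 200),
    ("/landing-2019/", 200),
    ("/old-dog/", 404),
    ("/promo/", 200),
]
linked_urls = ["/", "/dog1/", "/dog2/"]
orphans = find_orphans(log_entries, linked_urls)
print(orphans)
```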

7. Aid Site Migrations

During a migration, logs show your most-crawled URLs so you can prioritize preserving their redirect paths. After migration, logs reveal which URLs bots can no longer find.
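Before a migration, a quick check is whether the most-crawled URLs all have an entry in the redirect plan. This is a minimal sketch, assuming a list of bot-requested URLs and a redirect map from old URL to new URL; the names `unmapped_top_urls` and `redirect_map` are hypothetical.

```python
from collections import Counter

def unmapped_top_urls(crawled_urls, redirect_map, top_n=100):
    """Return (url, hits) for the most-crawled URLs missing from the map."""
    counts = Counter(crawled_urls)
    return [
        (url, hits)
        for url, hits in counts.most_common(top_n)
        if url not in redirect_map
    ]

# Hypothetical pre-migration bot requests and redirect plan.
crawled_urls = ["/dog1/", "/dog1/", "/dog1/", "/dog2/", "/dog2/", "/dog3/"]
redirect_map = {"/dog1/": "/dogs/one/", "/dog3/": "/dogs/three/"}
missing = unmapped_top_urls(crawled_urls, redirect_map)
print(missing)
```

Running the same comparison against post-migration logs, filtered to 404 responses, shows which old URLs bots still request but can no longer find.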

Using Log Analysis to Drive SEO Fixes

By acting on your log insights, you can:

- Remove non-200 URLs from your sitemap

- Noindex or disallow low-value pages

- Ensure canonical tags highlight key pages

- Boost crawl frequency by adding internal links to strategic pages

- Guarantee all internal links point to indexable URLs

- Free up crawl budget for new and updated content

- Verify category pages are crawled regularly

Conclusion

Log file analysis is a powerful way to discover hidden SEO issues and refine your crawling strategy. If you found this guide helpful, please share it on social media so others can benefit!
