Analytica House

Sep 4, 2022

Crawl Budget Optimization in 7 Steps

How can you optimize your website’s crawl budget for search engine bots? In this blog post, you will learn 7 useful tips for adjusting your site so that crawlers (spiders, bots) can crawl it more easily.

Crawl budget optimization is a set of practices that ensure search engine bots spend their time crawling the important pages that provide value to users, rather than the unimportant pages they would otherwise crawl frequently. Because a page must be crawled before it can be indexed, this can indirectly help your site rank higher in search engine results pages. For detailed information about these practices, see Google’s guide to managing crawl budget for large sites.

What is Crawl Budget?

We can define crawl budget as how often and for how long search engine bots visit our website. There are billions of URLs on the internet, so the time and resources that search engine bots, especially Googlebot, can spend crawling them are limited. Because the time a bot allocates to any one site is limited, there is no guarantee that every URL it discovers during a crawl will be indexed.

By optimizing your crawl budget, you can increase how often and how long search engine bots visit your website. The more frequently Googlebot visits a website, the faster new and updated content gets indexed.

When determining crawl budget, Google evaluates two factors, the crawl capacity limit and crawl demand, to decide how much time it will spend on a domain. If both are low, Googlebot crawls your site less frequently. Unless your website is very large, with many pages, you can usually afford to neglect crawl budget optimization.

Why is Crawl Budget Optimization Neglected?

The fact that crawl budget is not a ranking factor on its own may lead some experts to neglect it. Google’s official blog post on the topic addresses this directly: according to Google, if your site does not have millions of pages, you do not need to worry about crawl budget. However, if your website has millions of pages, like Amazon, Trendyol, Hepsiburada, or N11, crawl budget optimization is a must.

How Can You Optimize Your Crawl Budget Today?

Optimizing the crawl budget for your website means fixing the issues that waste time and resources during crawling.

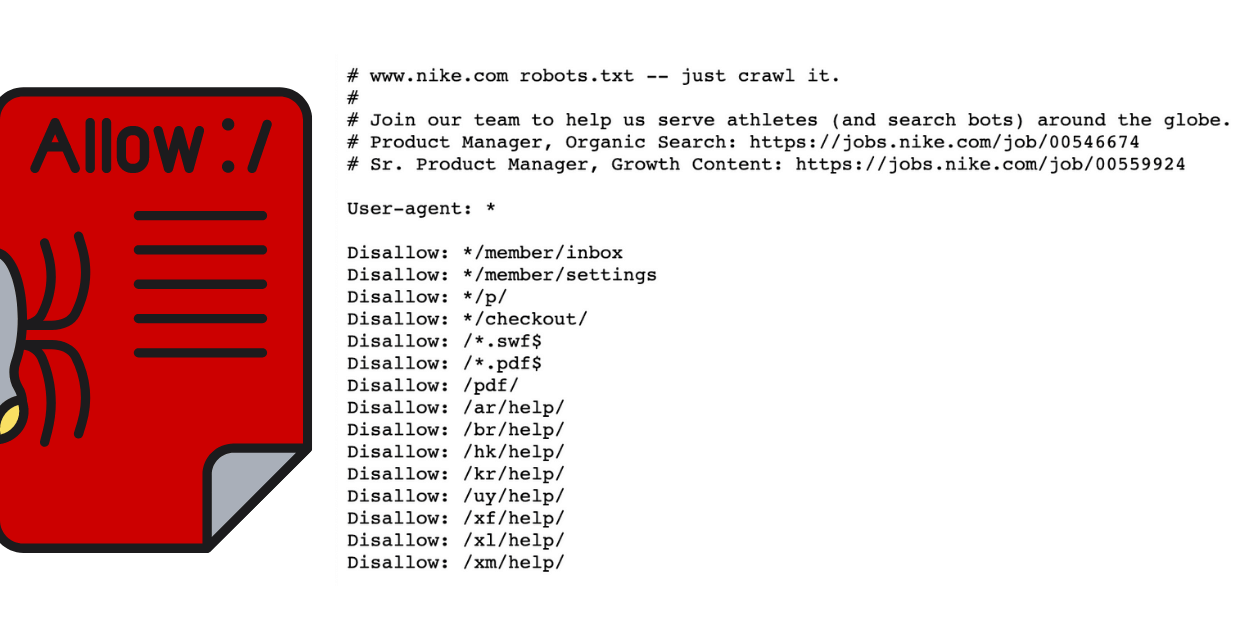

1. Configure the Robots.txt File

When Googlebot and other search engine bots visit our website, the first place they check is the robots.txt file. Search engine bots follow the instructions in the robots.txt file during crawling. Therefore, you should configure the file to allow crawling of important pages and block unimportant ones.

Robots.txt example for crawl budget optimization:

    User-agent: *
    Disallow: /cart
    Disallow: /wishlist

The robots.txt example above tells all crawlers (User-agent: *) not to crawl the website’s dynamically generated cart and wishlist pages.
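To check which URLs a set of robots.txt rules blocks before you deploy it, you can test the rules locally. The sketch below is a minimal example using Python’s standard `urllib.robotparser` module; the example.com URLs are placeholders. Note that this module uses simple prefix matching and does not implement the `*` and `$` path wildcards that Googlebot supports, so treat it as a rough check rather than a replica of Google’s own parser.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt rules from the example above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Disallow: /wishlist
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content held in memory (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def crawl_report(parser: RobotFileParser, urls: list[str],
                 user_agent: str = "Googlebot") -> dict[str, bool]:
    """Map each URL to True if `user_agent` may crawl it, False if blocked."""
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    urls = [
        "https://www.example.com/cart",
        "https://www.example.com/wishlist?item=42",
        "https://www.example.com/category/shoes",
    ]
    for url, allowed in crawl_report(parser, urls).items():
        print("ALLOWED" if allowed else "BLOCKED", url)
```

Running it should report the cart and wishlist URLs as blocked and the category URL as allowed. Because matching is by prefix, `Disallow: /cart` would also block a path such as `/cartoon`, which is worth keeping in mind when choosing rule paths.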

2. Watch Out for Redirect Chains

Imagine entering a website and being redirected from category X to category Y, and then to category Z, just to reach a product. Annoying, right? And it also took quite a while to reach the product.

This scenario hurts both user experience and search engine bots. When a URL redirects to another URL, which in turn redirects to yet another before the bot reaches the final page, this is called a "redirect chain." SEO experts recommend keeping redirects on your website to a minimum and avoiding chains altogether.

Redirect chains formed by URLs pointing to each other waste the site’s crawl budget. In some cases, if there are too many redirects, Google may abandon the crawl, leaving important pages that should have been indexed unindexed.
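Finding chains in practice means following each redirect to its final destination. The sketch below assumes you already have a redirect map (for example, exported from a crawler or your server configuration) as a Python dictionary of source path to target path; the paths and the `max_hops` safety cap of 10 are illustrative assumptions. It reports every source that needs more than one hop and builds a flattened map in which each source points straight to its final URL.

```python
# Hypothetical redirect map: source path -> target path.
REDIRECTS = {
    "/category-x": "/category-y",
    "/category-y": "/category-z",
    "/category-z": "/product/123",
    "/old-blog": "/blog",
}


def resolve_chain(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow redirects from `start` and return every URL visited, in order."""
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [target]))
        chain.append(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} hops starting at {start}")
    return chain


def find_chains(redirects: dict[str, str]) -> dict[str, list[str]]:
    """Return the sources that need more than one hop to reach their final URL."""
    chains = {}
    for source in redirects:
        chain = resolve_chain(source, redirects)
        if len(chain) > 2:  # start URL plus at least two redirect targets
            chains[source] = chain
    return chains


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source directly at its final destination (one hop each)."""
    return {source: resolve_chain(source, redirects)[-1] for source in redirects}


if __name__ == "__main__":
    for source, chain in find_chains(REDIRECTS).items():
        print(f"{len(chain) - 1} hops:", " -> ".join(chain))
    print(flatten(REDIRECTS))
```

Updating internal links and server redirect rules to the flattened targets removes the intermediate hops for both users and bots.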

3. Prefer HTML if Possible

HTML is supported by all browsers. Although Google has become much better at parsing and rendering JavaScript in recent years, it still cannot always crawl JavaScript content fully. Other search engines, unfortunately, are not yet at Google’s level.

When Googlebot encounters a JavaScript website, it processes it in three stages:

- Crawling

- Rendering

- Indexing

Googlebot first crawls a JavaScript page, then renders it, and finally indexes it. However, pages wait in queues for crawling and rendering, and it is not always clear how soon each step will happen. Serving your content as HTML therefore makes your pages easier for search engines to both crawl and understand.
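One rough way to see how much of a page depends on JavaScript is to check whether its key content and links are already present in the raw HTML response, before any script runs. The sketch below uses Python’s standard `html.parser` module and does not execute JavaScript, so it only approximates what a crawler sees before the rendering step; the sample page and key phrases are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical raw HTML: the review text is only added by JavaScript.
RAW_HTML = """
<html><body>
  <h1>Running Shoes</h1>
  <a href="/product/123">Trail Runner</a>
  <div id="reviews"></div>
  <script>
    document.getElementById("reviews").textContent = "Great shoes!";
  </script>
</body></html>
"""


class RawHTMLAudit(HTMLParser):
    """Collects visible text and link targets from unrendered HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.text_parts: list[str] = []
        self.links: list[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Ignore script/style source and whitespace-only text nodes.
        if not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())


def audit(html: str, key_phrases: list[str]) -> tuple[dict[str, bool], list[str]]:
    """Report which key phrases appear in the raw HTML, plus the links found."""
    parser = RawHTMLAudit()
    parser.feed(html)
    parser.close()
    visible_text = " ".join(parser.text_parts)
    return {phrase: phrase in visible_text for phrase in key_phrases}, parser.links


if __name__ == "__main__":
    found, links = audit(RAW_HTML, ["Running Shoes", "Great shoes!"])
    print(found)
    print(links)
```

A phrase reported as missing here is content that a crawler can only see after rendering, which makes it the content most at risk of being indexed late.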

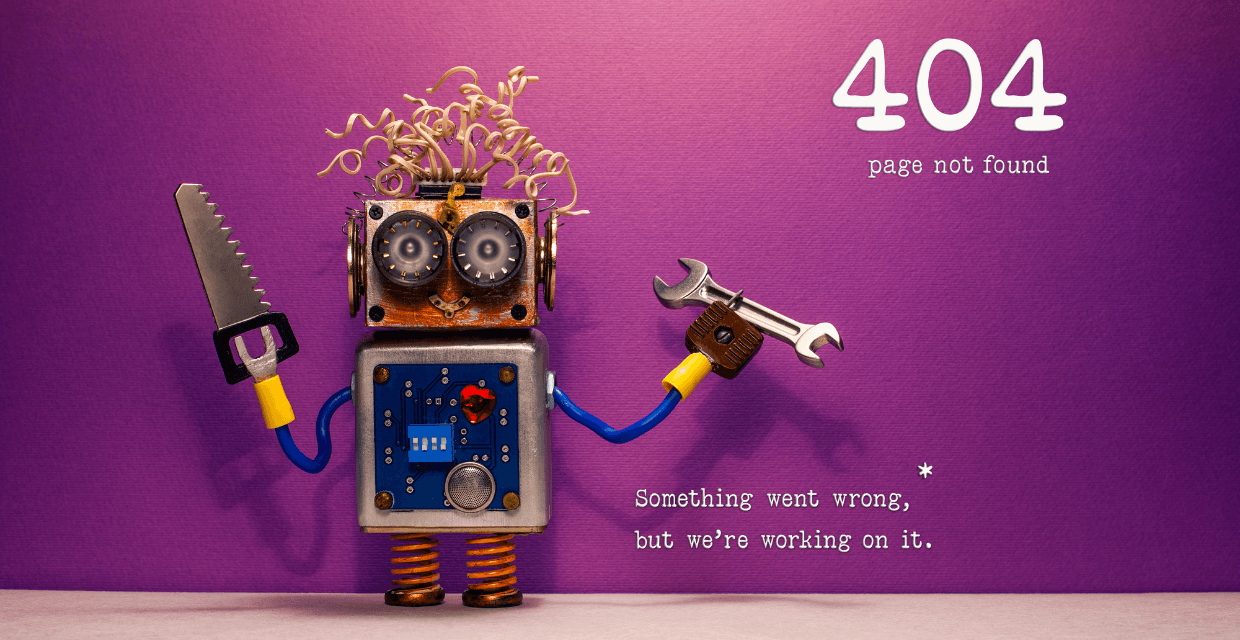

4. Eliminate HTTP Errors

When optimizing a website’s crawl budget, you need to eliminate HTTP error status codes. URLs on your website that return 404 and 410 status codes can negatively affect your crawl budget.

These errors hurt not only search engines but also user experience. You should update or remove links to URLs that return 4XX and 5XX status codes on your website. Beyond "resource not found" and server errors, links to URLs that return 3XX (redirect) status codes should also be updated to point directly to the final URL.

Popular tools such as DeepCrawl and Screaming Frog SEO Spider, widely used by SEO experts, crawl your website’s URLs and classify them by the HTTP status code they return.
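If you export a crawl from one of these tools, you can triage the results with a few lines of code. The sketch below assumes the export has been reduced to (URL, status code) pairs; the URLs are hypothetical. It groups URLs into 2XX, 3XX, 4XX, and 5XX classes and lists everything outside the 2XX range, i.e. the URLs whose links should be updated or removed.

```python
from collections import defaultdict

# Hypothetical crawl export: (URL, HTTP status code) pairs.
CRAWL_RESULTS = [
    ("https://www.example.com/", 200),
    ("https://www.example.com/old-category", 301),
    ("https://www.example.com/deleted-product", 404),
    ("https://www.example.com/discontinued", 410),
    ("https://www.example.com/search", 503),
]


def status_class(code: int) -> str:
    """Turn a status code such as 404 into its class label, e.g. '4XX'."""
    return f"{code // 100}XX"


def group_by_class(results: list[tuple[str, int]]) -> dict[str, list[str]]:
    """Group URLs by the class of the status code they returned."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, code in results:
        groups[status_class(code)].append(url)
    return dict(groups)


def needs_attention(results: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """URLs returning redirects (3XX), client errors (4XX) or server errors (5XX)."""
    return [(url, code) for url, code in results if code >= 300]


if __name__ == "__main__":
    for label, urls in sorted(group_by_class(CRAWL_RESULTS).items()):
        print(label, len(urls))
    for url, code in needs_attention(CRAWL_RESULTS):
        print(code, url)
```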

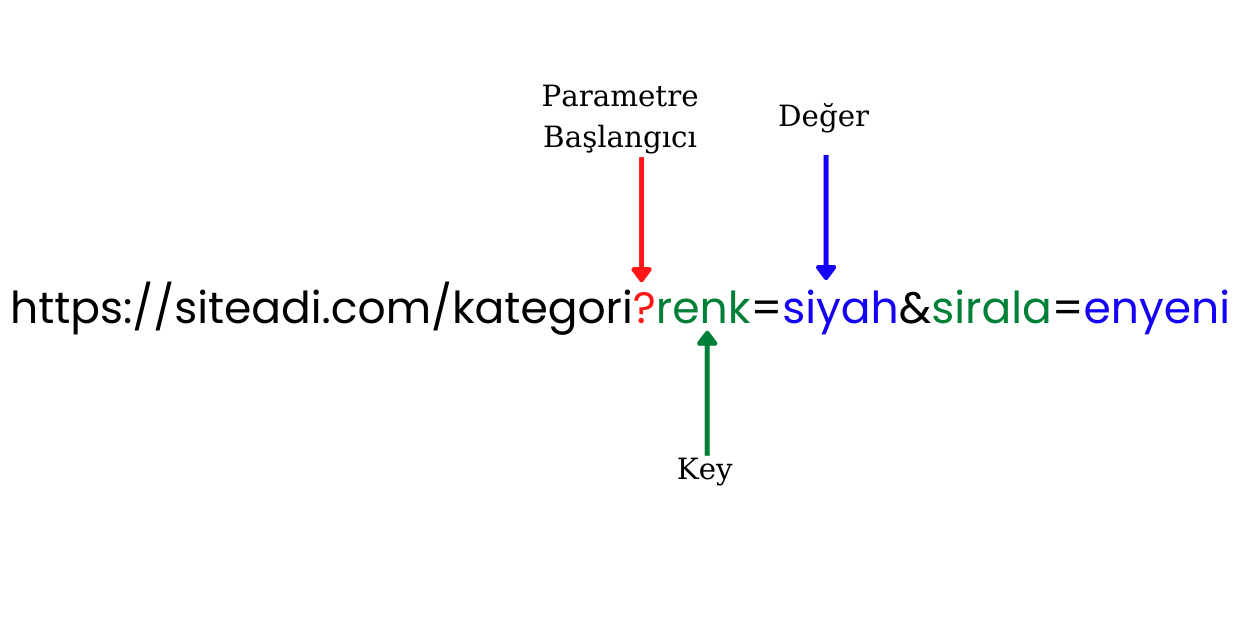

5. Use of URL Parameters

Search engine bots treat every distinct URL on a website as a separate page, so URL parameters that generate many URLs for the same content (for example, sorting or session parameters) are one of the biggest wastes of crawl budget. Blocking parameterized URLs from being crawled, and signaling this to Googlebot and other search engines, will help save your crawl budget and solve duplicate content problems.

In the past, the URL Parameters tool in Google Search Console let us tell Google how to handle the parameters on our site. However, Google has since become much better at determining which parameters matter, and as of April 26, 2022, it has deprecated the URL Parameters tool.

The best alternative to the URL Parameters tool is the robots.txt file, where you can block parameterized URLs with the “Disallow:” directive. Also, if you have a multilingual site whose URLs indicate language variations, you can use hreflang tags to mark those variations.
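Before writing Disallow rules for parameters, it helps to see which parameterized URLs collapse into the same page. The sketch below normalizes URLs with Python’s standard `urllib.parse` by dropping parameters assumed not to change the content, then groups URLs that end up identical. The `IGNORABLE` set is a hypothetical example; which parameters are safe to ignore depends entirely on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters assumed NOT to change page content on this site.
IGNORABLE = {"sort", "order", "sessionid", "utm_source", "utm_medium", "utm_campaign"}

URLS = [
    "https://www.example.com/shoes?sort=price",
    "https://www.example.com/shoes?sort=name&utm_source=newsletter",
    "https://www.example.com/shoes",
    "https://www.example.com/shoes?color=red",
]


def normalize(url: str) -> str:
    """Drop ignorable parameters, sort the rest, and discard any fragment."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORABLE
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def duplicate_groups(urls: list[str]) -> dict[str, list[str]]:
    """Return normalized URLs that more than one crawled URL collapses into."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}


if __name__ == "__main__":
    for page, variants in duplicate_groups(URLS).items():
        print(f"{len(variants)} URLs for {page}:", variants)
```

Parameters that produce large duplicate groups are candidates for robots.txt rules. Googlebot supports the `*` wildcard in robots.txt paths, so a rule such as `Disallow: /*sort=` would block every URL containing `sort=`.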

6. Keep Your Sitemap Updated

All URLs listed in your website’s sitemap should be crawlable and indexable by search engine bots. On large websites, search engine bots rely on sitemaps to discover and crawl new and updated content. Keeping your sitemap up to date helps bots use your crawl budget efficiently when they visit your site.

If you don’t want to waste crawl budget, follow these rules for sitemaps:

- URLs with noindex tags should not be in the sitemap,

- Only URLs with 200 HTTP status codes should be listed,

- URLs disallowed in the robots.txt file should not be included,

- Only URLs with a self-referencing canonical tag should be included,

- URLs in the sitemap should be listed as full, absolute URLs (including the protocol and domain),

- If your site has separate mobile and desktop URL structures, we recommend listing only one version.
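These rules are easy to enforce when the sitemap is generated from your own crawl or CMS data. The sketch below filters hypothetical page records against the checklist (200 status, no noindex, not disallowed, self-referencing canonical, absolute URL) and writes the remaining pages into a sitemap with Python’s standard `xml.etree.ElementTree`. The `Page` fields are assumptions about what your data contains; the namespace is the one defined by the sitemaps.org protocol. The mobile/desktop rule is left out because it depends on how your site pairs its URLs.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    """Hypothetical page record, e.g. one row of a crawl or CMS export."""
    url: str
    status: int
    noindex: bool
    disallowed: bool  # blocked by robots.txt
    canonical: str


def is_absolute(url: str) -> bool:
    parts = urlsplit(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def belongs_in_sitemap(page: Page) -> bool:
    """Apply the sitemap checklist to a single page."""
    return (
        is_absolute(page.url)
        and page.status == 200
        and not page.noindex
        and not page.disallowed
        and page.canonical == page.url  # self-referencing canonical
    )


def build_sitemap(pages: list[Page]) -> str:
    """Return sitemap XML listing only the pages that pass the checklist."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if belongs_in_sitemap(page):
            url_element = ET.SubElement(urlset, "url")
            ET.SubElement(url_element, "loc").text = page.url
    return ET.tostring(urlset, encoding="unicode")


PAGES = [
    Page("https://www.example.com/", 200, False, False, "https://www.example.com/"),
    Page("https://www.example.com/old", 301, False, False, "https://www.example.com/old"),
    Page("https://www.example.com/cart", 200, False, True, "https://www.example.com/cart"),
    Page("https://www.example.com/shoes?sort=price", 200, False, False, "https://www.example.com/shoes"),
    Page("/relative-page", 200, False, False, "/relative-page"),
]

if __name__ == "__main__":
    print(build_sitemap(PAGES))
```

With this sample data only the home page passes: the others fail on status code, robots.txt blocking, canonical, or relative URL respectively.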

Taking care of your sitemap gives you an advantage in terms of crawl budget: search engine bots can easily find the URLs you want crawled and save time.

Always make sure that you provide the sitemap path in the robots.txt file and that it is correct.
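A quick way to verify this is to parse the robots.txt file and inspect its `Sitemap:` lines. The sketch below uses `RobotFileParser.site_maps()`, available in Python’s standard library since Python 3.8, and flags entries that are not complete URLs, which is what the sitemaps.org protocol expects; the robots.txt content is a hypothetical example. It does not check that the sitemap URL actually responds, which would require a network request.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with one valid and one relative sitemap entry.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart

Sitemap: https://www.example.com/sitemap.xml
Sitemap: /sitemap-products.xml
"""


def sitemap_problems(robots_txt: str) -> tuple[list[str], list[str]]:
    """Return (sitemap URLs found, problems detected) for robots.txt content."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    sitemaps = parser.site_maps() or []  # site_maps() returns None if none are declared
    problems = []
    if not sitemaps:
        problems.append("no Sitemap: line found")
    for url in sitemaps:
        parts = urlsplit(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"sitemap is not an absolute URL: {url}")
    return sitemaps, problems


if __name__ == "__main__":
    found, problems = sitemap_problems(ROBOTS_TXT)
    print("Sitemaps:", found)
    for problem in problems:
        print("Problem:", problem)
```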

7. Use Hreflang Tags

If you want to control crawl budget and indexing, provide Google with information about the versions of your pages in different languages. Search engine bots use hreflang tags to crawl other versions of your pages.

Example hreflang code:

Incorrect hreflang implementation or usage on a web page can cause serious crawl budget issues.
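Two common sources of hreflang errors are missing self-references and missing return links: each language version should list itself and every other version, and if page A points to page B, page B must point back to A, otherwise Google may ignore the annotations. The sketch below, with hypothetical URLs and language codes, generates one consistent set of `<link rel="alternate" hreflang="...">` tags (optionally with `x-default`) and checks a set of collected annotations for missing return links.

```python
from html import escape
from typing import Optional

# Hypothetical language versions of one product page.
VERSIONS = {
    "en": "https://www.example.com/en/shoes",
    "tr": "https://www.example.com/tr/ayakkabi",
    "de": "https://www.example.com/de/schuhe",
}


def hreflang_tags(versions: dict[str, str], x_default: Optional[str] = None) -> str:
    """Build the same <link> block for every version, including a self-reference."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in sorted(versions.items())
    ]
    if x_default:
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(tags)


def missing_return_links(annotations: dict[str, dict[str, str]]) -> list[tuple[str, str]]:
    """`annotations` maps each page URL to the {hreflang: URL} pairs found on it.

    Returns (page, alternate) pairs where the alternate page does not link back.
    """
    problems = []
    for page, alternates in annotations.items():
        for alternate in alternates.values():
            if alternate == page:
                continue  # self-reference, nothing to check
            if page not in annotations.get(alternate, {}).values():
                problems.append((page, alternate))
    return problems


if __name__ == "__main__":
    print(hreflang_tags(VERSIONS, x_default=VERSIONS["en"]))
    collected = {
        VERSIONS["en"]: {"en": VERSIONS["en"], "tr": VERSIONS["tr"]},
        VERSIONS["tr"]: {"tr": VERSIONS["tr"]},  # forgot to link back to English
    }
    print(missing_return_links(collected))
```

The same tag block goes into the `<head>` of every language version, so each page references itself and all of its alternates.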

Conclusion

Crawl budget optimization is often seen as something only large website owners should worry about. However, paying attention to the steps listed above can significantly benefit your site.

The 7 tips for optimizing crawl budget for search engine bots that we discussed above will hopefully make your job easier and help improve your SEO performance. By optimizing crawl budget, we direct Googlebot to the important pages on our website.
