Burcu Aydoğdu

Apr 30, 2025

19 SEO Tips to Get Impressions on Google AI Overview

In today’s digital world, artificial intelligence and Google AI systems play an important role in determining the visibility of content in search results. To ensure that your website is visible on Google AI and other artificial intelligence platforms, you need to optimize your SEO strategy accordingly. How well AI systems crawl, understand, and index your content can directly affect your rankings. Making your content suitable not only for human users but also for AI systems is therefore a critical step.

Gaining visibility in Google AI Overview requires not only applying basic SEO techniques but also understanding how artificial intelligence evaluates your content. In this article, we share 19 effective SEO tips to gain more visibility on Google AI platforms.

These tips help make your content easier for AI to understand, faster to index, and more likely to rank higher. Below, you will find the key steps that lay the foundations for AI-friendly content:

- Making your content AI-compatible: what needs to be done for AI systems to quickly crawl and understand your content.

- Optimizing page structure: the importance of SEO- and AI-compatible site design.

- Practical tips for content optimization: how to optimize titles, meta descriptions, and content for Google AI.

Artificial intelligence directly crawls and analyzes your web content, shaping search results. Therefore, to gain visibility in Google AI Overview, your content must be fully accessible and optimized.

We have gathered SEO tips to gain visibility in Google AI Overview under the following main headings.

- Robots.txt and Bot Protection Settings

- HTML Structure and Accessibility Tips

- Creating Content in HTML

- Using "Agent-Responsive Design"

- Providing Programmatic & Automatic Access via Indexing API, RSS Feeds

- Creating and Submitting a /llms.txt File

- NLP Optimization

- Regularly Sharing Content & Updating to Gain Authority in the Target Industry

- Visibility Check on AI Platforms

- Using Structured Data

- Updating Existing Content

- Using OpenGraph Tags

In addition, you can check out our content What is Google AI Overview for more detailed information.

1. Using the "Allow" Command for AI Platforms in Robots.txt

For the content on your website to be crawled properly by AI platforms, you need to add the "Allow" command to your robots.txt file for the user-agents of the relevant AI platforms. This allows bots and AI tools to access your site, enabling your content to be displayed on these platforms.

For example, sites like https://darkvisitors.com/agents list the user-agent information of different AI platforms. By using these sources, you can identify the relevant user-agents and grant them special permissions in your robots.txt file. This simple setting helps search engines and other AI systems effectively crawl your website.

You can find an example of the command list for this process below.

User-agent: OAI-SearchBot

User-agent: ChatGPT-User

User-agent: PerplexityBot

User-agent: FirecrawlAgent

User-agent: AndiBot

User-agent: ExaBot

User-agent: PhindBot

User-agent: YouBot

Allow: /

NOTE: Crawlers are allowed by default, so these bots can still access your site without an explicit Allow rule. The rule mainly matters when a broader Disallow rule would otherwise block them.
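To confirm that a robots.txt file really lets these bots in, you can test it before publishing. The following is a minimal sketch using Python's standard urllib.robotparser module; the helper name allowed_agents, the SAMPLE rules, and the example.com URL are assumptions made for illustration, and the agent list simply repeats the one above.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens copied from the example list above.
AI_AGENTS = [
    "OAI-SearchBot", "ChatGPT-User", "PerplexityBot", "FirecrawlAgent",
    "AndiBot", "ExaBot", "PhindBot", "YouBot",
]

# Hypothetical robots.txt: two AI bots are allowed, everything else is blocked.
SAMPLE = """\
User-agent: OAI-SearchBot
User-agent: PerplexityBot
Allow: /

User-agent: *
Disallow: /
"""


def allowed_agents(robots_txt: str, url: str) -> dict[str, bool]:
    """Return, for each AI user-agent, whether the rules permit fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in AI_AGENTS}


if __name__ == "__main__":
    report = allowed_agents(SAMPLE, "https://example.com/blog/post")
    for agent, ok in report.items():
        print(f"{agent}: {'allowed' if ok else 'blocked'}")
```

With the sample rules, only the two bots named in the first group are allowed; the others fall through to the catch-all Disallow rule, which is exactly the situation where an explicit Allow makes a difference.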

2. No Bot Protection and Access Barriers

It is important that aggressive bot protection features in services like Cloudflare or AWS WAF are not active, so that AI tools and bots can access your website without problems. Although such security measures are designed to block bots, they may prevent AI platforms from accessing the right data.

You must ensure that the bot protection systems integrated into your site do not place unnecessary restrictions on AI tools. These adjustments will make it easier for AI to interact with your content and help improve your rankings.

In Cloudflare or AWS WAF configurations, it is important to identify AI user-agents and allow these bots to access your site without encountering security barriers. For example:

- In Cloudflare, under bot management, special permissions can be defined for AI platforms.

- In AWS WAF, custom rules can be created to allow only specific user-agents.

3. HTML Structure and Accessibility Tips

The correct use of HTML tags is a critical step in helping AI systems better understand your content. Since HTML tags indicate the hierarchy and meaning of the content, misused or missing tags can mislead AI. For example:

- Heading Tags (h1, h2, h3): Using headings correctly helps AI understand the main topics and subtopics.

- Alt Attributes: Adding descriptive alt text to images enables AI to understand visual content as well.

- Semantic HTML: Using elements such as <article>, <section>, <nav> helps both search engines and AI platforms interpret the structure of the page more accurately.

Accessibility improvements also make your site both more user-friendly and more AI-compatible. For example, adding ARIA labels ensures that both AI and assistive technologies can correctly interpret your content.
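The points above can be checked automatically. Here is a rough sketch of a page audit built on Python's standard html.parser module; the class and function names are hypothetical, and the rules it applies (exactly one h1, no skipped heading levels, alt text on every image) are common conventions assumed for this example rather than requirements stated by Google.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
SEMANTIC = {"article", "section", "nav", "main", "header", "footer"}


class StructureAudit(HTMLParser):
    """Collects heading levels, images without alt text, and semantic tags."""

    def __init__(self):
        super().__init__()
        self.headings = []            # heading levels in document order
        self.images_missing_alt = 0
        self.semantic_tags = set()

    def handle_starttag(self, tag, attrs):
        if tag in HEADINGS:
            self.headings.append(int(tag[1]))
        elif tag == "img":
            # Note: an empty alt is legitimate for purely decorative images;
            # this sketch flags it anyway so a human can review it.
            alt = dict(attrs).get("alt")
            if not alt or not alt.strip():
                self.images_missing_alt += 1
        elif tag in SEMANTIC:
            self.semantic_tags.add(tag)


def audit(html: str) -> list[str]:
    """Return a list of human-readable structure issues found in html."""
    parser = StructureAudit()
    parser.feed(html)
    parser.close()
    issues = []
    h1_count = parser.headings.count(1)
    if h1_count != 1:
        issues.append(f"expected one h1, found {h1_count}")
    for prev, cur in zip(parser.headings, parser.headings[1:]):
        if cur > prev + 1:
            issues.append(f"heading jumps from h{prev} to h{cur}")
    if parser.images_missing_alt:
        issues.append(f"{parser.images_missing_alt} image(s) without alt text")
    if not parser.semantic_tags:
        issues.append("no semantic elements found")
    return issues
```

Calling audit() on a page's HTML source returns an empty list when none of these checks fail.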

4. Creating Content in HTML Instead of JavaScript

It is very important to create your website’s content directly in HTML instead of JavaScript, so that AI platforms can crawl and index it easily. When content is loaded via JavaScript, it can often be invisible to AI bots or may not be processed correctly.

Since HTML content is directly crawlable, it allows AI systems to understand your content more easily and present it more accurately. Googlebot can render JavaScript, but many other crawlers, including some AI bots, may only read the initial HTML, so static HTML is read more reliably than dynamically loaded content.

Instead of producing critical content (text, product information, descriptions) with JavaScript, making it visible directly in HTML will help your rankings.
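One rough way to verify this is to inspect the raw HTML response, before any JavaScript runs, and check that your critical phrases are already there. The sketch below assumes you have the page source as a string; the names VisibleText and missing_from_static_html are hypothetical, and ignoring script and style content is only an approximation of what a non-rendering crawler reads.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text that sits outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_from_static_html(html: str, phrases: list[str]) -> list[str]:
    """Return the phrases that do not appear in the static (unrendered) text."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [p for p in phrases if p.lower() not in text]
```

Any phrase this function returns is probably injected by JavaScript and is a candidate for moving into the server-rendered HTML.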

5. Using “Agent-Responsive Design”

Agent-Responsive Design means optimizing not only for human users but also for bots and AI systems. Thanks to this design approach, AI tools and bots can access your site more easily, and your content is interpreted more accurately.

Just as responsive design adapts to different screen sizes, Agent-Responsive Design provides compatibility for different agents (search engines, AI bots, crawlers). In this way:

- AI bots can access simplified and structured versions of your content.

- Critical content (title, description, visual information) can be prioritized for AI agents.

- Page performance is optimized for both users and bots.

6. Providing Programmatic Access with Indexing API and RSS Feeds

To ensure that your content is crawled quickly and systematically by AI tools, you need to provide programmatic access. The most common methods for this are:

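- Indexing API: An API provided by Google that allows you to notify Google of new or updated content on your website instantly. Note that Google's documentation currently limits it to pages with JobPosting or BroadcastEvent structured data; for other content types, an up-to-date sitemap remains the supported way to signal changes.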

- RSS Feeds: By offering your content in RSS format, you allow AI tools and bots to automatically receive updates. This way, your new content can be indexed and displayed more quickly.

Programmatic access ensures that your content is indexed correctly and helps AI platforms to scan your website more effectively.
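As an illustration of the RSS option, here is a minimal sketch that builds an RSS 2.0 feed with Python's standard library. The function name build_rss and the shape of the items dictionaries are assumptions for this example; a real site would usually let its CMS generate the feed.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_rss(title: str, link: str, description: str, items: list[dict]) -> str:
    """Build an RSS 2.0 document; each item needs title, link, and published."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item in items:
        node = ET.SubElement(channel, "item")
        ET.SubElement(node, "title").text = item["title"]
        ET.SubElement(node, "link").text = item["link"]
        # The post URL doubles as the unique identifier in this sketch.
        ET.SubElement(node, "guid").text = item["link"]
        # RSS expects RFC 822 style dates, which format_datetime produces.
        ET.SubElement(node, "pubDate").text = format_datetime(item["published"])
    body = ET.tostring(rss, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    posts = [{
        "title": "Hello",
        "link": "https://example.com/hello",
        "published": datetime(2025, 4, 30, tzinfo=timezone.utc),
    }]
    print(build_rss("Example Blog", "https://example.com/", "Latest posts", posts))
```

Serving the resulting XML at a stable URL and linking to it from your pages gives bots a single place to poll for new content.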

7. Creating and Submitting an /llms.txt File

The /llms.txt file is a proposed convention for helping AI systems find and use your most important content. Unlike robots.txt, it does not grant or deny access. It is a Markdown file that summarizes your site and links to the pages you want language models to read. Access rules such as Allow and Disallow belong in robots.txt.

By including an /llms.txt file in the root directory of your website, you can point AI systems to your key content. Support for the file varies across AI platforms, so treat it as a complement to robots.txt and sitemaps rather than a replacement.

Example structure:

/llms.txt

# Example Site

> Short summary of what the site offers.

## Content

- [Guides](https://example.com/content/guides.md): Step-by-step articles

## Optional

- [Archive](https://example.com/content/archive.md)

With this method, you can direct AI platforms to the content you most want them to use.

8. NLP Optimization

To make your content more AI-friendly, you should optimize it for Natural Language Processing (NLP). NLP helps AI systems understand your content more clearly and semantically.

Things to consider for NLP optimization include:

- Clear and understandable sentences: Using short and direct sentences helps AI understand your content correctly.

- Using synonyms and related terms: Helps AI recognize semantic connections.

- Structured writing: Ensuring logical order of paragraphs and topics makes it easier for AI to analyze.

NLP optimization both makes your content more understandable for users and allows AI systems to interpret it correctly.
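The "short and direct sentences" advice is easy to spot-check. The sketch below flags sentences above a word limit; the function name long_sentences and the 25-word threshold are assumptions chosen for illustration, not a published guideline, and the sentence splitting is deliberately naive.

```python
import re


def long_sentences(text: str, max_words: int = 25) -> list[str]:
    """Return sentences that contain more than max_words words."""
    # Naive split on whitespace that follows ., ! or ?; abbreviations such
    # as "e.g." will be split too, which is acceptable for a quick check.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if len(s.split()) > max_words]
```

Running it over a draft gives you a short list of sentences worth splitting or simplifying.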

9. Regular Content Sharing & Updating to Gain Authority in the Target Industry

Regularly publishing and updating your content is one of the most effective ways to gain authority in your target industry. AI platforms tend to favor up-to-date content because freshness is a signal of reliability and relevance.

- Regular updates: Ensuring that existing content is up-to-date.

- Sharing new content: Continuously adding new blog posts, guides, and news articles.

- Industry-specific content: Focusing on current trends, problems, and solutions in your sector.

By doing this, you both strengthen your authority in your industry and increase the chance for your content to be prioritized by AI systems.

10. Visibility Check on AI Platforms

To check how well your content is indexed and displayed by AI platforms, you need to regularly conduct visibility checks. Tools like Google Search Console help you monitor how your content is crawled and ranked.

Additionally, you can check visibility across other AI platforms (ChatGPT, Perplexity, etc.) using different SEO tools. These checks give you the chance to see how your site is perceived by AI and allow you to make improvements when necessary.

11. Using Structured Data

Using structured data allows AI systems to better understand your content. By using schema.org markup, you can provide more detailed information to search engines and AI platforms.

For example:

- Product information

- Reviews and ratings

- FAQs

- Recipes

Structured data makes your content more understandable to AI and allows it to be displayed in enriched formats (rich snippets) in search results.
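To make this concrete, here is a minimal sketch that generates schema.org FAQPage markup as a JSON-LD script tag. The function name faq_jsonld and the sample question are assumptions for illustration; the FAQPage, Question, and Answer types come from schema.org.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a JSON-LD <script> block for (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" so that answer text cannot close the script element early.
    payload = json.dumps(data, ensure_ascii=False, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(faq_jsonld([("What is structured data?",
                       "Markup that describes page content to machines.")]))
```

The returned string can be placed in the page's head or body; it is worth validating the output with a structured data testing tool before deploying it.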

12. Updating Existing Content

Keeping your old content up-to-date and optimized is an important factor in maintaining your site’s overall authority. Content that becomes outdated over time can negatively affect your rankings.

- Updating statistics and data: Ensuring that the latest information is included.

- Adding new subtopics: Expanding old content with new developments.

- Correcting broken links: Fixing or updating links that no longer work.

Updated content is better evaluated by both search engines and AI platforms, which helps improve your visibility.

13. Using OpenGraph Tags

OpenGraph tags ensure that your content is displayed correctly when shared on social media and other platforms. These tags also help AI platforms understand your content better.

Key OpenGraph tags include:

- og:title (Content title)

- og:description (Content description)

- og:image (Featured image)

- og:url (Page URL)

By using these tags correctly, you both improve your social media visibility and make your content easier to interpret by AI systems.
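For example, the four tags listed above could be generated from page data with a small helper. This is a sketch only; the function name og_tags and the sample values are hypothetical, and your CMS or template engine may already provide an equivalent.

```python
from html import escape


def og_tags(title: str, description: str, image: str, url: str) -> str:
    """Return the four basic OpenGraph meta tags as HTML, one per line."""
    fields = {
        "og:title": title,
        "og:description": description,
        "og:image": image,
        "og:url": url,
    }
    # escape() keeps quotes and angle brackets in the values from
    # breaking out of the content attribute.
    return "\n".join(
        f'<meta property="{prop}" content="{escape(value, quote=True)}">'
        for prop, value in fields.items()
    )


if __name__ == "__main__":
    print(og_tags(
        "19 SEO Tips",
        "How to gain visibility in AI search results.",
        "https://example.com/cover.png",
        "https://example.com/seo-tips",
    ))
```

The output belongs inside the page's head element.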

Conclusion

It is becoming increasingly important to make your website compatible not only with human users but also with AI systems. To gain visibility in Google AI Overview and similar platforms, you must apply the right SEO strategies.

In this article, we shared 19 effective SEO tips for becoming more visible on Google AI platforms. These steps will make your content more AI-friendly, help it get indexed more quickly, and increase your chances of achieving higher rankings.

For detailed information, you can also check out our article What is Google SEO.

More resources

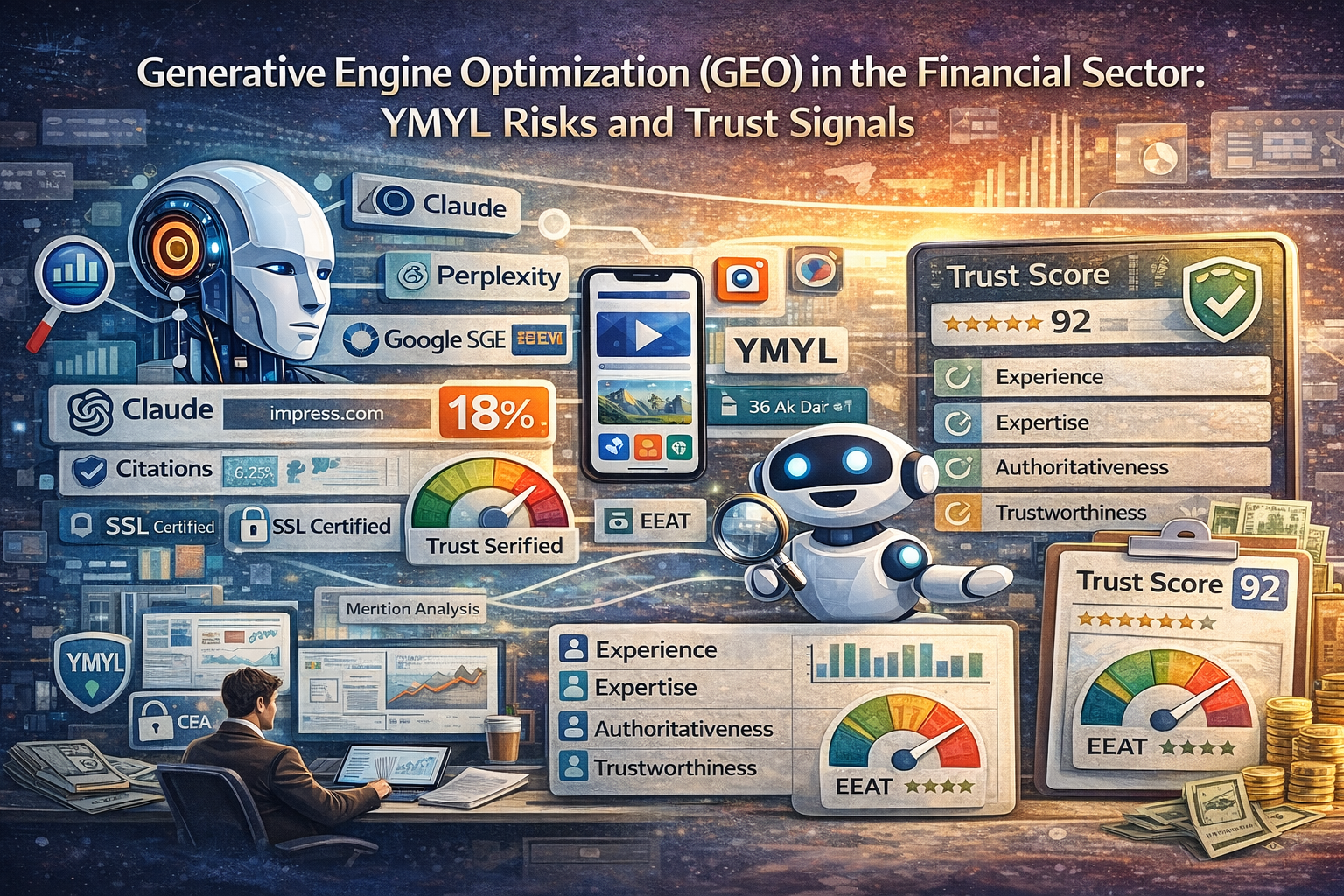

Generative Engine Optimization (GEO) in the Financial Sector: YMYL Risks and Trust Signals

With the integration of artificial intelligence technologies into the search engine ecosystem, the t...

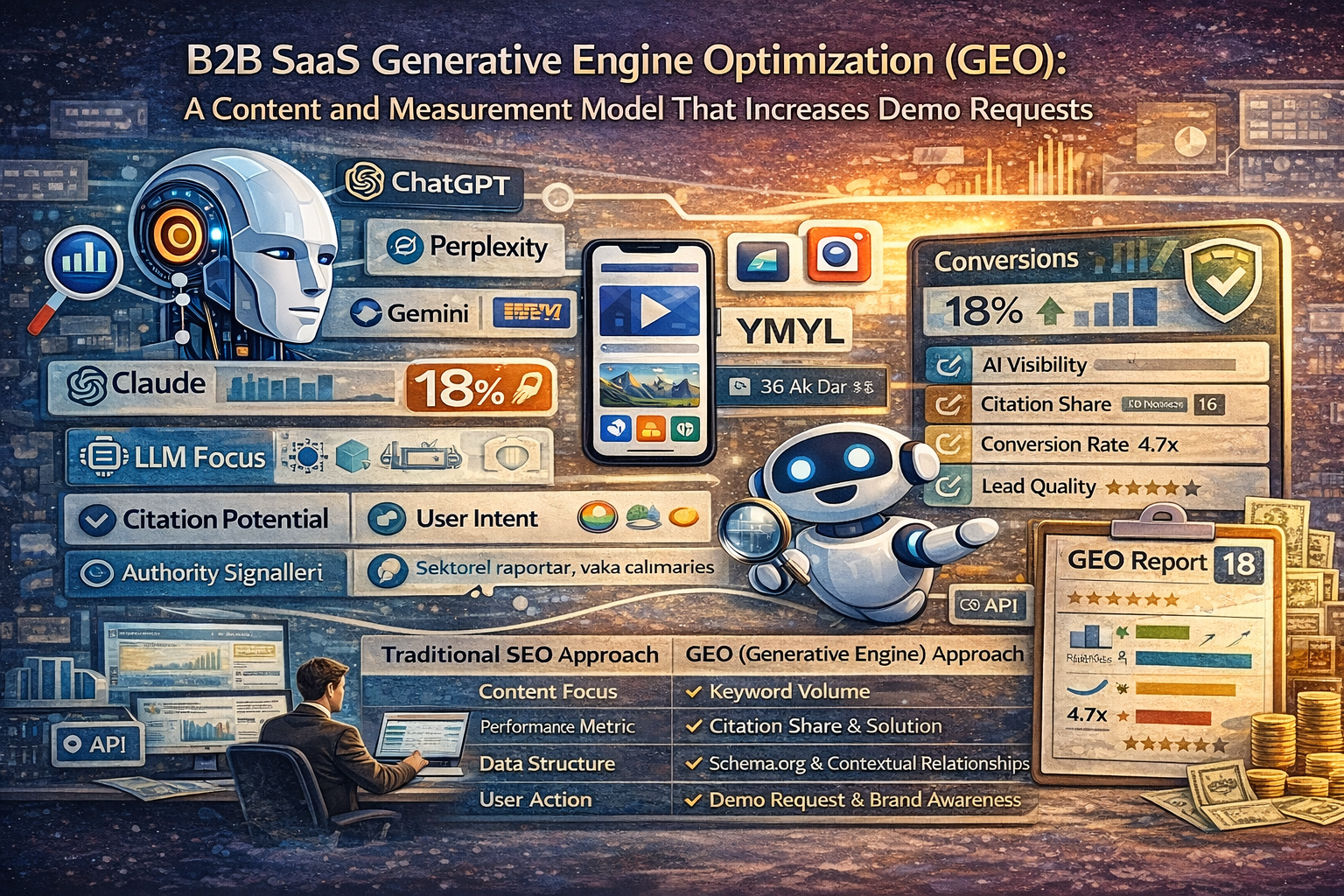

B2B SaaS Generative Engine Optimization (GEO): A Content and Measurement Model That Increases Demo Requests

The digital marketing world is undergoing a major evolution from traditional search engine optimizat...

What Is a Source Term Vector?

A Source Term Vector is a conceptual expertise profile that shows which topics a website is associat...