Analytica House

Sep 3, 2022

What Is the Google Index, Why Does It Matter, and How Do You Optimize It?

Search engines follow a three-step process to surface the most relevant results for users' queries. In order, these steps are Crawling, Indexing, and Ranking and Serving.

- Crawling: The process in which search engine bots discover websites while gathering the information available on the internet. During crawling, bots follow the links embedded in web pages to reach new sites. By following these links, bots visit and crawl billions of sites online.

- Indexing: The process in which bots save the websites they have visited into a data storage system. Indexing is the second step, coming right after crawling.

- Ranking and Serving: The final output, the Search Engine Results Page (SERP), which lists the websites that best match a user's search. Results are ordered from most relevant to least relevant.
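The crawling step is essentially a graph traversal: start from known pages, follow the links they contain, and queue any page not seen before. The sketch below shows that idea as a breadth-first search over a small, hypothetical in-memory link graph (`WEB`); `crawl` is an illustrative helper and makes no claim about how Google's crawler actually schedules or prioritizes pages.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links embedded in that page.
WEB = {
    "https://a.example/": ["https://a.example/about", "https://b.example/"],
    "https://a.example/about": ["https://a.example/"],
    "https://b.example/": ["https://c.example/"],
    "https://c.example/": [],
}


def crawl(seeds, web, max_pages=100):
    """Breadth-first discovery: visit each URL once and queue the links it contains."""
    seen = set(seeds)
    queue = deque(seeds)
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)  # a real crawler would fetch and store the page here
        for link in web.get(url, []):
            if link not in seen:  # skip pages already discovered
                seen.add(link)
                queue.append(link)
    return visited
```

Starting from `https://a.example/`, the crawl reaches `https://c.example/` only through the link on `https://b.example/`, which mirrors how links let bots discover sites they did not know about.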

What Is Indexing and Why Is It Important?

Indexing is the whole process in which search engine bots process the data on the websites they crawl and store it in a storage system. Bots try to analyze and understand the content of every website they crawl. During this analysis, elements such as keywords, images, content, and the overall structure of the site are classified. The information obtained is added to the index and stored in the search engine's database, ready to be served to users.
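One simple way to picture "adding the analyzed information to the index" is an inverted index, which maps each term to the pages that contain it, so matching pages can be looked up without rescanning every page. The sketch below is a toy under that assumption: `build_index` and `search` are hypothetical helpers, and ranking by raw term counts is a stand-in for illustration only, not how Google scores pages.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def build_index(pages):
    """Toy inverted index: term -> {url: number of times the term appears on that page}."""
    index = defaultdict(dict)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term][url] = index[term].get(url, 0) + 1
    return index


def search(index, query):
    """Return matching URLs, highest summed term count first (ties broken by URL)."""
    scores = defaultdict(int)
    for term in tokenize(query):
        for url, count in index.get(term, {}).items():
            scores[url] += count
    return sorted(scores, key=lambda url: (-scores[url], url))
```

A page that never made it into `build_index` can never be returned by `search`, however relevant it is, which is exactly why unindexed pages cannot earn organic traffic.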

Why Is Website Indexing Important?

Pages that are not indexed cannot appear in the SERP, because they are not in the search engine's database. As a result, they cannot receive organic traffic. That is why, during SEO work, indexing is critical for every page that is expected to earn organic traffic.

How Do You Check Which Pages Are Indexed on Google?

A process known as a Google index check shows how many pages of a given website are indexed and how many are not. There are two ways to check the number of indexed pages and which pages are indexed.

Checking the Index Using Google Search

If we type "site:example.com" (with your own domain in place of example.com) into the search bar, we can see the pages Google has indexed for that domain, along with an approximate count. If the SERP returns no results, no pages on that domain have been indexed.

Checking the Index Using Google Search Console

After logging in to the website's Google Search Console account, we can click the "Coverage" report located directly under the "Index" section. There, the number under "Valid" shows how many pages are indexed, and the "Details" section gives more information about those pages. If "Valid" shows zero, no pages are indexed. The number of errors found on pages is listed under "Errors", and more information about each error is available in the "Details" section.

What Is a Google Indexing Request and How Do You Submit One?

An indexing request, also known as adding a site to Google, means notifying Google of your website's pages and asking for them to be indexed. Submitting these pages does not mean they will be indexed immediately or appear near the top of the SERP right away. Indexing requests only inform Google about new or updated pages on the website; how and when those pages are indexed remains at the discretion of Google's bots.

Submitting an Indexing Request Using Google Search Console

To submit an indexing request, first log in to the website's Google Search Console account and enter the URL of the chosen page in the "URL Inspection" tool. After a few seconds, Search Console retrieves Google's index data and shows the page's current index status. Clicking "REQUEST INDEXING" on the right side of the same screen sends an indexing request for that URL.

"Removing Pages from the Google Index": What Is It and How Is It Done?

Also known as deleting pages from the Google index, this process means notifying Google of specific pages and asking for them to be removed from the index. Flagging specific pages leads bots to prioritize them; however, when and how the pages are removed from the index is still at the discretion of Google's bots.

Removing Pages from the Index Using Google Search Console

First, log in to the website's Google Search Console account and click "Removals" under the "Index" section. Then create a removal request by clicking the "NEW REQUEST" button on the right side of the page.

How and Why Does a Page's Index Status Change?

You may not want every page of a website to be indexed. There can be several reasons to check or change the index status of pages, including:

- Pages that are not suitable for indexing can be excluded to make better use of the crawl budget (e.g., static pages).

- Pages that are still being tested and do not yet offer original, high-quality content can be kept out of the index to protect the website's authority and keep users from reaching them.

In such cases, the index status of pages can be controlled by giving instructions to search engine bots.

What Are Robots Meta Directives and How Are They Used?

Robots meta directives are pieces of code that instruct bots on the index status of a website's pages. There are two types: robots meta tags and X-Robots-Tag headers.

Robots Meta Tags

Robots meta tags are snippets placed in the <head> section of a page's HTML that can give instructions to some or all crawlers. The most common robots meta tag values are index/noindex, follow/nofollow, and noarchive.

The index/noindex values tell search engine bots whether to index a page. index allows the page to be indexed and shown in the SERP, while noindex instructs bots not to index the page and not to show it in the SERP.

Unless noindex is specified, search engines treat every page as indexable by default, so specifying index is unnecessary.
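For reference, a robots meta tag looks like `<meta name="robots" content="noindex, follow">`. The sketch below uses Python's standard `html.parser` to collect the rules from tags named `robots` or named after one specific crawler (assumed here to be `googlebot`), and treats a page as indexable unless `noindex` or `none` appears (Google documents `none` as equivalent to `noindex, nofollow`). `RobotsMetaParser` and `is_indexable` are illustrative names, and the check deliberately ignores HTTP headers and robots.txt.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects rules from <meta name="robots"> and <meta name="<crawler>"> tags."""

    def __init__(self, user_agent="googlebot"):
        super().__init__()
        self.user_agent = user_agent.lower()
        self.rules = set()

    def handle_starttag(self, tag, attrs):
        # html.parser already lowercases tag and attribute names; values may be None.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", self.user_agent):
            for rule in attributes.get("content", "").split(","):
                rule = rule.strip().lower()
                if rule:
                    self.rules.add(rule)


def is_indexable(html, user_agent="googlebot"):
    """Indexable by default; only noindex or none (noindex + nofollow) opts out."""
    parser = RobotsMetaParser(user_agent)
    parser.feed(html)
    return not ({"noindex", "none"} & parser.rules)
```

A page with no robots meta tag at all comes back as indexable, which matches the default behavior described above.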

X-Robots-Tag Headers

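The X-Robots-Tag is sent as part of a page's HTTP response headers, not inside the page's HTML or scripts. It carries the same instructions as robots meta tags and simply offers an alternative way to deliver them; it also works for non-HTML files such as PDFs, which cannot contain a meta tag.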
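An X-Robots-Tag response header might read `X-Robots-Tag: noindex` or, aimed at a single crawler, `X-Robots-Tag: googlebot: nofollow`. The sketch below is a simplified parser for such header values. `x_robots_rules` is a hypothetical helper, the set of rules that take a value (`VALUED_RULES`) is an assumption based on Google's robots meta documentation, and not every edge case of the real syntax is covered.

```python
# Rules that carry a value after a colon, e.g. "max-snippet: 20" (assumed list).
VALUED_RULES = {"max-snippet", "max-image-preview", "max-video-preview", "unavailable_after"}


def x_robots_rules(header_values, user_agent="googlebot"):
    """Return the rule names from X-Robots-Tag header values that apply to user_agent."""
    rules = set()
    for value in header_values:
        value = value.strip()
        prefix, sep, rest = value.partition(":")
        prefix = prefix.strip().lower()
        # "googlebot: nofollow" targets one crawler; "max-snippet: 20" does not.
        if sep and prefix not in VALUED_RULES and " " not in prefix and "," not in prefix:
            if prefix != user_agent.lower():
                continue  # header addressed to a different crawler
            value = rest
        for part in value.split(","):
            name = part.split(":", 1)[0].strip().lower()
            # Keep only rule-like names; skips fragments such as dates split on commas.
            if name and name.replace("-", "").replace("_", "").isalpha():
                rules.add(name)
    return rules
```

The function takes a list because a response may repeat the X-Robots-Tag header, and the rules from every applicable line are combined.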

Why Do Search Engines Remove Indexed Pages?

Pages that bots have indexed can also be removed from the index without any action by the site owner (such as adding a "noindex" meta tag). Reasons search engines may remove pages from the index include:

- The pages return 4XX client errors or 5XX server errors

- The pages violate the search engine's terms of service

- The pages require access permission and are not publicly accessible
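The first and third reasons can be spotted in a routine check of your own site by looking at the HTTP status codes its pages return. The sketch below maps status codes to those reasons; `removal_risk` and `audit` are hypothetical helpers, treating 401 and 403 as "requires access permission" is an assumption, and terms-of-service violations cannot be detected this way.

```python
from http import HTTPStatus


def removal_risk(status):
    """Map an HTTP status code to the matching removal reason, or None if none applies."""
    if status in (HTTPStatus.UNAUTHORIZED, HTTPStatus.FORBIDDEN):
        return "requires access permission"  # 401/403: not publicly accessible
    if 400 <= status < 500:
        return "client error (4XX)"
    if 500 <= status < 600:
        return "server error (5XX)"
    return None


def audit(responses):
    """Given {url: status_code}, return {url: reason} for URLs at risk of removal."""
    at_risk = {}
    for url, status in responses.items():
        reason = removal_risk(status)
        if reason is not None:
            at_risk[url] = reason
    return at_risk
```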

What Are Canonical Tags and How Are They Used?

Canonical tags tell bots which version of a page is preferred. If a page contains a canonical tag, bots treat the URL in that tag as the preferred, authoritative version of the page. If a page has no canonical tag, Google chooses the canonical version itself from any duplicates it finds, and a page with no duplicates is simply indexed as the original.

Canonical tags prevent original pages from losing their value to alternate versions. Note that canonical tags do not directly control a page's index status; to do that, use the index/noindex meta tags.
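A canonical tag is a `<link rel="canonical" href="...">` element in the page's `<head>`. The sketch below, using only the standard library, extracts that URL, resolves it against the page address (relative `href` values are allowed), and reports whether the page points to itself or to another version. `CanonicalParser` and `canonical_status` are illustrative names, and comparing URLs by exact string match is a simplifying assumption.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        rels = (attributes.get("rel") or "").lower().split()
        if "canonical" in rels and attributes.get("href"):
            self.canonical = attributes["href"]


def canonical_status(page_url, html):
    """Return (status, resolved_canonical_url) for a page's HTML."""
    parser = CanonicalParser()
    parser.feed(html)
    if parser.canonical is None:
        return "no canonical", None
    target = urljoin(page_url, parser.canonical)  # resolve relative hrefs
    if target == page_url:
        return "self-referencing", target
    return "points elsewhere", target
```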

When Should Canonical Tags Be Used?

- When a page includes filtering, sorting, or similar features, canonical tags are used to point the parameterized URLs to their parameter-free versions.

- Canonical tags should be added to prevent the duplicate-content problems that similar versions of a page can create.

- Canonical tags should be used on every original page, pointing to the page itself, to tell bots which pages on the website are the originals.
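For the first case, the canonical URL of a filtered or sorted listing is usually the same URL with the presentation-only parameters removed. The sketch below assumes a hypothetical set of such parameters (`PRESENTATION_PARAMS`); which parameters actually change the content (a search query, for example) and must be kept depends on the site.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical filter/sort parameters that only change how a listing is displayed.
PRESENTATION_PARAMS = {"sort", "order", "color", "size"}


def canonical_url(url, drop=PRESENTATION_PARAMS):
    """Remove presentation-only query parameters (and any fragment) from url."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key.lower() not in drop]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(url):
    """Build the <link rel="canonical"> element for a page's <head>."""
    return f'<link rel="canonical" href="{html.escape(canonical_url(url), quote=True)}">'
```

For `https://example.com/shoes?color=red&sort=price`, every parameterized variant of the listing then declares `https://example.com/shoes` as its canonical, while a kept parameter such as `q` survives. `html.escape` turns `&` into `&amp;`, which is the correct form inside an HTML attribute.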

How Do You Optimize Indexing?

Optimizing for Google indexing helps make better use of the crawl budget, which is why it is so important for SEO work. During indexing optimization, make sure the following points are covered:

- Using a correct robots.txt file (e.g., adding a "disallow" rule for pages meant to earn organic traffic is a mistake, because blocked pages cannot be crawled, so their content cannot be indexed.)

- Having a correct, well-organized internal linking architecture for the website

- Running a backlink analysis

- Using a sitemap

- Using robots meta tags and canonical tags correctly

- Making the website mobile-friendly

- Providing users, and indirectly bots, with up-to-date, high-quality, original content. You can learn how to prepare SEO-friendly content in our related blog post.
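The robots.txt and sitemap items can be checked together: pages meant to earn organic traffic should not be disallowed, and a sitemap should list only URLs that crawlers may fetch. The sketch below uses Python's `urllib.robotparser` and `xml.etree.ElementTree`; `audit_and_build_sitemap` is a hypothetical helper. Note that `urllib.robotparser` uses simple prefix matching where the first matching rule wins, which differs from Google's longest-match handling and wildcard support, so treat its answers as an approximation.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def audit_and_build_sitemap(robots_txt, target_urls, user_agent="Googlebot"):
    """Return (blocked, sitemap_xml).

    blocked: target URLs that robots.txt disallows for user_agent (these need fixing).
    sitemap_xml: a <urlset> listing only the URLs the crawler may fetch.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [url for url in target_urls if not parser.can_fetch(user_agent, url)]
    blocked_set = set(blocked)

    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in target_urls:
        if url not in blocked_set:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return blocked, ET.tostring(urlset, encoding="unicode")
```

With a robots.txt containing `Disallow: /blog/`, a target such as `https://example.com/blog/seo-guide` is reported as blocked (the mistake described in the first item) and left out of the sitemap until the rule is fixed.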

To learn more about SEO and digital marketing, you can visit the AnalyticaHouse blog or contact us directly.


More resources

Finans Sektöründe Generative Engine Optimizasyonu (GEO): YMYL Riskleri ve Güven Sinyalleri

Yapay zeka teknolojilerinin arama motoru ekosistemine entegre olmasıyla birlikte, geleneksel SEO (Ar...

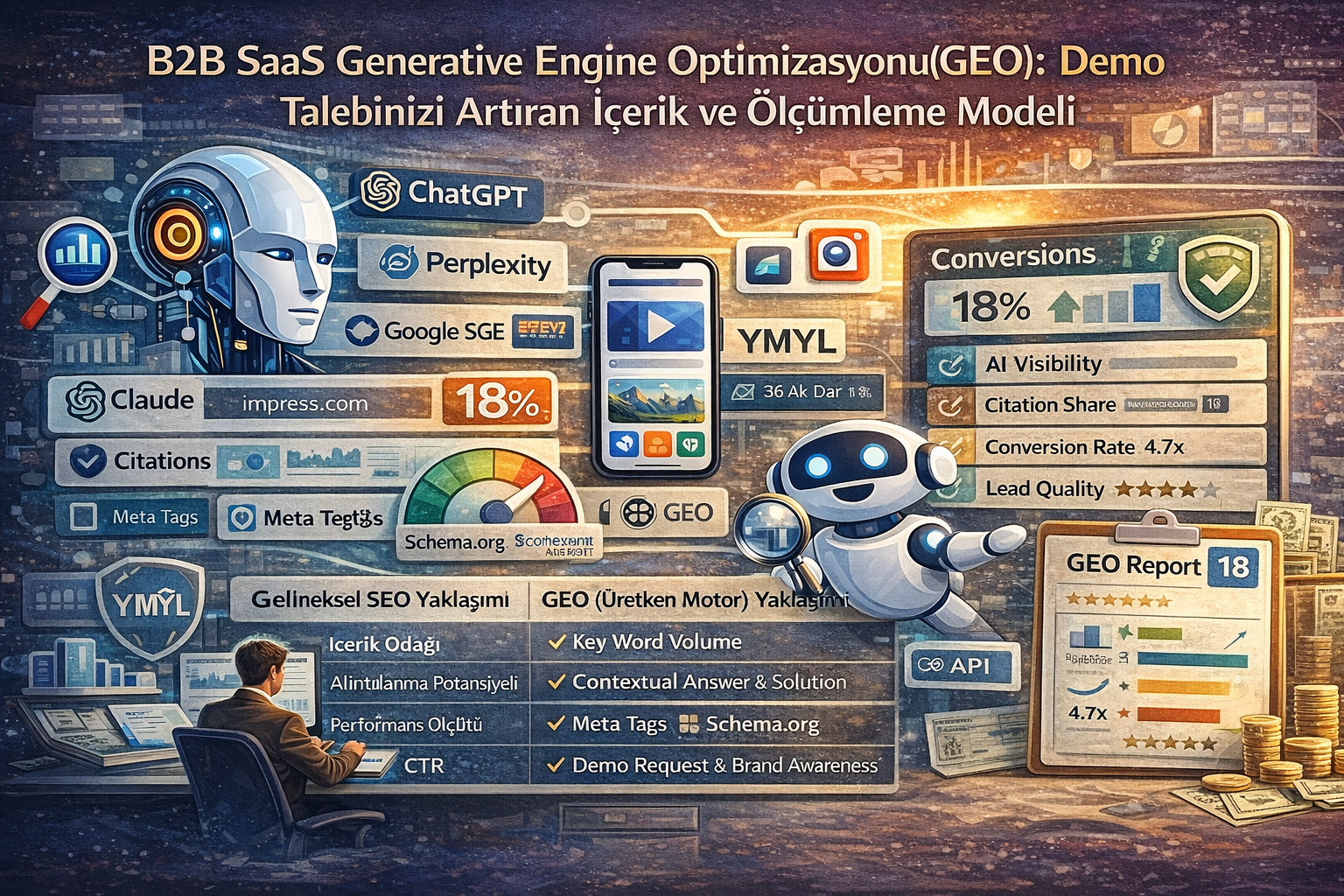

B2B SaaS Generative Engine Optimizasyonu (GEO): Demo Talebinizi Artıran İçerik ve Ölçümleme Modeli

Dijital pazarlama dünyası, geleneksel arama motoru optimizasyonundan (SEO), yapay zeka odaklı arama...

Source Term Vector Nedir?

Source Term Vector, bir web sitesinin zaman içinde ürettiği içerikler, bu içeriklerin eşleştiği sorg...