Burcu Aydoğdu

Jun 12, 2025

Proactive SEO: Reporting and Analysis with Screaming Frog Scheduling

Manually conducted technical SEO audits, especially for large-scale websites, often turn into repetitive and time-consuming processes that can take hours or even days. Spending this valuable time on data gathering instead of analysis and strategy development decreases overall efficiency. At this point, automation becomes one of modern SEO’s strongest allies, enabling a shift toward proactive site health management: recurring crawls surface issues as they appear, which makes it easier to decide which pages to prioritize.

This is where Screaming Frog's scheduling feature steps in as a true lifesaver, allowing you to replace exhausting manual audits with an automated system that checks your website’s technical health on a fixed schedule.

What is Screaming Frog Scheduling and Why is it a Game-Changer?

Screaming Frog Scheduling is a feature available in the paid version of the SEO Spider tool that allows you to automatically run pre-configured crawl settings at your specified times and frequencies. In short, even if you're not at your computer, Screaming Frog can crawl your website on your behalf.

The core purpose of this feature is to automate repetitive auditing tasks, eliminate human error, standardize processes, and give you back your most valuable resource—time.

- First, it eliminates the weekly hours spent on manual crawls, giving you extra time for strategy development and data analysis.

- Second, since each crawl is run with the same saved configuration file, the results are directly comparable from one crawl to the next. This makes it easy to track changes over time (such as increasing 404 errors or broken redirect chains).

- Finally, and most critically, it provides early detection: problems show up at the next scheduled crawl instead of the next manual audit, allowing you to prevent a potential organic traffic disaster before it even begins.

Step-by-Step Guide to Setting Up Scheduled Crawls

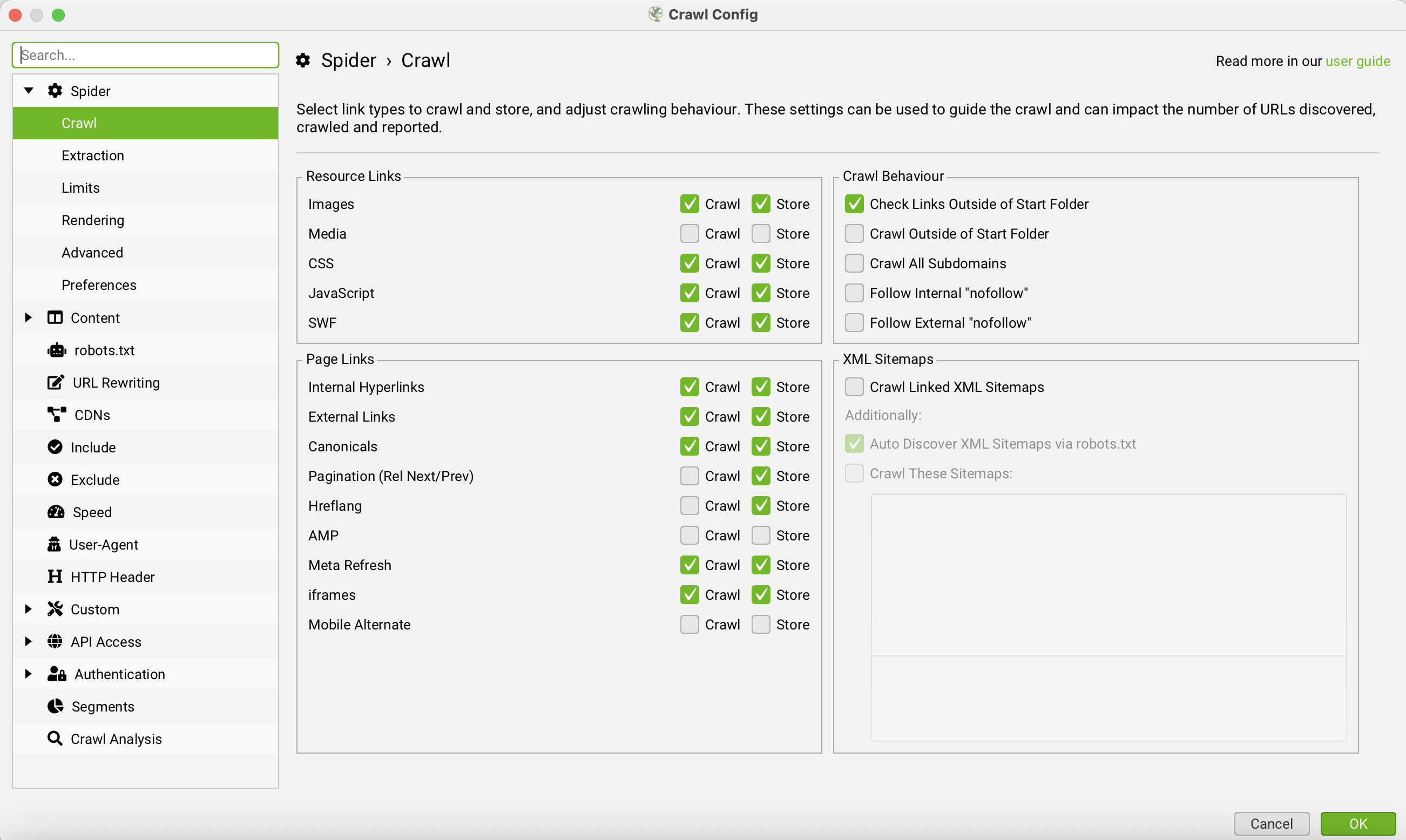

Step 1: Save Your Crawl Configuration Template:

Start by saving the settings of the crawl you want to automate. Configure crawl settings such as the crawl mode, tags and links to be included, crawl depth, and elements to exclude.

Step 2: Integrate Google Analytics 4 and Google Search Console APIs:

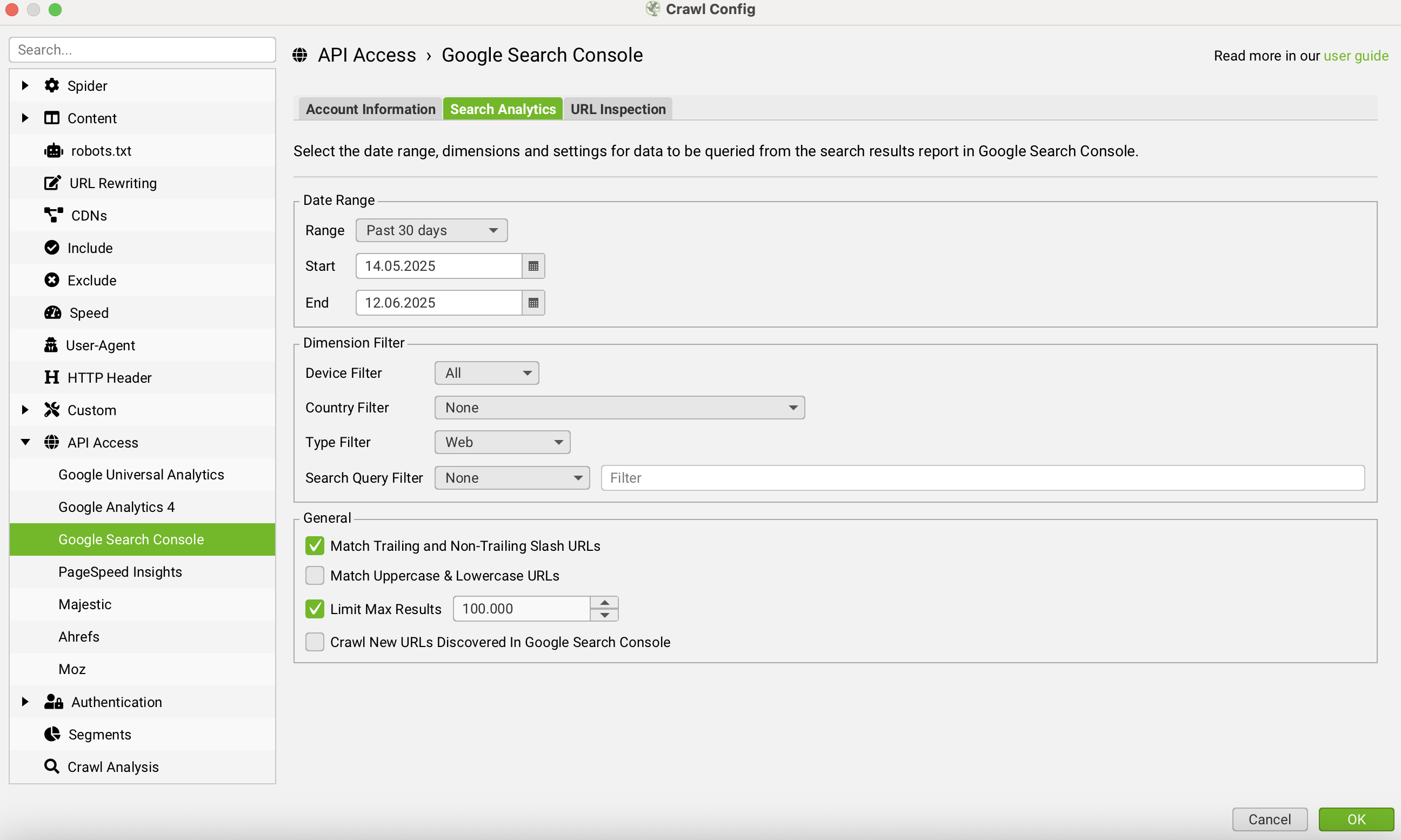

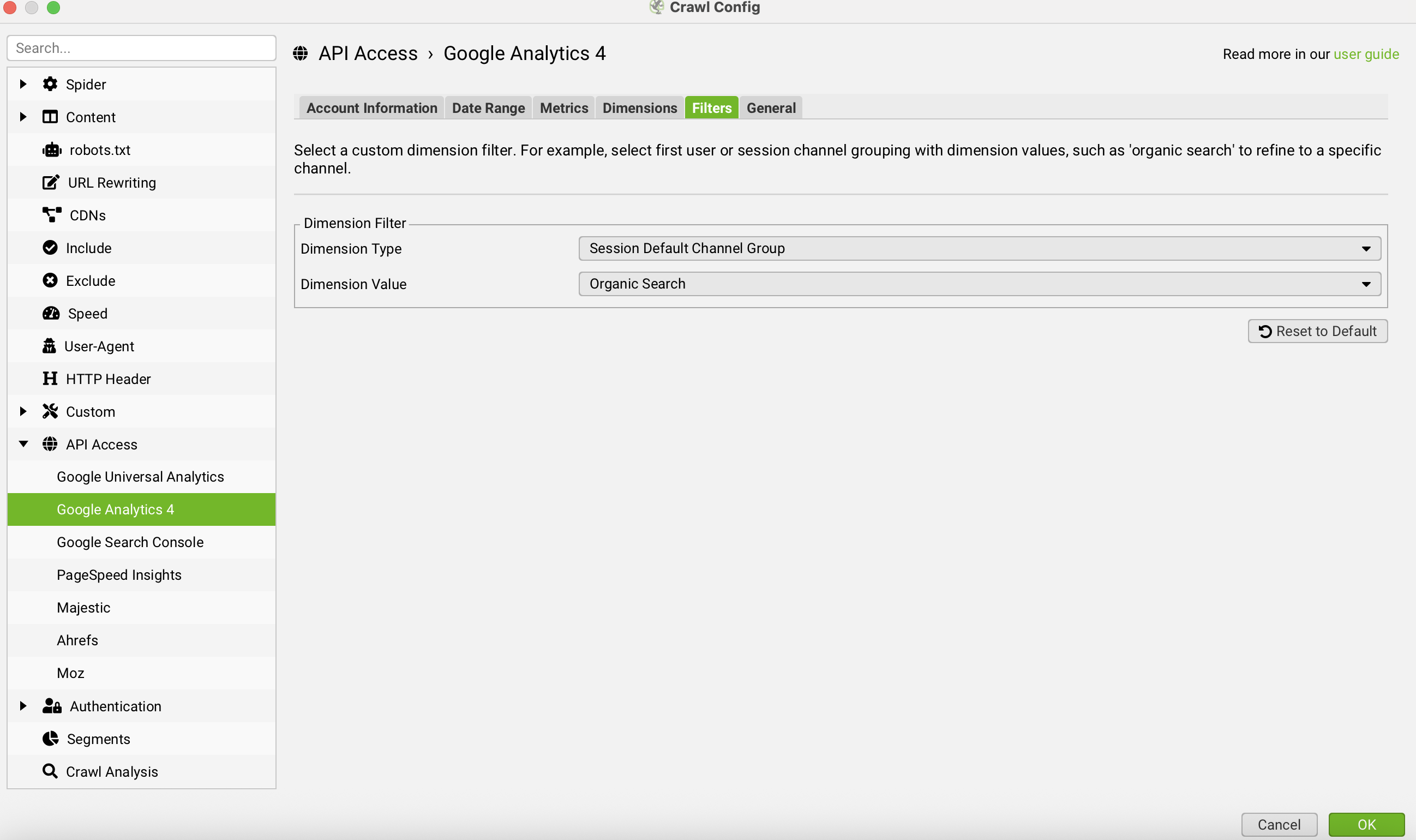

Under Configuration > API Access, integrate Google Analytics 4 and Google Search Console to enrich crawl data with Google performance metrics tied to each URL.

Once done, save this configuration via File > Configuration > Save As into a .seospiderconfig file. This file will serve as the blueprint for all future automated crawls.

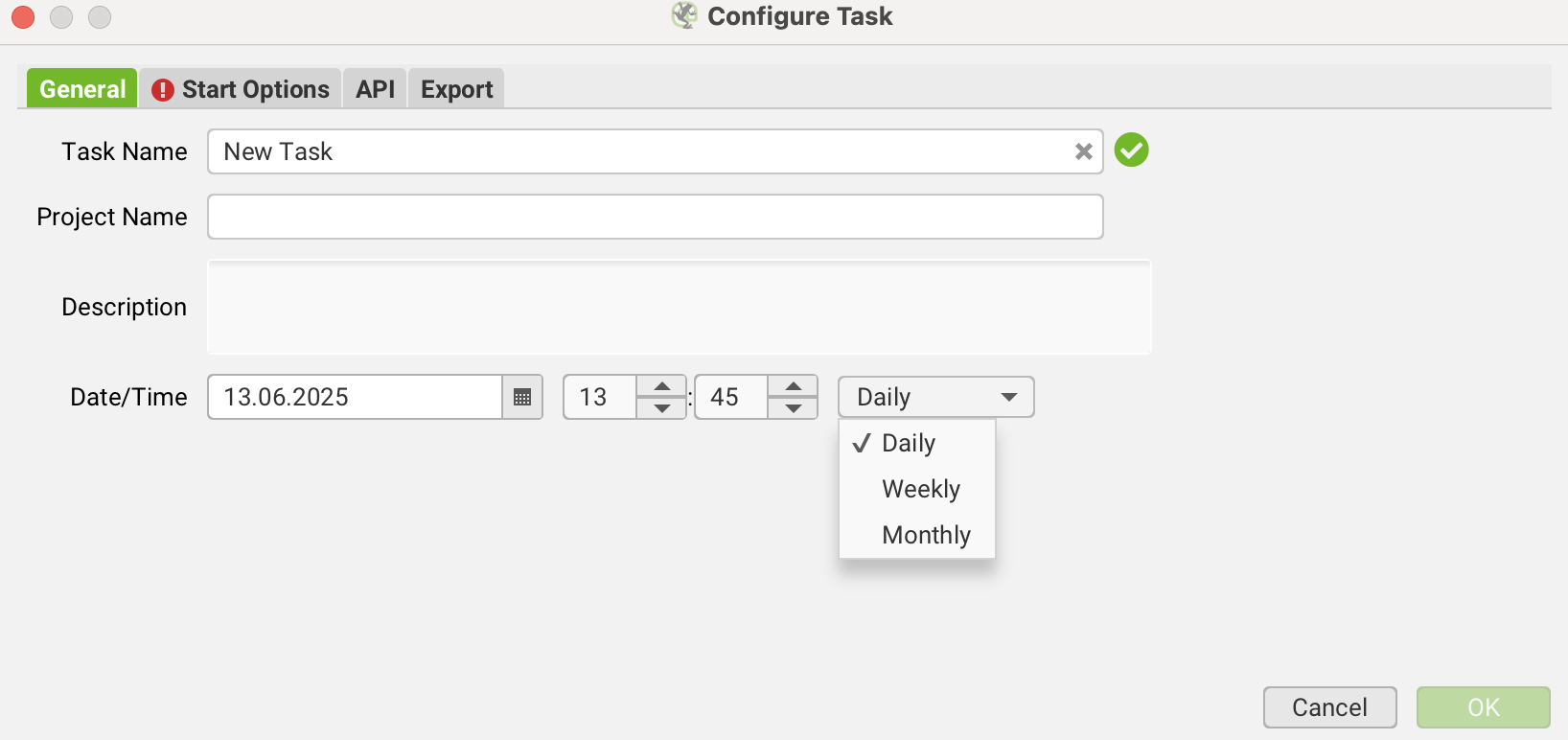

Step 3: Create a Scheduled Task:

Under File > Scheduling, click the Add button to create a new task. Use the Date/Time field to set how frequently the crawl should run—options include Daily, Weekly, and Monthly.

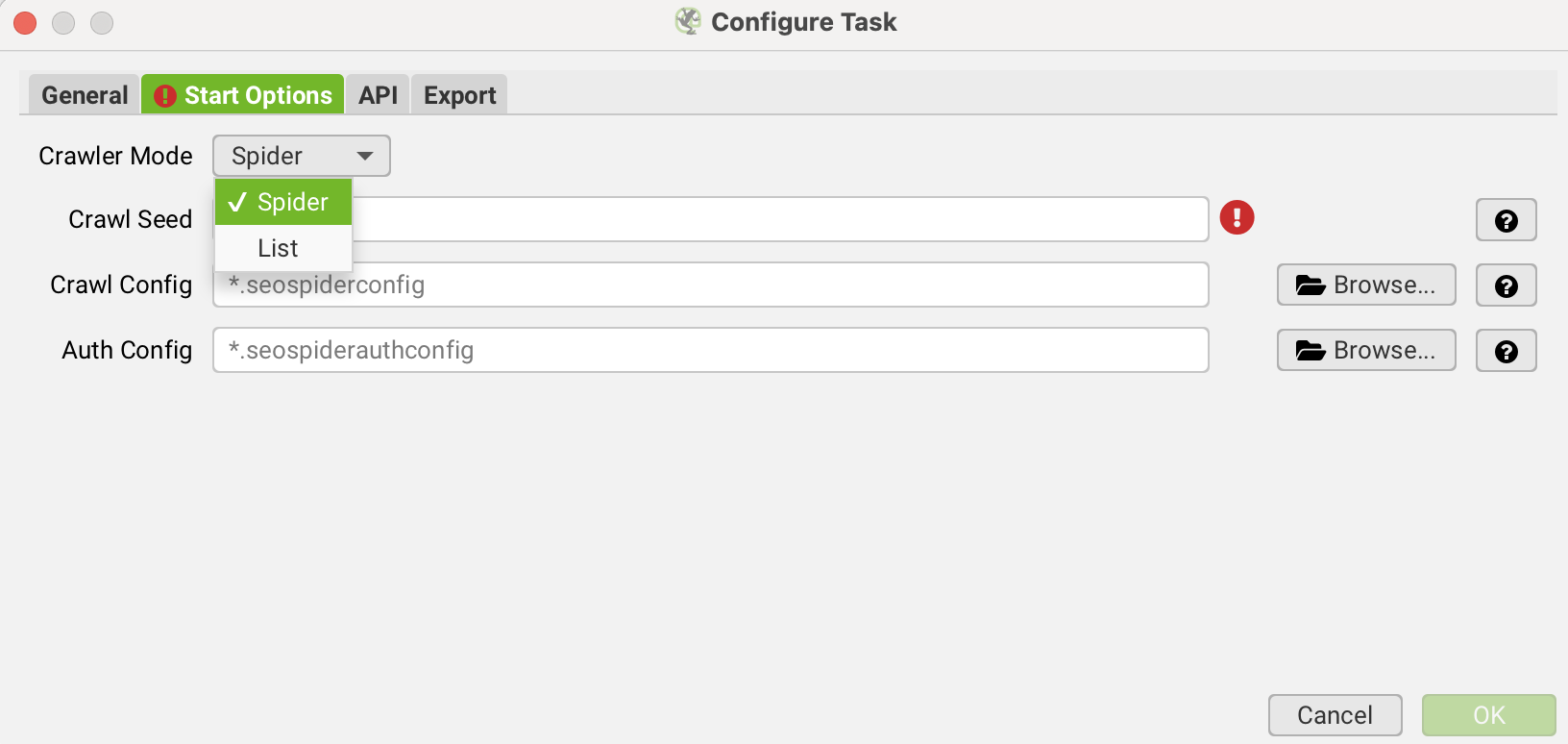

Step 4: Choose the Crawl Mode:

You can opt for Spider mode (the standard crawl that follows links from a start URL) or List mode. In List mode, you can crawl a specific list of URLs using a locally stored file such as an .xlsx.

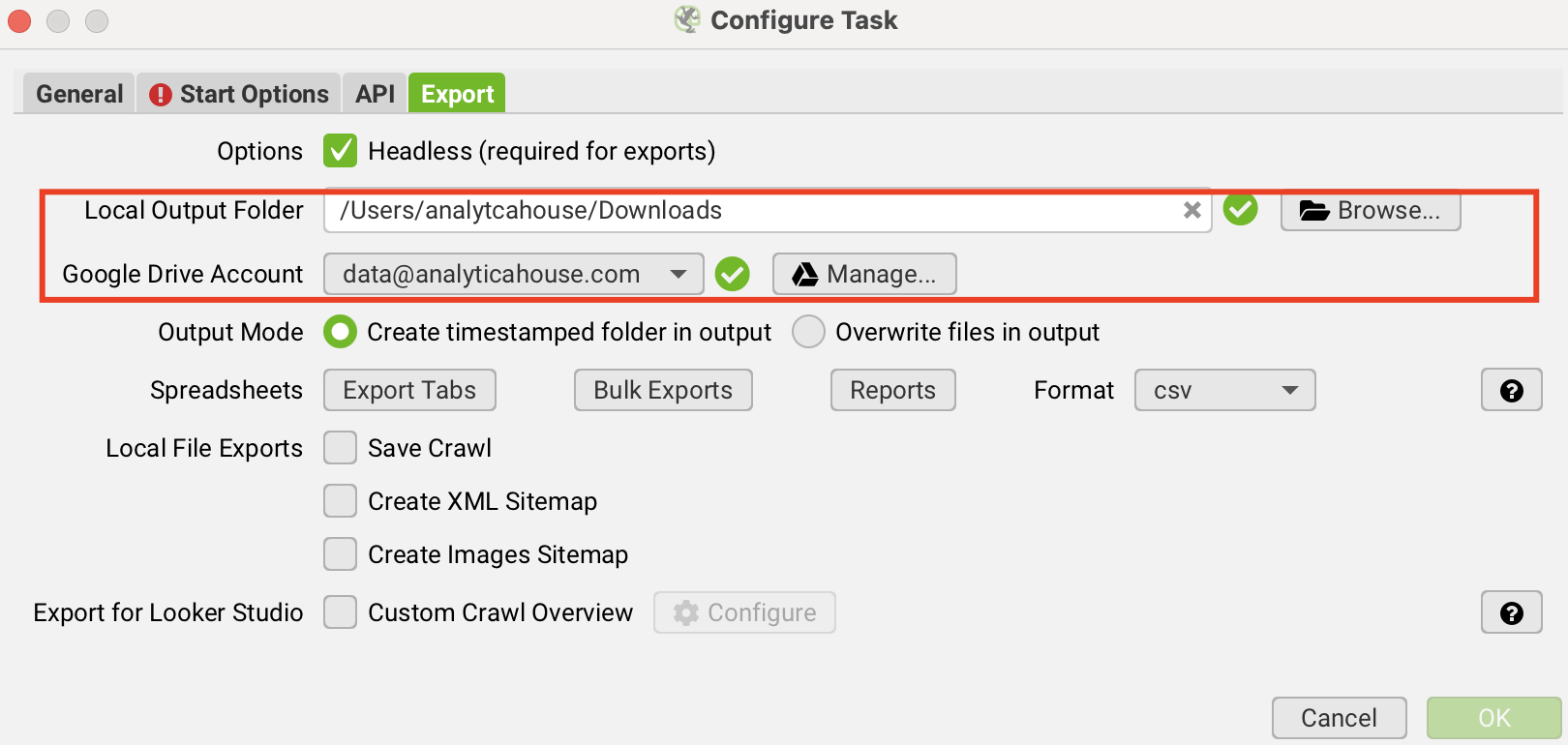

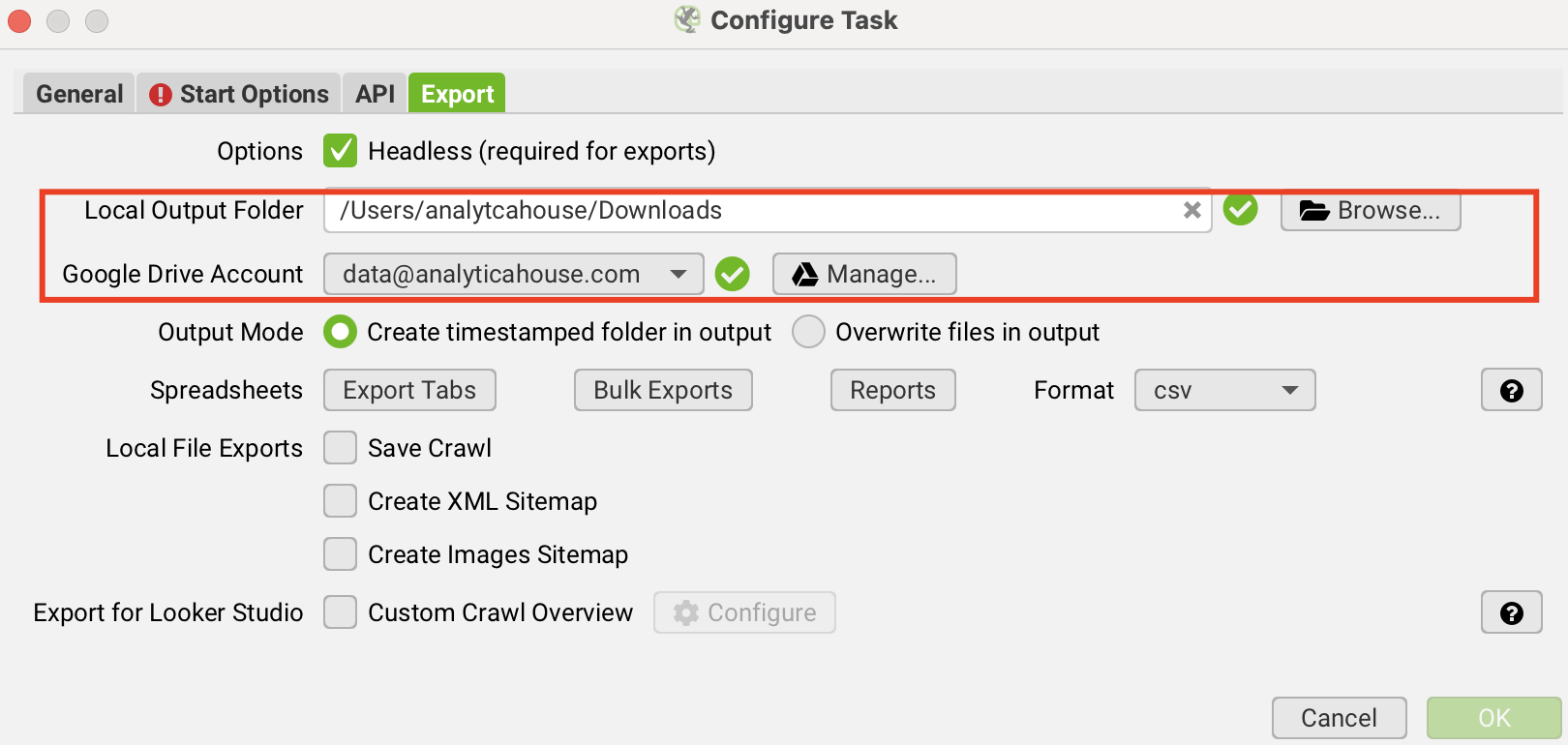

Step 5: Define the Output Location:

Decide where the crawl output should be saved—either locally or to your connected Google Drive account.

Step 6: Export to Google Drive or Google Sheets:

To export reports directly to Google Drive or Sheets, simply link your Google account under the Google Drive Account menu.

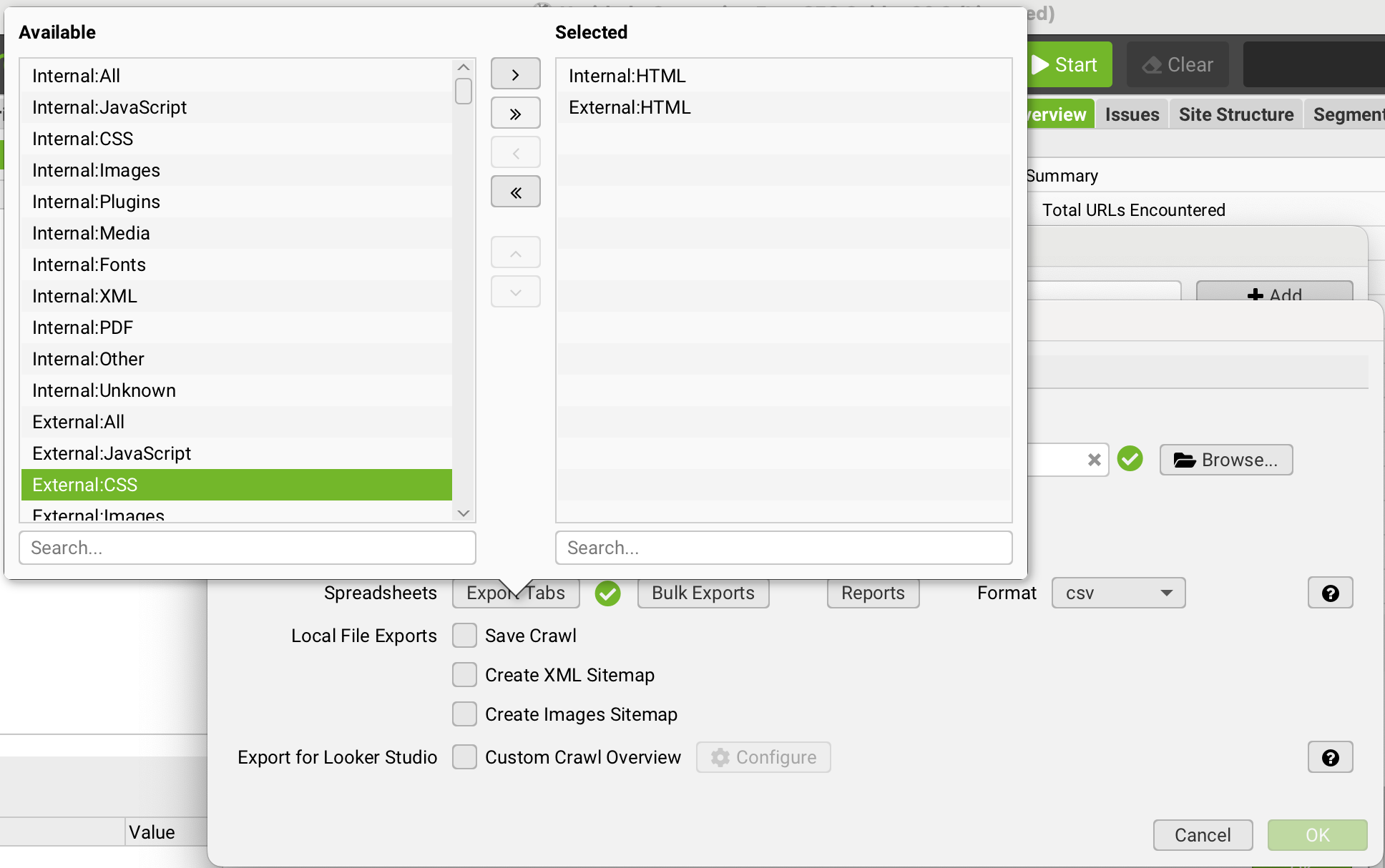

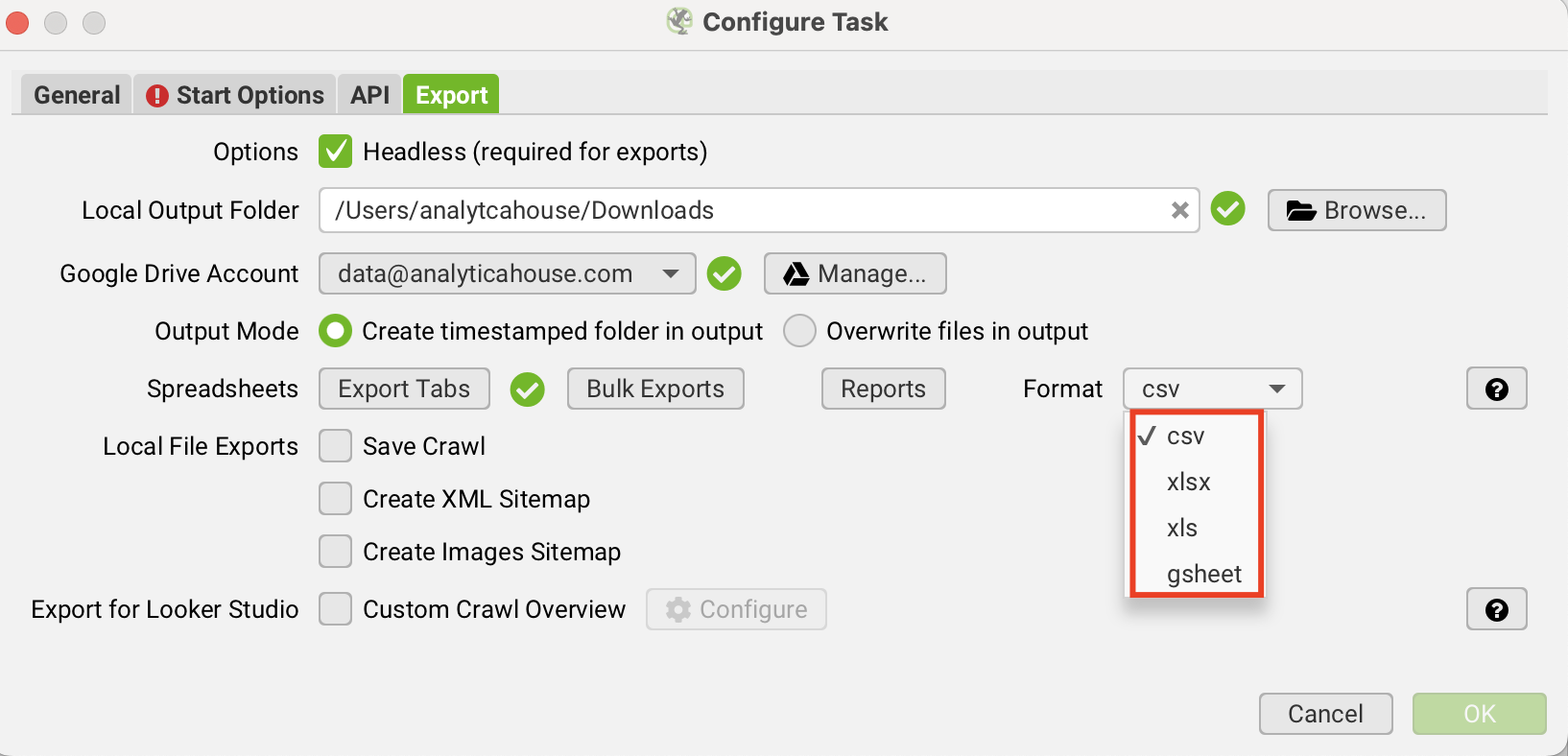

Step 7: Select Reports and Export Settings:

In the Reports and Exports tabs, select which predefined reports (e.g., Redirects, Title Errors, etc.) should be automatically generated and saved after each crawl.

Step 8: Choose Export Format:

Specify the format in which you want the data to be saved (e.g., CSV, XLSX).

Optional: Integrate with Looker Studio to visualize and analyze your exports more interactively.
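The steps above configure a task in the scheduling dialog, but the same crawl can also be launched from a script through the SEO Spider's command-line interface. The following is a minimal sketch, not an official recipe: the launcher name `screamingfrogseospider` is an assumption (it differs by operating system), the flags follow the tool's documented command-line options as best I know them, and the default export name `Internal:All` is only an example. Confirm all of them against `--help` for your installed version.

```python
from pathlib import Path


def build_crawl_command(
    start_url,
    config_path,
    output_dir,
    export_format="csv",
    export_tabs=("Internal:All",),        # example tab:filter name, must match the UI
    binary="screamingfrogseospider",      # assumed launcher name, varies by OS
):
    """Return the argument list for one headless crawl. Nothing is executed here."""
    if export_format not in {"csv", "xls", "xlsx", "gsheet"}:
        raise ValueError(f"unsupported export format: {export_format}")
    return [
        binary,
        "--crawl", start_url,
        "--headless",                         # run without the user interface
        "--config", str(config_path),         # the saved .seospiderconfig file
        "--output-folder", str(output_dir),
        "--timestamped-output",               # one dated subfolder per run
        "--export-format", export_format,
        "--export-tabs", ",".join(export_tabs),
    ]


command = build_crawl_command(
    "https://example.com",
    Path("weekly-audit.seospiderconfig"),     # hypothetical file name
    Path("crawl-exports"),                    # hypothetical output folder
)
```

The resulting list can be passed to `subprocess.run(command, check=True)` from cron or Windows Task Scheduler if you prefer to manage timing outside the application.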

Data Enrichment: Google Search Console & GA4 API Integration

The technical insights Screaming Frog provides (URL structure, HTTP status codes, meta tags, etc.) are extremely valuable, but they only tell half the story. The other half is how users interact with those pages and how Google evaluates them in SERPs.

That’s where Google Search Console (GSC) and Google Analytics 4 (GA4) API integration becomes crucial. This integration merges technical data with performance and behavioral metrics—empowering you to move from basic queries like:

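“Which pages have titles over 60 characters?” to “Which pages have high impressions, low CTR, and also a poor Largest Contentful Paint (LCP) score?” (Note that LCP comes from the separate PageSpeed Insights integration in the same API Access menu, not from GSC or GA4.)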

To enable this, API authorization must be completed for the relevant sources in Screaming Frog. Once integrated and configured, save your settings as a .seospiderconfig file or upload it to your Drive account.

When you assign this configuration to your scheduled task, Screaming Frog will fetch current data from GSC and GA4 on each crawl: clicks, impressions, CTR, average position, users, sessions, conversions, and so on. Keep in mind that these figures are only as fresh as the source APIs, which report with some delay. This allows you to prioritize technical SEO tasks based on actual business impact.
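To illustrate the kind of prioritization this enables, here is a minimal sketch that filters an enriched crawl export for pages with many impressions but few clicks. It assumes each row is a dictionary (for example from `csv.DictReader`) with the columns `Address`, `Impressions`, and `CTR`, and that CTR is stored as a fraction; the thresholds are arbitrary placeholders, and the column names should be checked against your own export.

```python
def low_ctr_candidates(rows, min_impressions=1000, max_ctr=0.01):
    """Return URLs with high impressions and low CTR, highest impressions first."""
    picked = []
    for row in rows:
        # Empty cells (URLs with no GSC data) are treated as zero.
        impressions = int(float(row["Impressions"] or 0))
        ctr = float(row["CTR"] or 0)
        if impressions >= min_impressions and ctr <= max_ctr:
            picked.append((impressions, row["Address"]))
    picked.sort(reverse=True)
    return [address for _, address in picked]


# Hypothetical sample rows standing in for a real export.
sample = [
    {"Address": "https://example.com/a", "Impressions": "5000", "CTR": "0.005"},
    {"Address": "https://example.com/b", "Impressions": "200", "CTR": "0.001"},
    {"Address": "https://example.com/c", "Impressions": "12000", "CTR": "0.008"},
]
candidates = low_ctr_candidates(sample)
```

Pages returned by this filter are natural candidates for title and meta description work, since they are already visible in search but rarely clicked.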

Automated Reporting: Advanced Export Settings

Screaming Frog’s scheduling feature lets you fully automate your reporting process. Instead of dealing with dozens of export files after every crawl, you can predefine which specific reports should be automatically created.

For example, for a weekly “Crawl Health Check,” you might only export:

- 4xx Error Pages

- Redirect Chains

- Canonical Issues

- Non-Indexable Pages

AnalyticaHouse Pro-Tip: Practical Use Cases for Scheduled Crawls

The key to maximizing the benefits of scheduled crawls is to apply them in the right scenarios. At AnalyticaHouse, we frequently use scheduled crawls for:

Weekly Crawling Check

Monitor critical SEO issues weekly:

- 5xx Server Errors

- New 4xx Pages

- Indexability Issues: Detect mistakenly noindexed or robots.txt-blocked pages

- Canonical Tag Errors: Identify duplicate content issues due to incorrect canonicalization
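Spotting new 4xx pages means comparing this week's export with last week's. The sketch below shows one way to do that with the standard library, assuming each export is a CSV with `Address` and `Status Code` columns (check these names against your own files); the file paths passed to `load_rows` are up to you.

```python
import csv


def load_rows(path):
    """Read a crawl export into a list of dictionaries, one per URL."""
    with open(path, newline="", encoding="utf-8-sig") as handle:
        return list(csv.DictReader(handle))


def new_client_errors(previous_rows, current_rows):
    """Return URLs that respond with 4xx now but did not in the previous crawl."""
    def client_errors(rows):
        return {
            row["Address"]
            for row in rows
            if row["Status Code"].strip().startswith("4")
        }
    return sorted(client_errors(current_rows) - client_errors(previous_rows))
```

A URL that was already a 404 last week is deliberately excluded, so the output stays focused on regressions rather than known issues.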

Competitor Analysis

Set up weekly or monthly crawls of competitors to automatically monitor their strategic moves:

- Changes in site architecture or URL hierarchy

- New meta titles and descriptions reflecting targeted keywords

- Newly added content sections or blog posts

- Implementation of new structured data types (Schema)
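As a rough illustration of how two successive competitor crawls could be compared, the following sketch reports newly discovered URLs and pages whose title changed. It assumes rows are dictionaries with `Address` and `Title 1` columns, which you should verify against your export.

```python
def diff_titles(previous_rows, current_rows):
    """Return (new_urls, changed) where changed maps URL -> (old_title, new_title)."""
    before = {row["Address"]: row["Title 1"] for row in previous_rows}
    after = {row["Address"]: row["Title 1"] for row in current_rows}
    new_urls = sorted(after.keys() - before.keys())
    changed = {
        url: (before[url], after[url])
        for url in sorted(after.keys() & before.keys())
        if before[url] != after[url]
    }
    return new_urls, changed
```

New URLs grouped under a shared path can hint at a new content section, while title changes often reflect a shift in targeted keywords.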

Post-Migration Checks

After a site migration, it’s vital to ensure that 301 redirects from old to new URLs work correctly. Manually checking thousands of URLs is unrealistic.

Instead, schedule a List Mode crawl using a list of old URLs. This checks that every legacy URL still redirects properly. If a redirect breaks (e.g., leads to a 404), it will show up in the next scheduled export instead of going unnoticed.
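A List Mode export can then be checked against the redirect map from the migration plan. This is a minimal sketch under a few assumptions: the export rows carry `Address`, `Status Code`, and `Redirect URL` columns, every redirect is expected to be a single 301 hop, and `expected` is a hypothetical dictionary of old URL to new URL that you maintain yourself.

```python
def broken_redirects(expected, rows):
    """Return (old_url, problem) pairs for redirects that do not match the plan."""
    crawled = {row["Address"]: row for row in rows}
    problems = []
    for old_url, new_url in expected.items():
        row = crawled.get(old_url)
        if row is None:
            problems.append((old_url, "not crawled"))
        elif row["Status Code"] != "301":
            problems.append((old_url, f"status {row['Status Code']}"))
        elif row["Redirect URL"] != new_url:
            problems.append((old_url, f"redirects to {row['Redirect URL']}"))
    return problems
```

An empty result means every legacy URL in the map returned a 301 to its planned destination in that crawl.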

Centralizing All SEO Data in Google BigQuery

The main objective here is to build a scalable and queryable data warehouse that combines data from Screaming Frog, GA4, and GSC. This allows you to build fully automated, detailed, and customizable reports using tools like Looker Studio or Google Sheets.

Key Components:

- Schedule Timing: Customize frequency and timing of crawls

- GA4 & GSC Integration: Google metrics per URL, refreshed with each crawl

- BigQuery & URL Breakdown: Store and categorize URLs by path type (e.g., /blog, /category, /product)

- Metric Selection: Choose which performance metrics to pull (e.g., GA4 Sessions, Purchases, Revenue, Conversion Rate; GSC Impressions, Clicks, CTR, Average Position)

- Functional Reporting: Upload bulk URL lists to monitor their performance over time

- Looker Studio & Sheets Integration: Use the BigQuery table to create interactive dashboards and automated reports that refresh whenever new crawl data is loaded
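The URL breakdown by path type can be computed before the rows are loaded into BigQuery. Below is a minimal sketch that labels each URL by its first path segment; the section names and the `home` and `other` fallback labels are illustrative assumptions, not part of any Screaming Frog or BigQuery feature.

```python
from collections import Counter
from urllib.parse import urlsplit


def path_type(url, known=("blog", "category", "product")):
    """Label a URL by its first path segment, e.g. /blog/post -> 'blog'."""
    segments = [part for part in urlsplit(url).path.split("/") if part]
    if not segments:
        return "home"
    first = segments[0].lower()
    return first if first in known else "other"


# Hypothetical URLs standing in for the Address column of an export.
urls = [
    "https://example.com/",
    "https://example.com/blog/seo-tips",
    "https://example.com/product/123?ref=home",
    "https://example.com/about",
]
section_counts = Counter(path_type(url) for url in urls)
```

Storing this label as its own column lets dashboards filter and aggregate GA4 and GSC metrics per site section without parsing URLs in every query.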
