Analytica House

Sep 5, 2022

Screaming Frog User Guide and Configuration Settings

If you want to learn what Screaming Frog, one of the tools SEO specialists use most often, can do before you start using it, this article is for you.

What Is Screaming Frog?

Screaming Frog is a tool that crawls your site the way a search engine would and lists the metrics that matter for SEO, so you can see where your site falls short. It was founded in 2010 by Dan Sharp. Its main difference from its competitors is that it is not cloud-based: it is a Java-based application that you download and run on your own computer.

Screaming Frog has a free version and a premium version. The free version is limited to 500 URLs, while the paid version offers unlimited crawling. You can download it from https://www.screamingfrog.co.uk/seo-spider/#download and start using it.

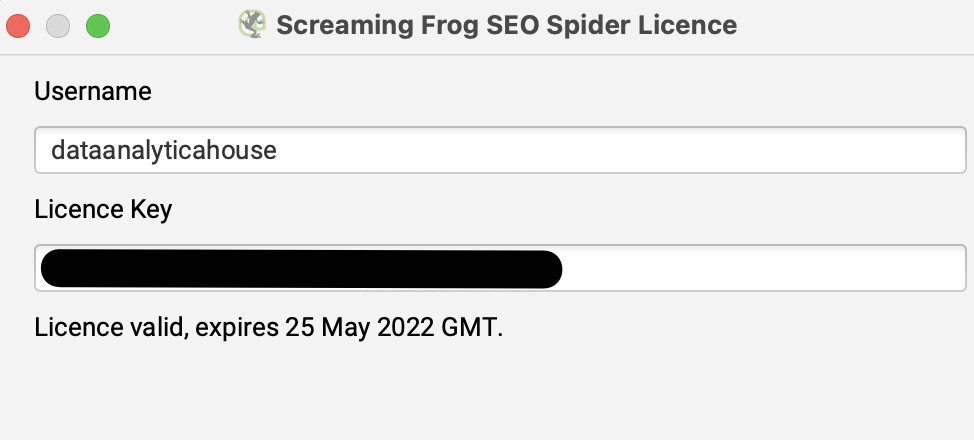

Buying and Activating a Screaming Frog Licence

If you want to use the premium version instead of the free one, buy a licence at https://www.screamingfrog.co.uk/seo-spider/licence/ and enter it in the tool you downloaded. After entering the username and licence number, close and reopen the program to start using the licensed version of Screaming Frog.

Settings and Configuration Options

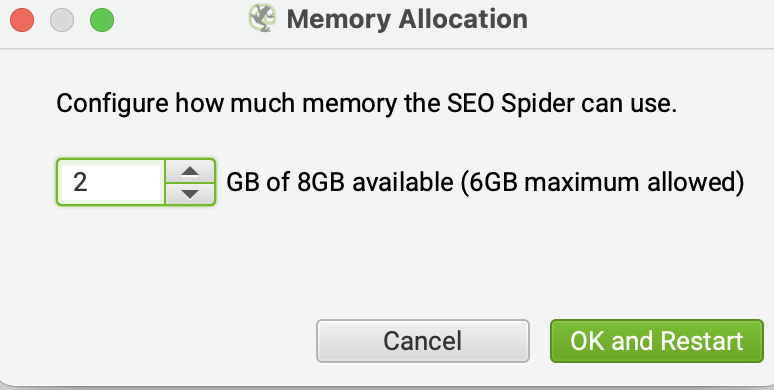

Memory Allocation: By default, Screaming Frog allocates 1GB of memory on 32-bit machines and 2GB on 64-bit machines. Raising this RAM allocation lets you crawl more URLs while in RAM mode.

When crawling websites with a large number of pages, you can raise this setting manually. On computers with plenty of memory, this lets the crawl finish sooner.

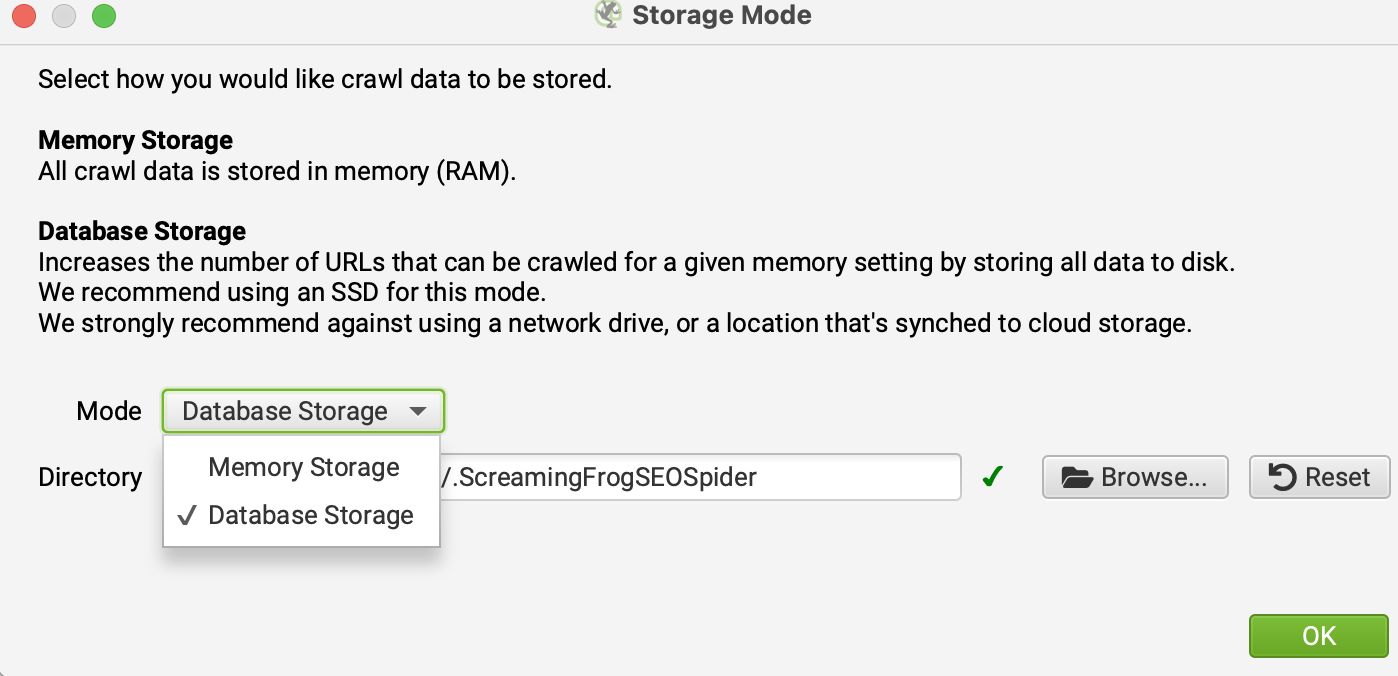

Storage Mode: This section controls where crawl data is stored and processed. There are two options: Memory Storage and Database Storage. With Memory Storage all data is kept in RAM, while with Database Storage it is stored on the HDD/SSD.

Memory Storage mode is recommended for sites with fewer URLs and for computers with a large amount of RAM.

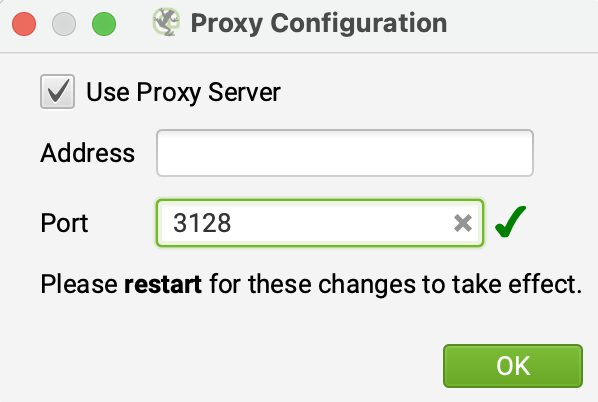

Proxy Settings: If you want to use a proxy, you can configure it in this section.

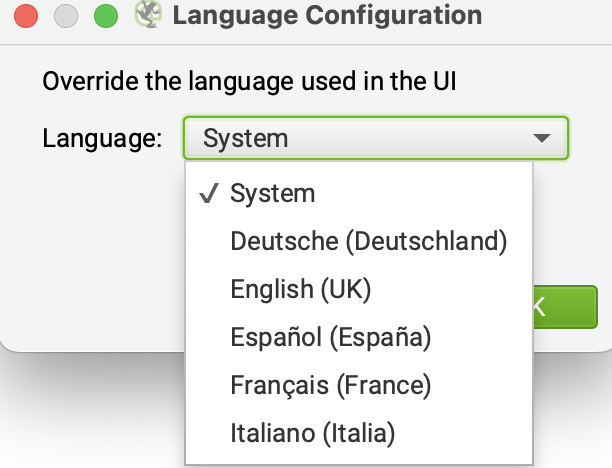

Language Settings: Here you can choose the language of the tool's interface.

Screaming Frog Mode Settings

Spider: In this mode, Screaming Frog's bots keep crawling until they have discovered every URL on the site you started from.

List: This mode lets you specify the URLs you want to crawl manually. Only the URLs you supply are crawled.

SERP: Previews how the meta title and description tags of the crawled pages will appear in search results.

Compare Mode: This mode compares your new crawl with a previous crawl, which makes it easier to see which issues have been resolved.
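The difference between Spider and List mode can be sketched in a few lines. This is only an illustration of the idea, not Screaming Frog's implementation: the `LINKS` graph, the example.com URLs, and the function names are all hypothetical, and no network requests are made.

```python
from collections import deque

# Hypothetical in-memory link graph: each URL maps to the internal links found on it.
LINKS = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": [],
    "https://example.com/c": ["https://example.com/"],
}


def spider_mode(start_url, links):
    """Breadth-first discovery: keep following links until no new URL turns up."""
    seen = [start_url]
    queue = deque([start_url])
    while queue:
        current = queue.popleft()
        for target in links.get(current, []):
            if target not in seen:
                seen.append(target)
                queue.append(target)
    return seen


def list_mode(url_list):
    """Visit only the supplied URLs, in order, without following any links."""
    return list(dict.fromkeys(url_list))  # drops duplicates, keeps order


print(spider_mode("https://example.com/", LINKS))
print(list_mode(["https://example.com/b", "https://example.com/c"]))
```

Spider mode ends up with all four URLs because it follows links from the start page, while list mode returns only the two URLs it was given.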

Screaming Frog Configuration Settings

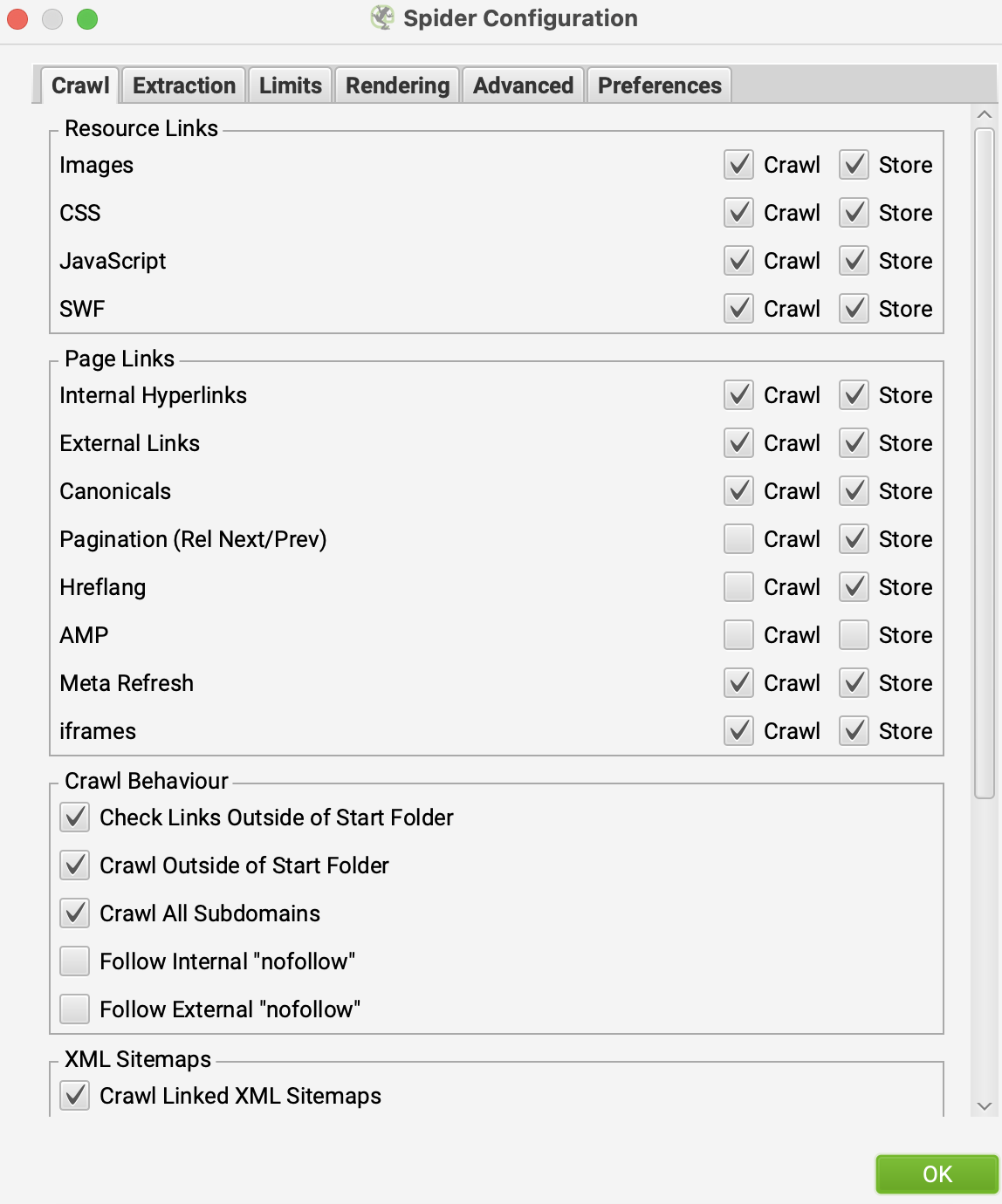

Spider: Before the crawl starts, you can choose which areas to include or leave out. In the Extraction tab, you can choose which data is extracted from each page and leave out what you do not need. In the Limits tab, you can set the boundaries or depth of the crawl. In the Rendering tab, you can change the rendering option so that data on JS-based sites is displayed correctly. In the Advanced tab, you can specify whether tags such as noindex, canonical, and next/prev are respected. In the Preferences tab, you can change the pixel limits for your meta tags.

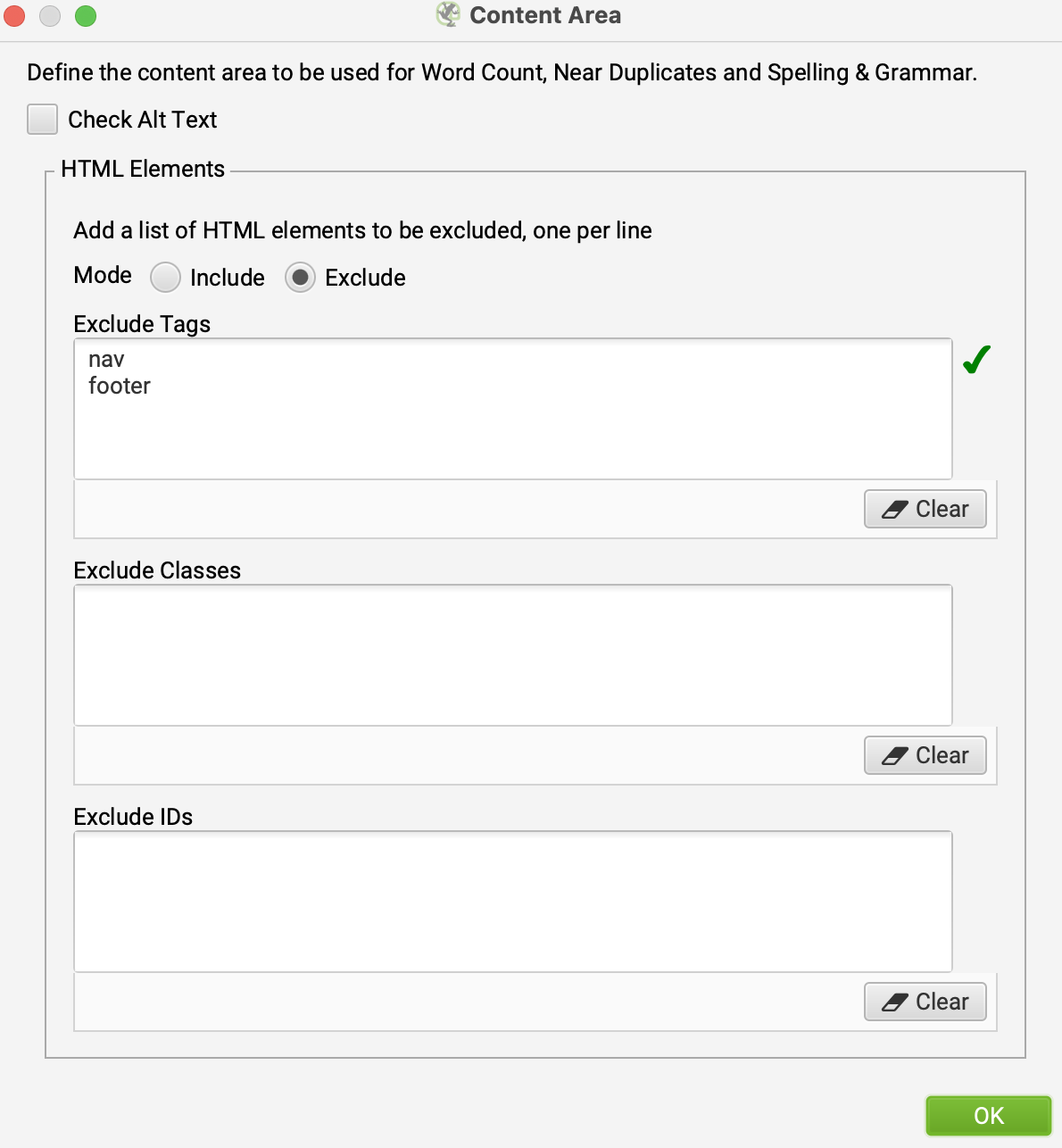

Content Area: Here you can control which part of each page is used for content analysis and for the spelling and grammar checks. Screaming Frog considers the content in a page's body. For sites that are not built with HTML5 semantic elements, customizing this setting is recommended so that the results are more accurate.

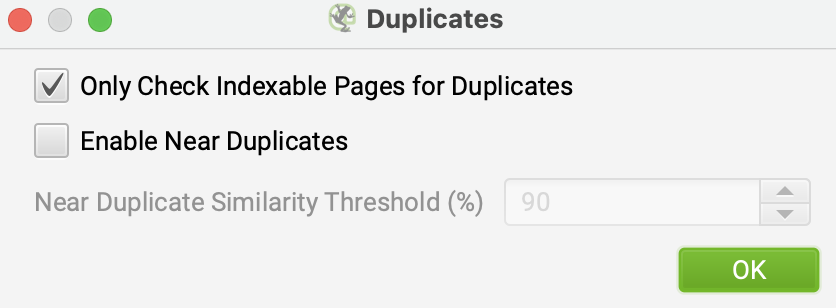

Duplicate Content: Here you can customize the settings used to test how original the content on your site is.
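To make the idea of a duplicate-content check concrete, here is a minimal sketch that scores two texts by the overlap of their three-word shingles (Jaccard similarity). This is a stand-in technique chosen for illustration. It is not claimed to be the algorithm Screaming Frog uses, and the function names and the shingle size are assumptions.

```python
def shingles(text, size=3):
    """Return the set of overlapping word n-grams (shingles) in the text."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)}
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a, text_b):
    """Jaccard similarity of the two shingle sets, between 0.0 and 1.0."""
    set_a, set_b = shingles(text_a), shingles(text_b)
    return len(set_a & set_b) / len(set_a | set_b)


page_a = "the quick brown fox jumps"
page_b = "the quick brown fox sleeps"
print(similarity(page_a, page_b))  # 2 shared shingles out of 4 distinct -> 0.5
```

A duplicate-content setting then amounts to choosing the score above which two pages are reported as near-duplicates.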

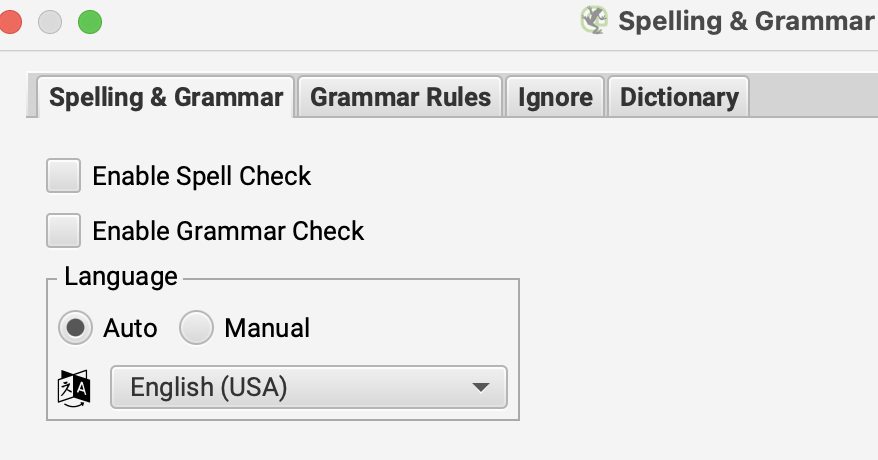

Spelling and Grammar: To detect spelling and grammar errors, the “Spelling” and “Grammar” options must be enabled. With them on, these checks are included in the crawl.

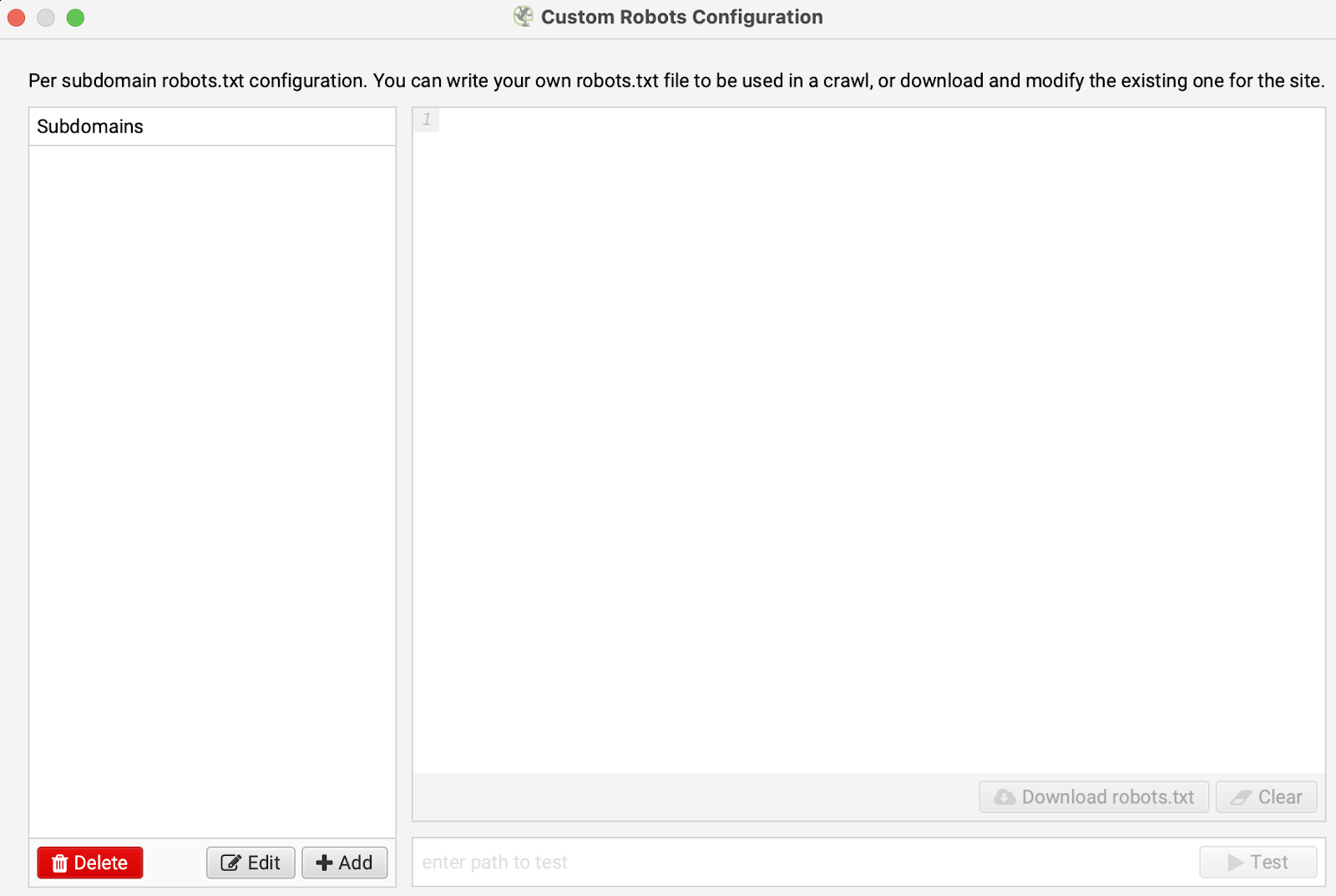

Robots.txt: Here you can configure the crawl to ignore the directives in the site's robots.txt file. You can also create your own robots file and crawl according to it.
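Before running a crawl against a custom robots file, it can help to check how the rules treat a few URLs. The sketch below uses Python's standard `urllib.robotparser`, whose matching rules may differ in detail from Screaming Frog's. The robots rules, the example.com URLs, and the `is_allowed` helper are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical custom robots.txt used only for this illustration.
CUSTOM_ROBOTS = """\
User-agent: *
Disallow: /checkout/
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in ("https://example.com/products", "https://example.com/checkout/cart"):
    print(url, is_allowed(CUSTOM_ROBOTS, "Screaming Frog SEO Spider", url))
```

Here the product URL is allowed and the checkout URL is blocked. Ignoring robots.txt corresponds to skipping this check altogether.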

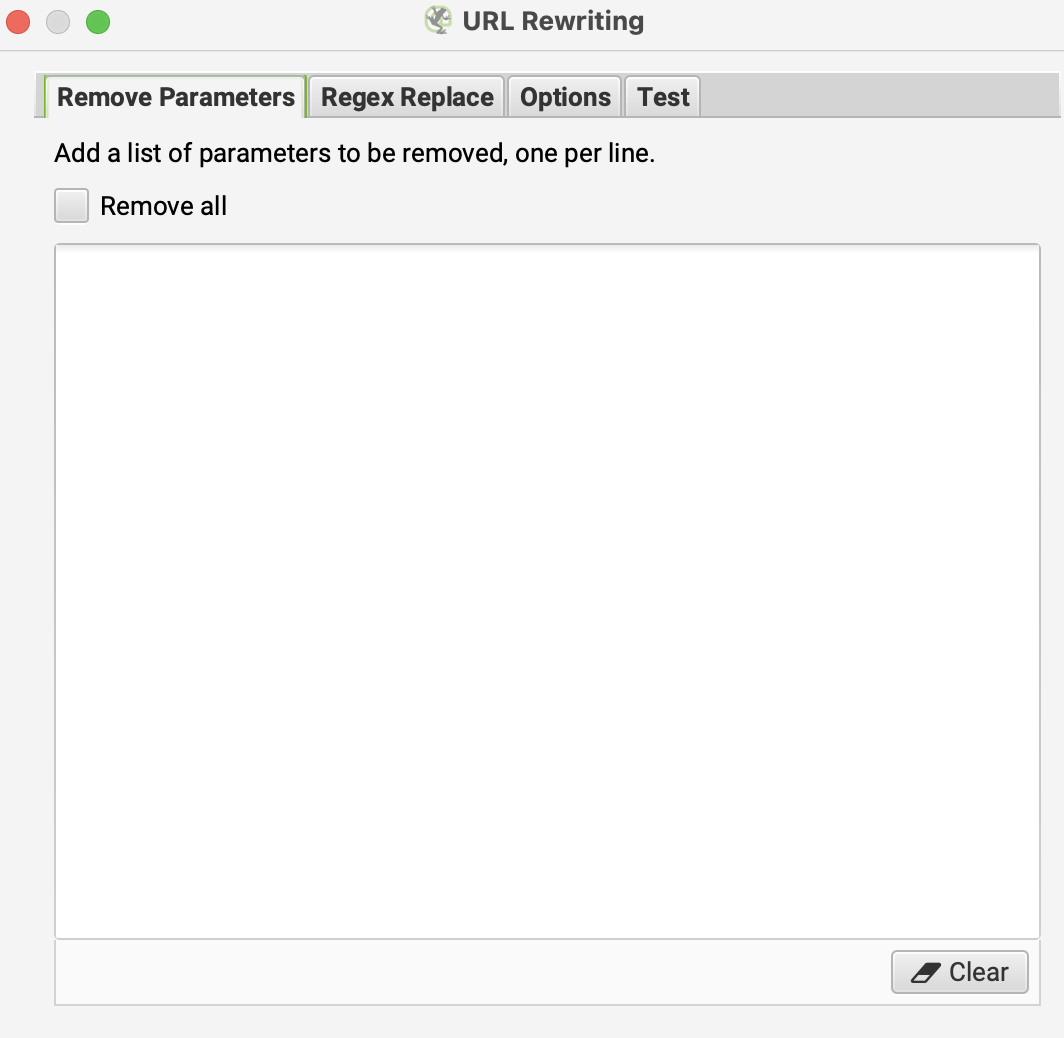

URL Rewriting: Here you can rewrite URLs so that parts you do not want to see in the crawl results are removed or replaced. For example, you can use Regex rules to rewrite www URLs as non-www, or .co.uk domains as .com. You can also use this area to make URLs on your test site's subdomain appear as if they were on the live site.
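The two rewrites mentioned above (www to non-www, .co.uk to .com) can be tried out as ordinary regex replacements. This is a sketch in Python's `re` syntax. Screaming Frog is Java-based, so the replacement syntax you type into the tool may differ (Java regex refers to groups as `$1` rather than `\1`). The rule list and function name are assumptions.

```python
import re

# (pattern, replacement) pairs applied in order; illustrative rules only.
REWRITE_RULES = [
    (r"^(https?://)www\.", r"\1"),   # drop a leading "www."
    (r"\.co\.uk(?=/|$)", ".com"),    # swap the .co.uk suffix for .com
]


def rewrite_url(url, rules=REWRITE_RULES):
    """Apply each regex replacement to the URL in turn."""
    for pattern, replacement in rules:
        url = re.sub(pattern, replacement, url)
    return url


print(rewrite_url("https://www.example.co.uk/page"))  # https://example.com/page
```

A rule that maps a staging subdomain onto the live hostname follows the same pattern.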

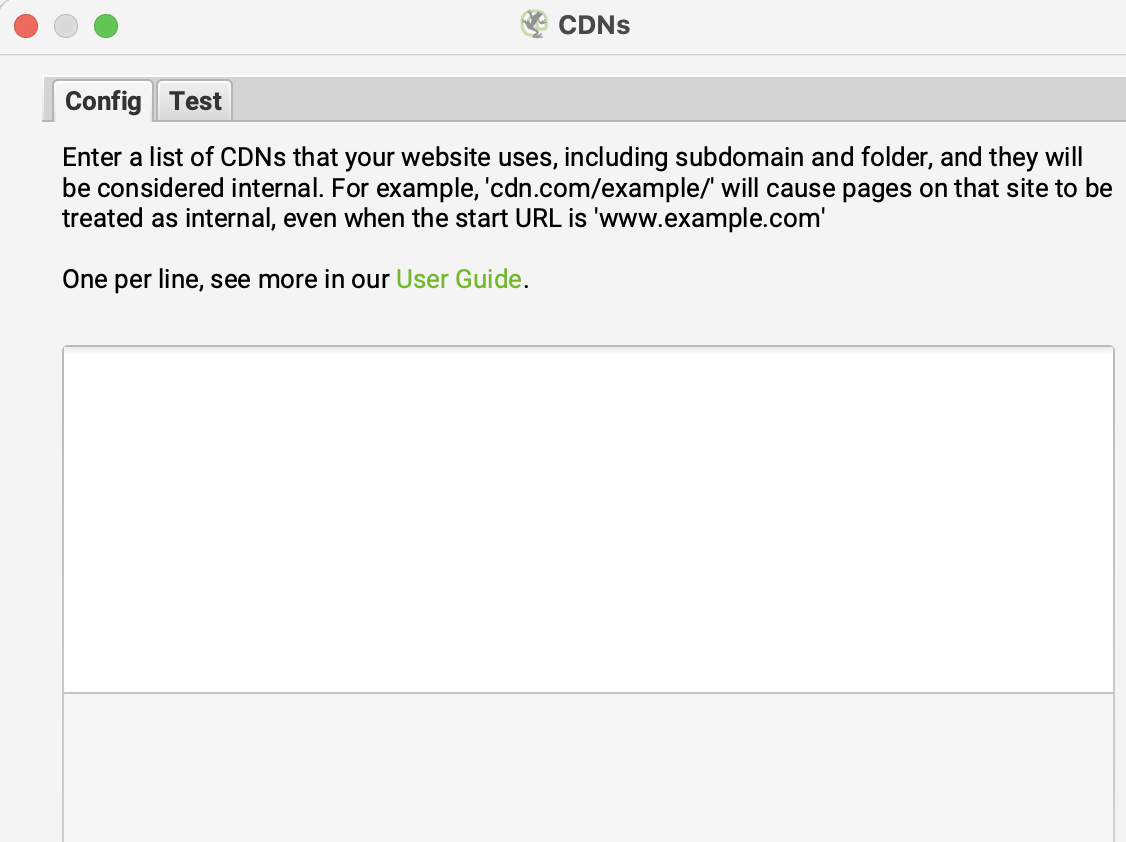

CDN: The CDN tab makes Screaming Frog treat your CDN service's URLs as internal links. Once it is set, links on your CDN address appear in Screaming Frog's “Internal” tab, where more detail is shown for them.
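Conceptually, the CDN setting widens the definition of an "internal" URL. A minimal sketch of that classification, assuming exact hostname matching and using hypothetical hostnames and a hypothetical `is_internal` helper:

```python
from urllib.parse import urlsplit


def is_internal(url, site_host, cdn_hosts=()):
    """Treat a URL as internal if it is on the site host or a listed CDN host."""
    host = urlsplit(url).hostname or ""
    return host == site_host or host in cdn_hosts


asset = "https://cdn.example-cdn.net/img/logo.png"
print(is_internal(asset, "www.example.com"))                           # False
print(is_internal(asset, "www.example.com", ["cdn.example-cdn.net"]))  # True
```

Without the CDN host in the list, the asset would be classified as external.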

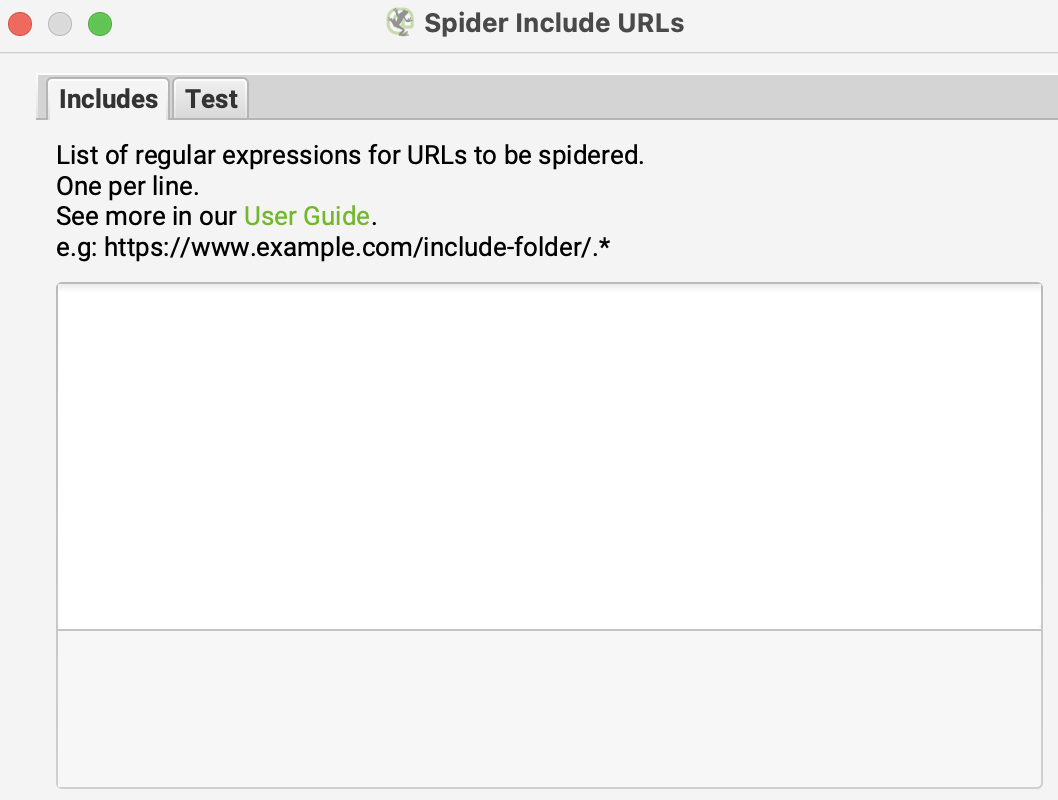

Include: On sites with a very large number of URLs, the Include feature can be used to crawl only the URLs you are interested in. For it to work, the URL you start the crawl from must contain an internal link that matches the regex. Otherwise, Screaming Frog cannot crawl any URL beyond the first one. The start URL itself must also match the rules you specify here.

https://www.screamingfrog.co.uk/seo-spider/user-guide/configuration/#include

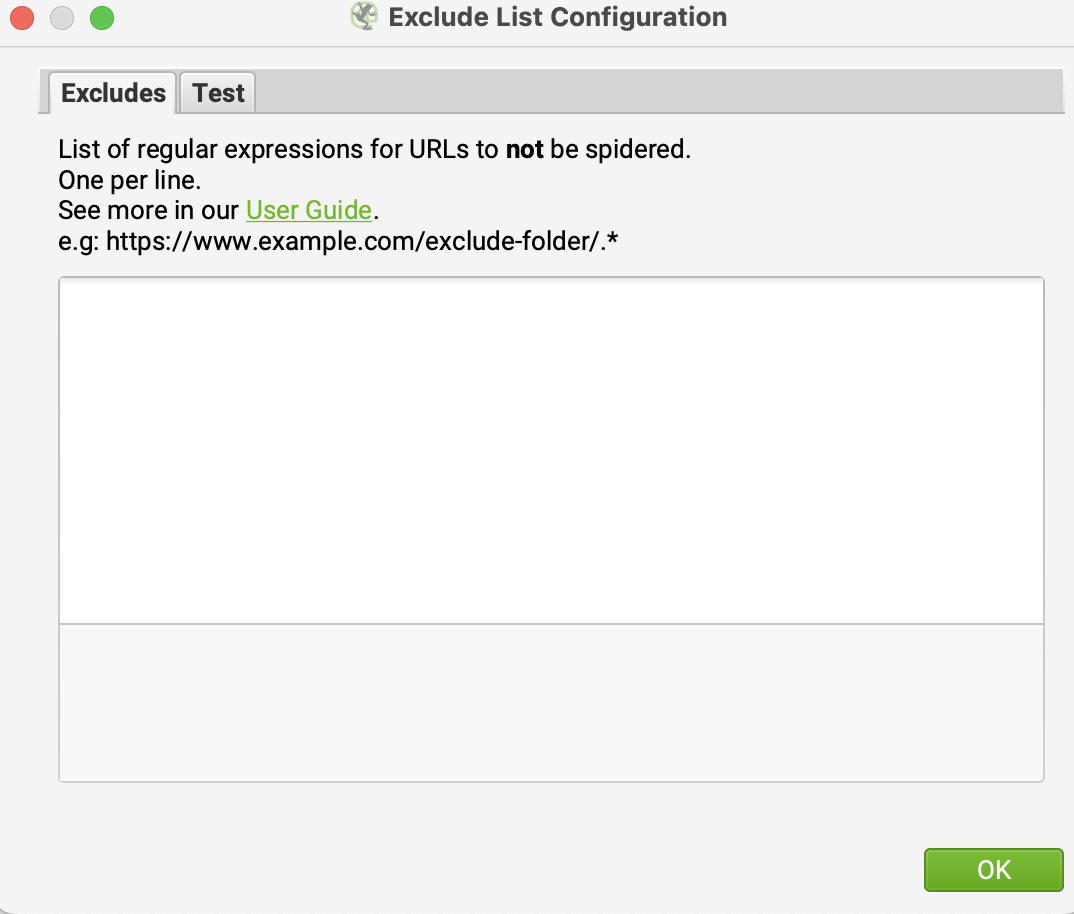

Exclude: This feature lets you leave out URLs, folders, or parameters that you do not want crawled. You specify what to exclude by writing Regex expressions. Note that this setting applies only to URLs discovered during the crawl. It does not apply to the URL you start the crawl from.

https://www.screamingfrog.co.uk/seo-spider/user-guide/configuration/#exclude
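The combined effect of Include and Exclude rules can be sketched as a simple filter. This illustration uses Python's `re.search` (a match anywhere in the URL), and the exact matching semantics inside Screaming Frog should be checked against the user guide linked above. The patterns, URLs, and `should_crawl` name are hypothetical.

```python
import re


def should_crawl(url, include=(), exclude=()):
    """Return True if the URL passes the exclude rules and, if any, the include rules."""
    if any(re.search(pattern, url) for pattern in exclude):
        return False
    if include and not any(re.search(pattern, url) for pattern in include):
        return False
    return True


include = [r"/blog/"]
exclude = [r"\?sort=", r"/blog/tag/"]

for url in (
    "https://example.com/blog/seo-guide",
    "https://example.com/blog/tag/seo",
    "https://example.com/blog/seo-guide?sort=date",
    "https://example.com/shop/item-1",
):
    print(url, should_crawl(url, include, exclude))
```

Only the first URL passes: the second and third hit an exclude rule, and the last one matches no include rule.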

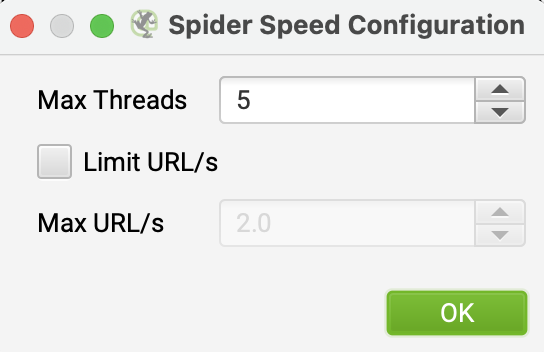

Speed: Here you can set how many URLs the tool crawls per second. In the default settings, the maximum number of threads is 5 and the URLs-per-second value is 2. Depending on the server performance of the site you are crawling, you may need to lower the crawl speed. If the number of requests per second is too high, you may run into 500 errors.
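A quick back-of-the-envelope calculation shows what a URL-per-second limit means for crawl duration. The sketch assumes the crawl runs at exactly the configured rate, which a real crawl will not do, and the function name is hypothetical.

```python
def estimated_crawl_seconds(url_count, urls_per_second=2.0):
    """Rough lower bound on crawl time at a fixed request rate."""
    if urls_per_second <= 0:
        raise ValueError("urls_per_second must be positive")
    return url_count / urls_per_second


seconds = estimated_crawl_seconds(10_000, urls_per_second=2.0)
print(f"{seconds:.0f} s, about {seconds / 60:.0f} min")  # 5000 s, about 83 min
```

Halving the rate to protect a slow server doubles this estimate, which is the trade-off to weigh when lowering the speed.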

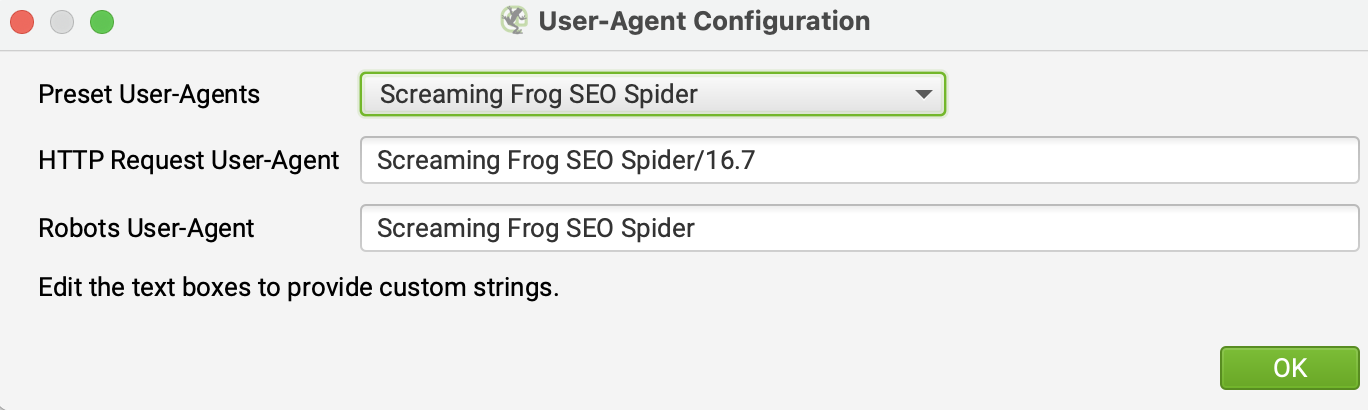

User-Agent: Here you can set which User-Agent is used to request pages before you start the crawl. Some sites may have blocked the Screaming Frog User-Agent, in which case a crawl with that User-Agent will not work. You can use this area to test your site's crawlability with different User-Agent options.

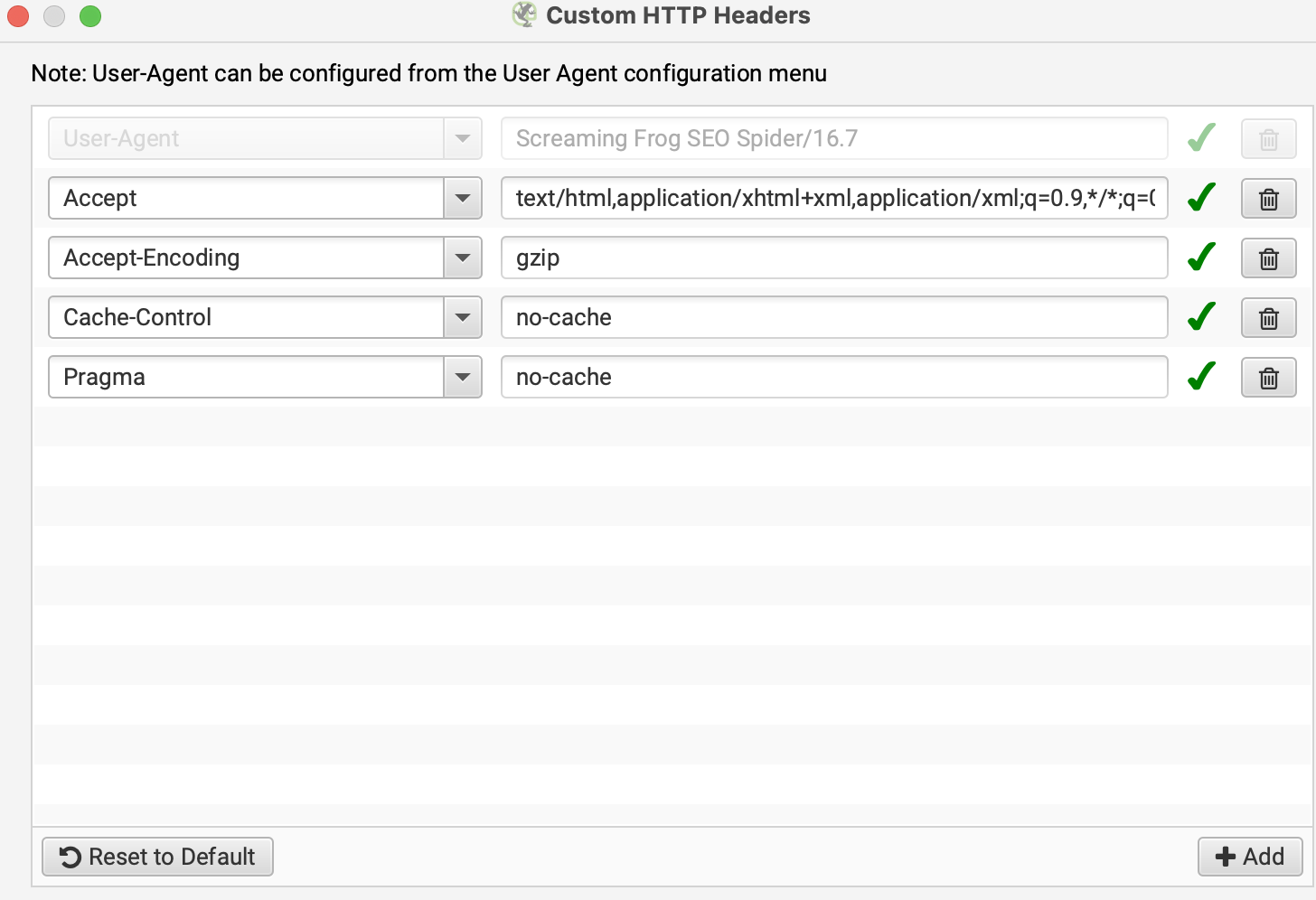

HTTP Header: The HTTP Header option lets you crawl while sending custom HTTP request headers from Screaming Frog.
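What a custom User-Agent or extra request header looks like on the wire can be shown with Python's standard `urllib.request`. The sketch only builds the request object and does not send it. The `build_request` helper, the example.com URL, and the `Accept-Language` value are assumptions.

```python
from urllib.request import Request

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_request(url, user_agent, extra_headers=None):
    """Build (but do not send) a request with a custom User-Agent and headers."""
    headers = {"User-Agent": user_agent}
    headers.update(extra_headers or {})
    return Request(url, headers=headers)


request = build_request(
    "https://example.com/",
    GOOGLEBOT_UA,
    extra_headers={"Accept-Language": "tr-TR"},
)
# urllib stores header names with only the first letter capitalized.
print(request.header_items())
```

Comparing the server's response for two different User-Agent values is the same test this setting lets you run across a whole crawl.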

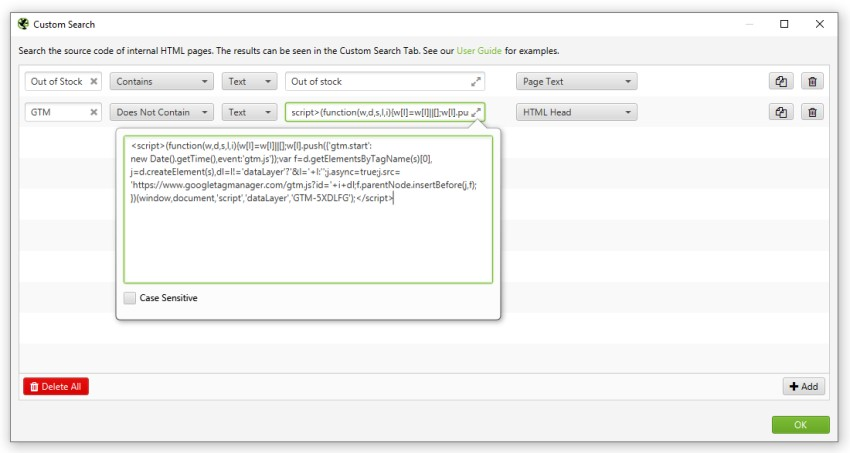

Custom Search: With this section you can search a site's source code for any value you choose. You can look for the value in the HTML as Text or Regex, and get results with the “Contains” or “Does Not Contain” options.
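The four combinations (Text or Regex, Contains or Does Not Contain) reduce to a small function. This is a sketch of the logic only. The function name, the mode strings, and the sample HTML are hypothetical.

```python
import re


def custom_search(html, query, use_regex=False, mode="contains"):
    """Check a page's HTML for a plain-text or regex query."""
    if use_regex:
        found = re.search(query, html) is not None
    else:
        found = query in html
    if mode == "contains":
        return found
    if mode == "does_not_contain":
        return not found
    raise ValueError(f"unknown mode: {mode}")


html = '<html><head><script src="https://example.com/tag.js?id=GTM-ABC123"></script></head></html>'
print(custom_search(html, "GTM-ABC123"))                          # True
print(custom_search(html, r"GTM-[A-Z0-9]+", use_regex=True))      # True
print(custom_search(html, "noindex", mode="does_not_contain"))    # True
```

A typical use is finding pages that are missing a tracking snippet, which is the "does not contain" case.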

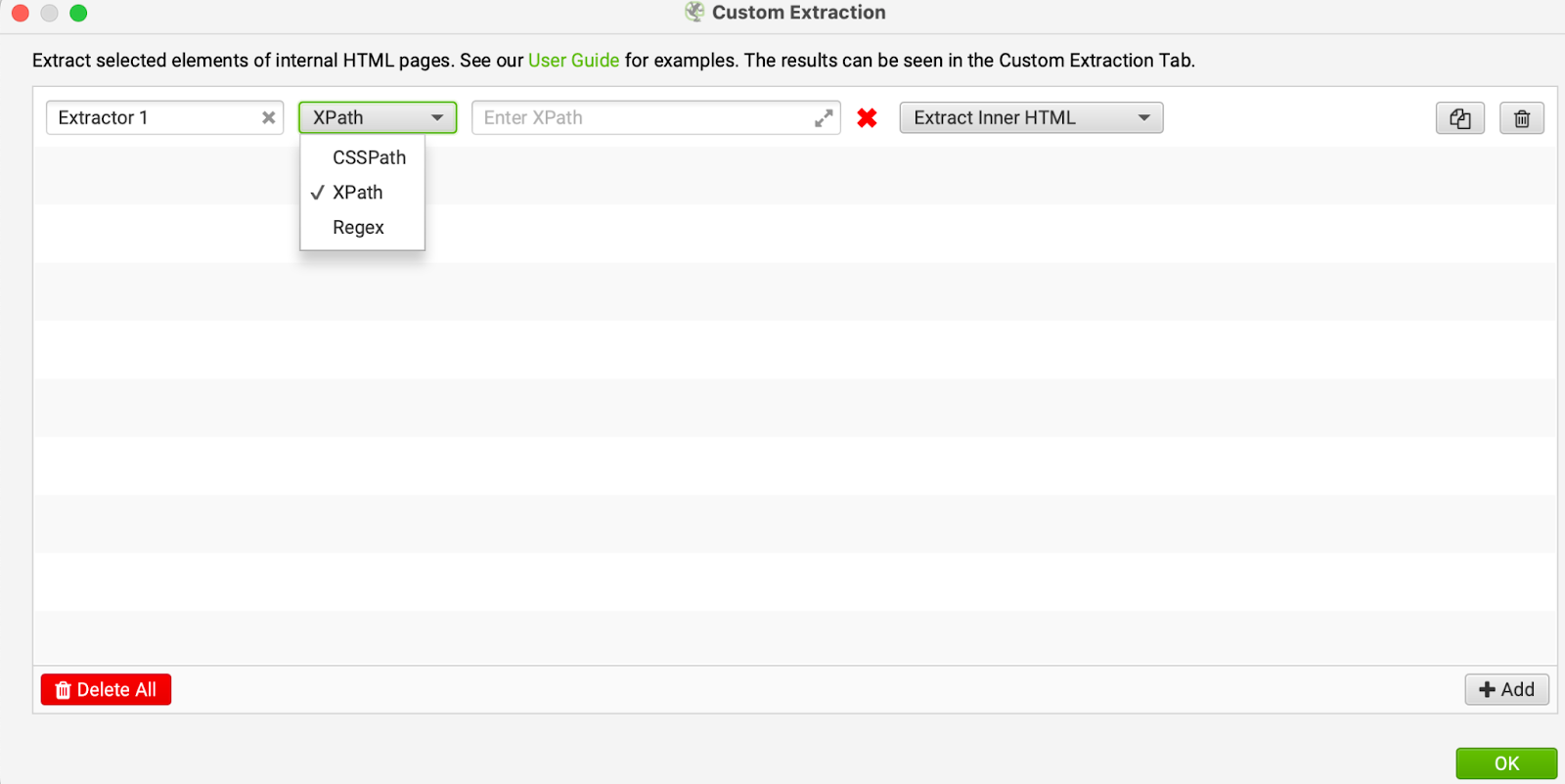

Custom Extraction: Lets you extract data from a site's HTML using CSSPath, XPath, or Regex. For example, you can pull the product codes from an e-commerce site. This tool only extracts data from pages that return a 200 response code. For data that is rendered rather than present in the static HTML, you can switch to JavaScript rendering mode.
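The product-code example can be sketched with the Regex option. The sample HTML, the `sku` class name, and the `extract` helper are hypothetical, and the status-code check mirrors the 200-only rule described above.

```python
import re

# Hypothetical product listing markup.
SAMPLE_HTML = (
    '<div class="product"><span class="sku">SKU-10423</span></div>'
    '<div class="product"><span class="sku">SKU-20981</span></div>'
)


def extract(html, pattern, status_code=200):
    """Return every capture-group match, but only for pages that returned 200."""
    if status_code != 200:
        return []
    return re.findall(pattern, html)


print(extract(SAMPLE_HTML, r'<span class="sku">([^<]+)</span>'))
# ['SKU-10423', 'SKU-20981']
```

For anything beyond simple, regular markup, a CSSPath or XPath expression is usually more robust than a regex.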

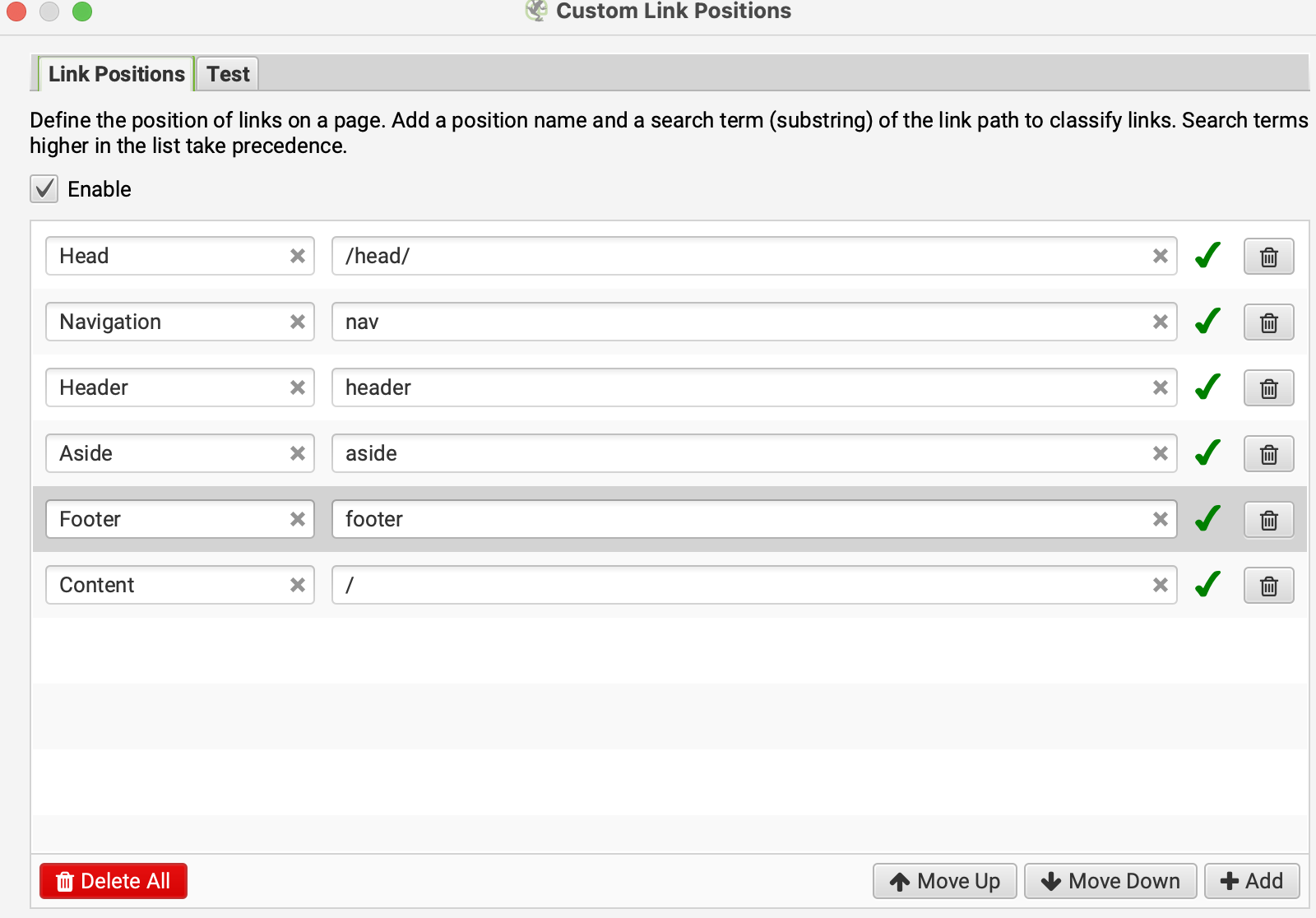

Custom Link Positions: Screaming Frog crawls the links in the content, sidebar, and footer areas of a web page and categorizes them by position. With this tool you can check whether links matching the criteria you define are present.

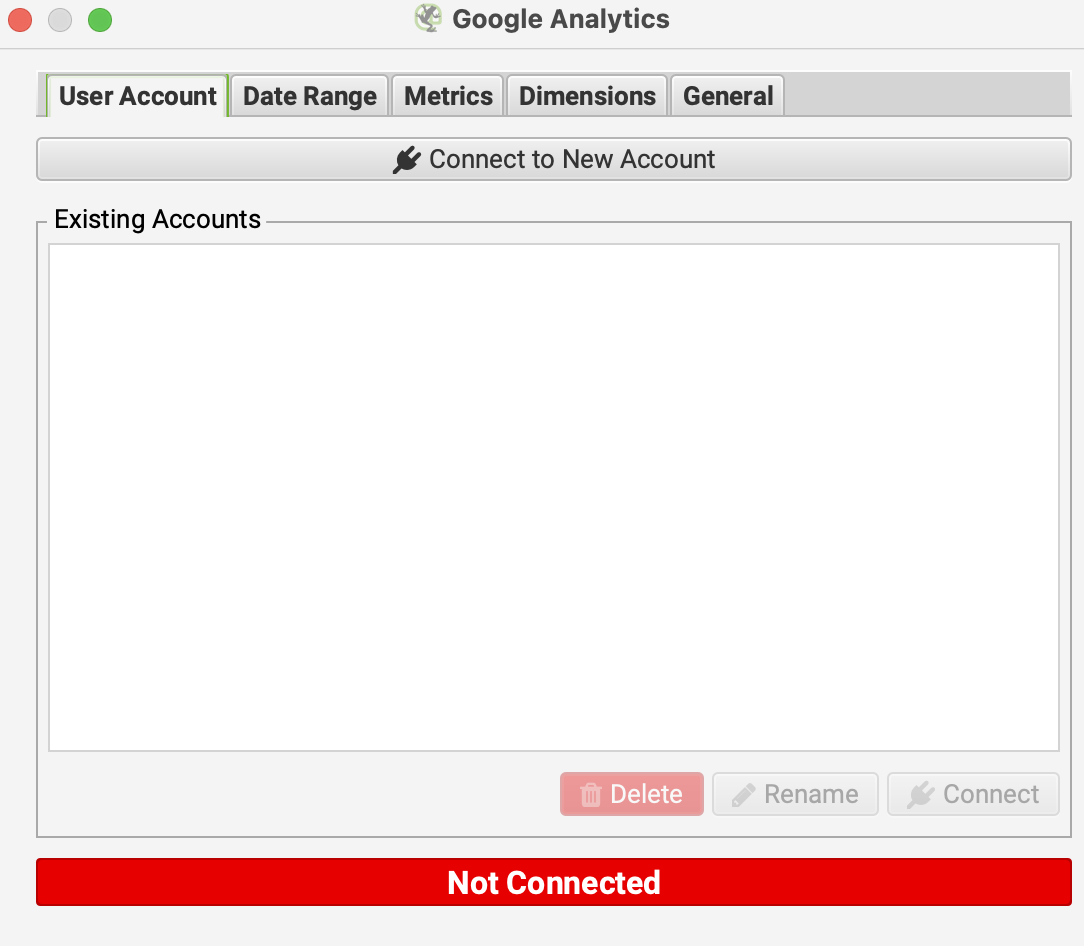

Google Analytics API: You can run more detailed crawls by connecting your Google Analytics account to Screaming Frog. To connect, open the Google Analytics tab in the API Access menu and click the “Connect to New Account” button.

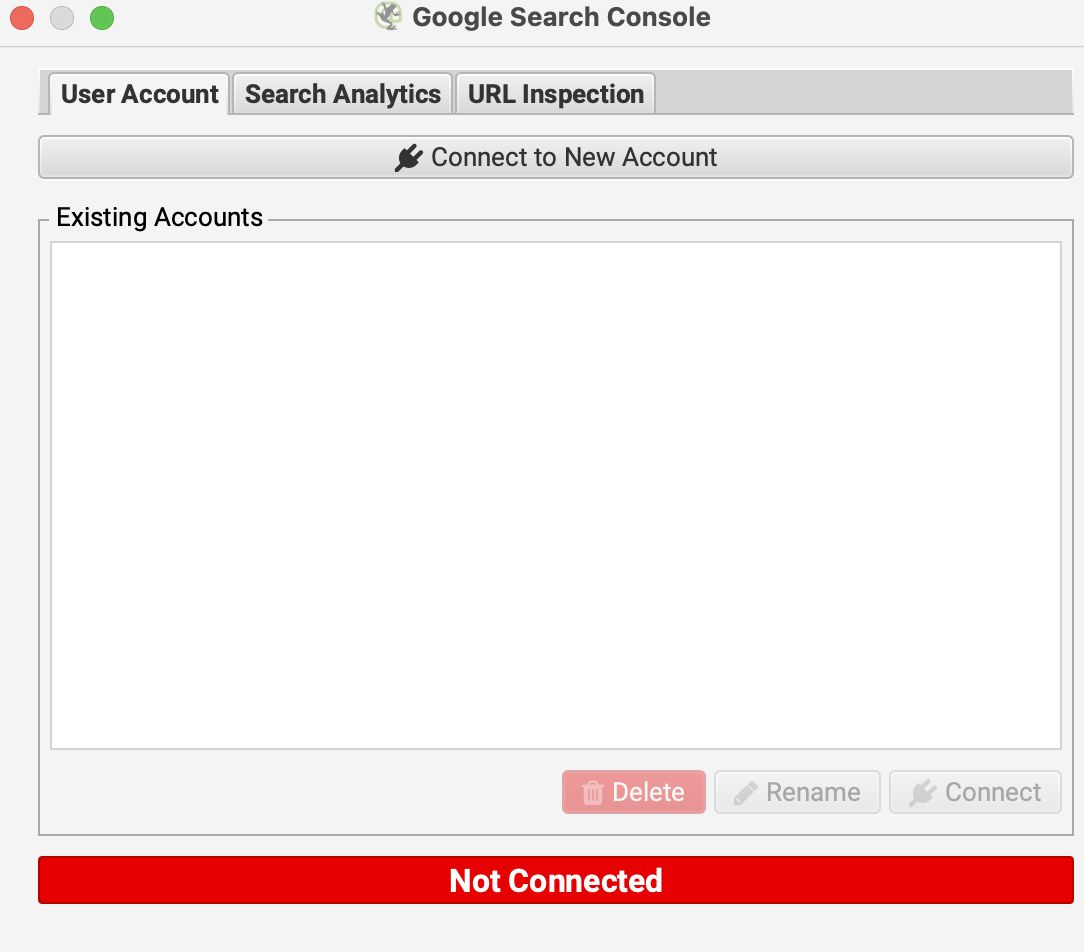

Search Console API: To connect Search Console, open the Google Search Console tab in the API Access menu, click the “Connect to New Account” button in the window that opens, select your account, and grant the permissions. This also lets you check URLs that Google crawled in the past but that are no longer on your site.

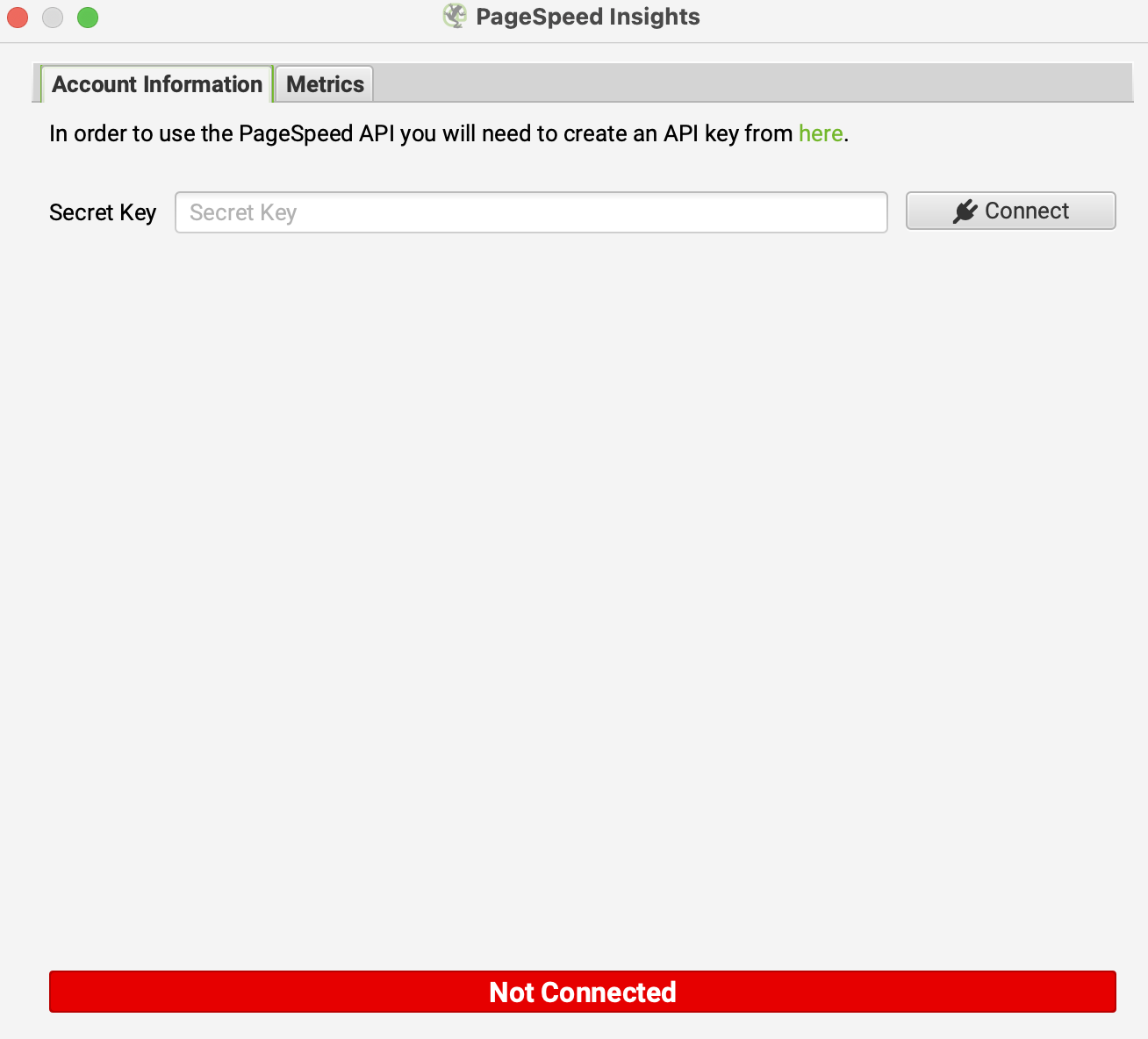

PageSpeed Insights API: To see the speed values from PageSpeed Insights alongside your Screaming Frog crawl, you need to enter your Secret Key in this section. You can then see the speed performance metrics of the crawled URLs.
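For context, this is roughly what a direct call to the public PageSpeed Insights v5 endpoint looks like when made outside the tool. The sketch only builds the request URL and sends nothing. `YOUR_API_KEY` is a placeholder, the `build_psi_url` helper is hypothetical, and how Screaming Frog itself issues the request is not covered here.

```python
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, api_key, strategy="mobile"):
    """Build the PageSpeed Insights request URL for one page."""
    query = urlencode({"url": page_url, "key": api_key, "strategy": strategy})
    return f"{PSI_ENDPOINT}?{query}"


print(build_psi_url("https://example.com/", "YOUR_API_KEY"))
```

Each crawled URL needs its own request of this kind, so speed data adds noticeably to the total crawl time.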

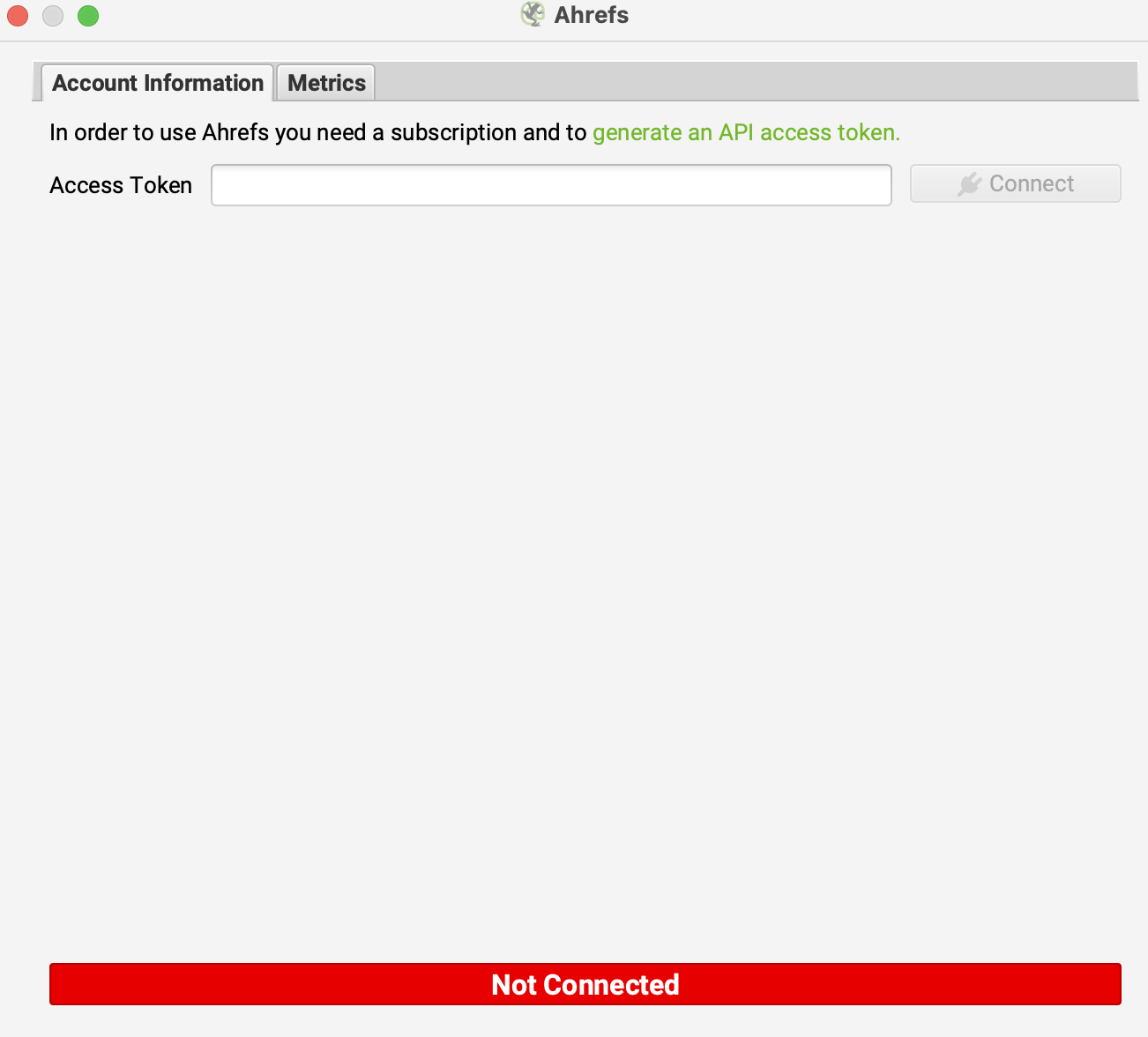

Ahrefs API Connection: If you want to see Ahrefs data in your Screaming Frog crawl results, you can connect your Ahrefs account to Screaming Frog. To do so, open the Ahrefs tab in the API Access menu and enter your Access Token.

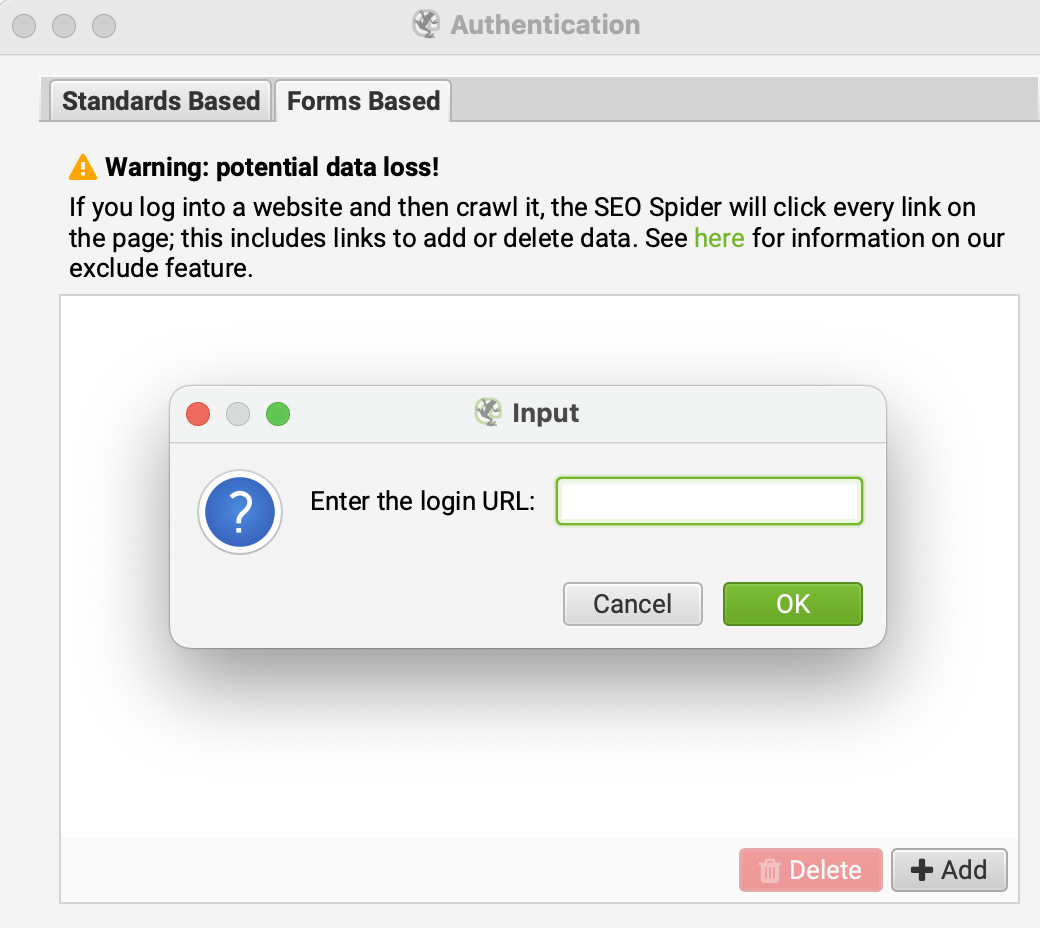

Authentication: If the site you want to crawl only works after a user logs in, you can save the login URL, your username, and your password in Screaming Frog's “Forms Based” section. The bot will then log in first and be able to crawl the pages behind the login as well.

More resources

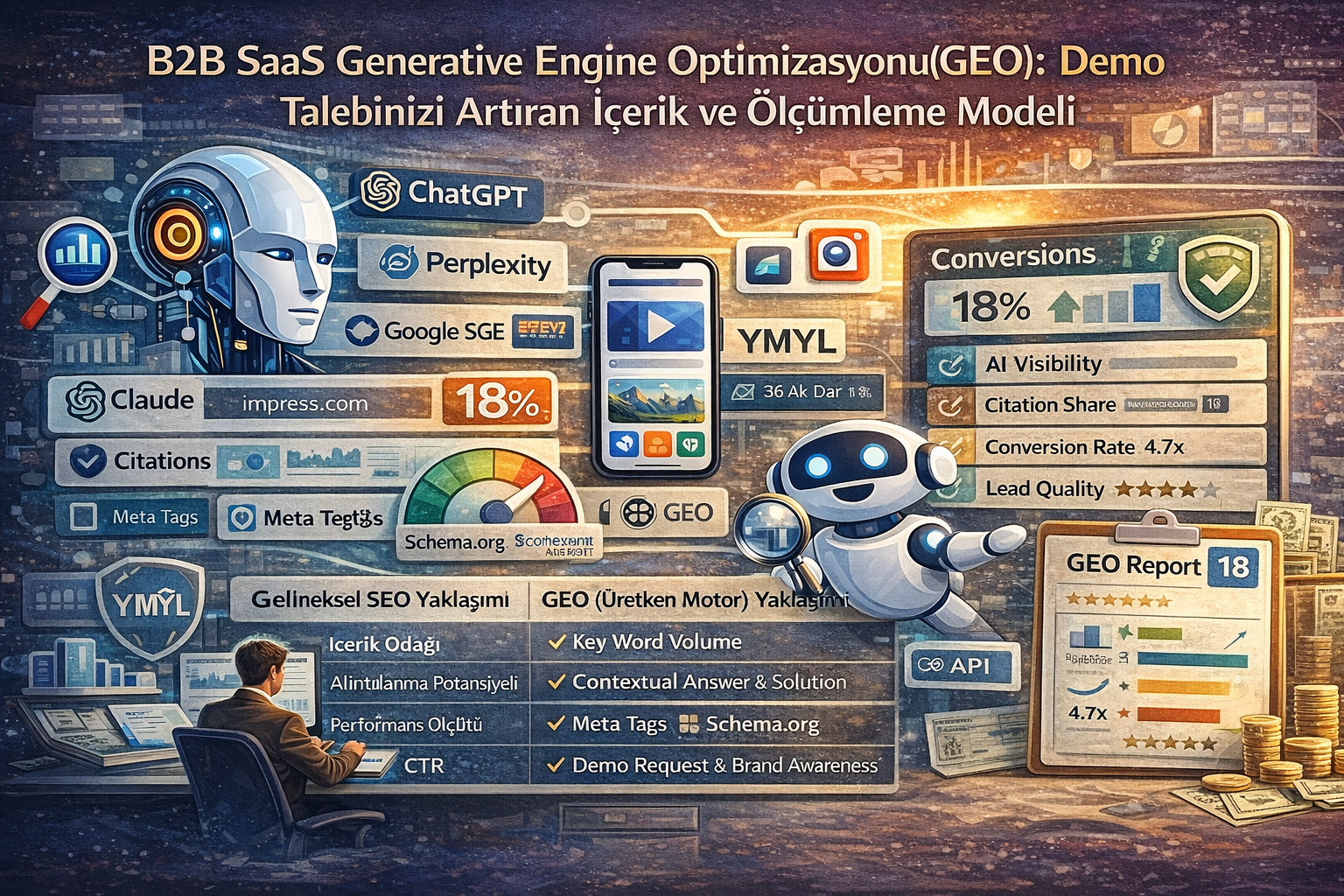

B2B SaaS Generative Engine Optimizasyonu (GEO): Demo Talebinizi Artıran İçerik ve Ölçümleme Modeli

Dijital pazarlama dünyası, geleneksel arama motoru optimizasyonundan (SEO), yapay zeka odaklı arama...

Source Term Vector Nedir?

Source Term Vector, bir web sitesinin zaman içinde ürettiği içerikler, bu içeriklerin eşleştiği sorg...

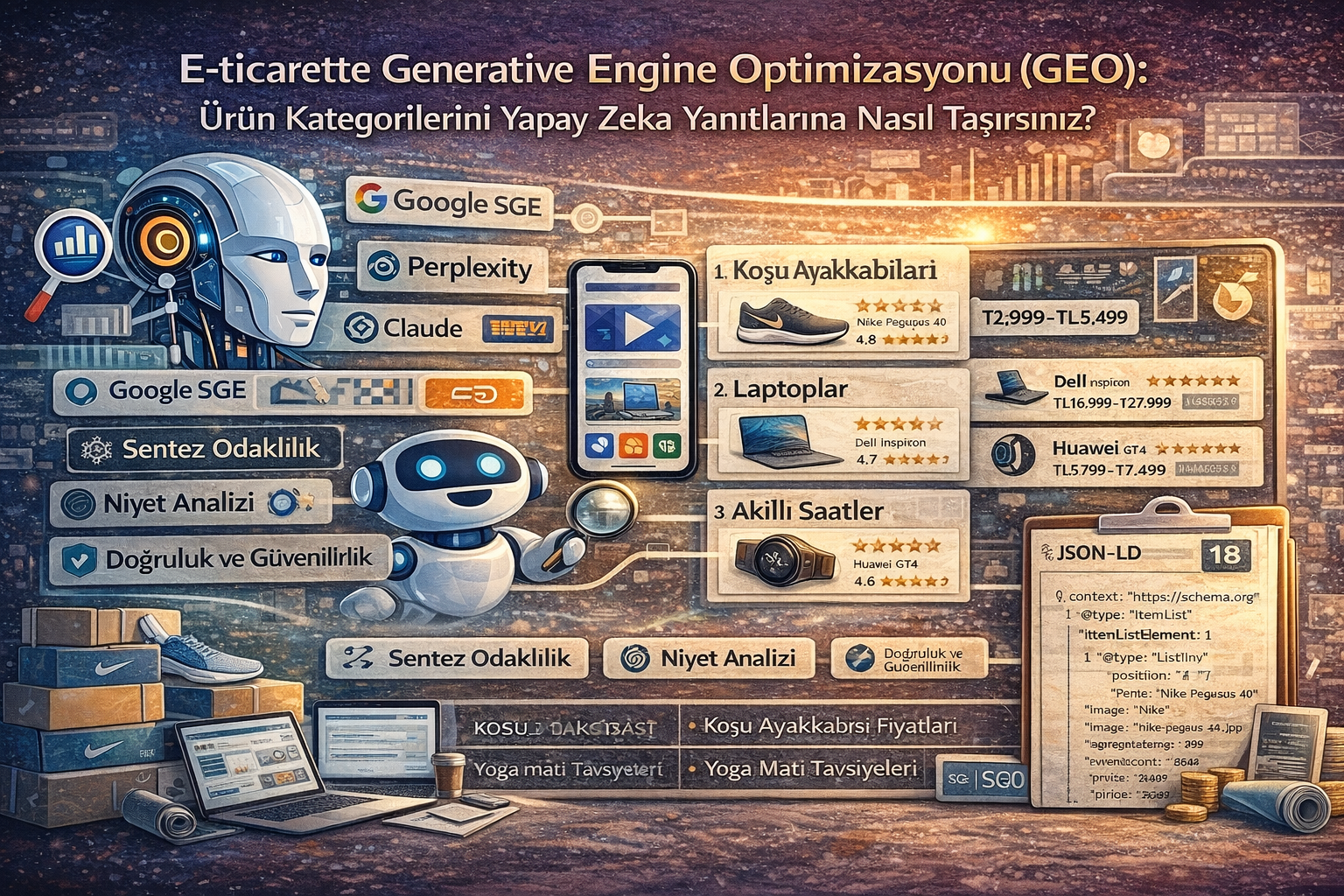

E-ticarette Generative Engine Optimizasyonu (GEO): Ürün Kategorilerini Yapay Zeka Yanıtlarına Nasıl Taşırsınız?

Dijital pazarlama dünyası, yapay zeka teknolojilerinin arama motoru deneyimini kökten değiştirmesiyl...